
Introduction

Aim & composition of this manual

This manual explains the functions of GridDB.

This manual is intended for administrators in charge of the operational management of GridDB, and for designers and developers who perform system design and development using GridDB.

The contents of this manual are as follows.

- What is GridDB?

- Describes the features and application examples of GridDB.

- Structure of GridDB

- Describes the cluster operating structure in GridDB.

- The data model of GridDB

- Describes the data model of GridDB.

- Functions provided by GridDB

- Describes the data management functions and operating functions provided by GridDB.

- Parameter

- Describes the parameters to control the operations in GridDB.

- GridDB Community Edition is only available in single configuration, and cluster configuration with multiple nodes is limited only to GridDB Enterprise Edition.

- OS user (gsadm) is created when GridDB is installed using the package.

- ODBC is Enterprise Edition only.

What is GridDB?

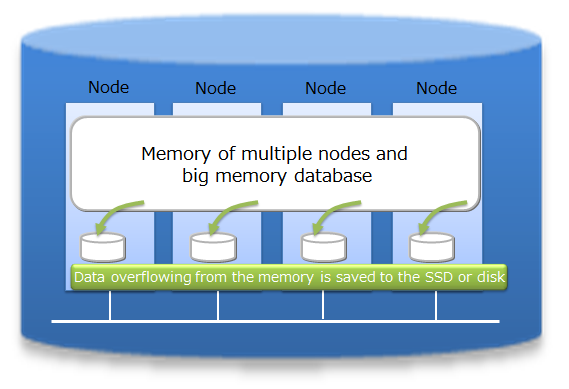

GridDB is a distributed NoSQL database to manage a group of data (known as a row) that is made up of a key and multiple values. Besides having a composition of an in-memory database that arranges all the data in the memory, it can also adopt a hybrid composition combining the use of a disk (including SSD as well) and a memory. By employing a hybrid composition, it can also be used in small scale, small memory systems.

In addition to the 3 Vs (volume, variety, velocity) required in big data solutions, data reliability/availability is also assured in GridDB. Using the autonomous node monitoring and load balancing functions, labor-saving can also be realized in cluster applications.

Features of GridDB

Big data (volume)[Enterprise Edition]

As the scale of a system expands, the data volume handled increases and thus the system needs to be expanded so as to quickly process the big data.

System expansion can be broadly divided into 2 approaches - scale-up (vertical scalability) and scale-out (horizontal scalability).

-

What is scale-up (vertical scalability)?

This approach reinforces the system by adding memory to the operating machines, using SSDs for the disks, adding processors, and so on. Generally, this approach reduces individual processing time and increases the system processing speed. On the other hand, because this is not a cluster configuration using multiple machines, the nodes must be stopped before the scale-up operation, and failure recovery is also time-consuming once a failure occurs.

-

What is scale-out (horizontal scalability)?

This approach increases the number of nodes constituting a system to improve the processing capability. Since multiple nodes are generally set to operate in coordination, this approach features that there is no need to completely stop the service during maintenance or even when a failure occurs. However, the application management time and effort increase as the number of nodes increases. This architecture is suitable for performing highly parallel processing.

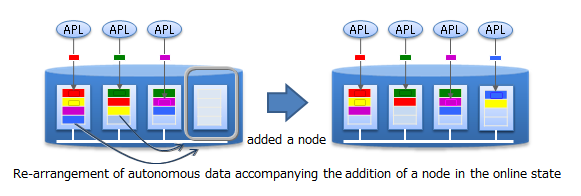

In GridDB, in addition to the scale-up approach of reinforcing the operating nodes, the system can be expanded with a scale-out approach in which new nodes are incorporated into an operating cluster.

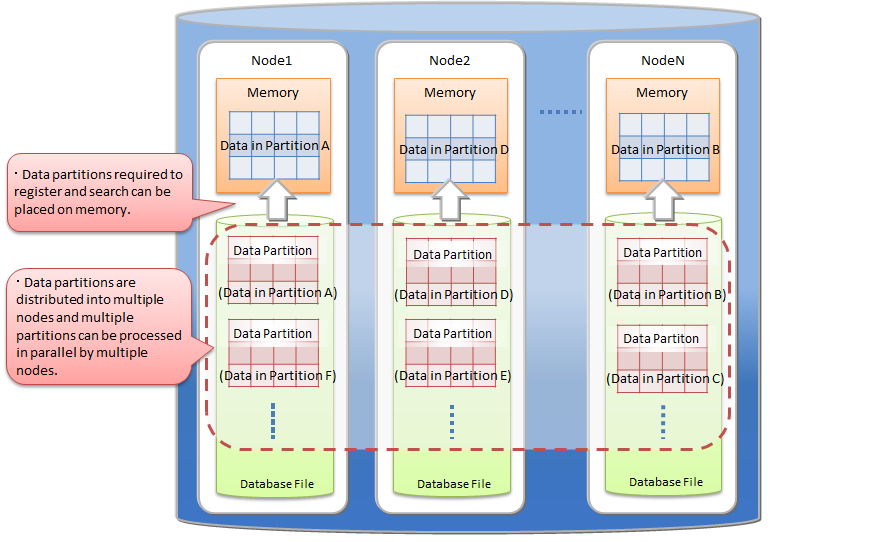

As an in-memory processing database, GridDB can handle a large volume of data with its scale-out model. In GridDB, data is distributed throughout the nodes inside a cluster that is composed of multiple nodes. That is, GridDB provides a large-scale memory database by handling memories of more than one node as one big memory space.

Moreover, since GridDB manages data both in memories and on a disk, even when a single node is in operation, it can maintain and access the data larger than its memory size. A large capacity that is not limited by the memory size can also be realized.

System expansion can be carried out online with a scale-out approach. That is, without stopping the system in operation, the system can be expanded when the volume of data increases.

In the scale-out approach, data is relocated into the new nodes added to the system in accordance with the load of each existing node in the system. As GridDB will optimize the load balance, the application administrator does not need to worry about the data arrangement. Operation is also easy because a structure to automate such operations has been built into the system.

Various data types (variety)

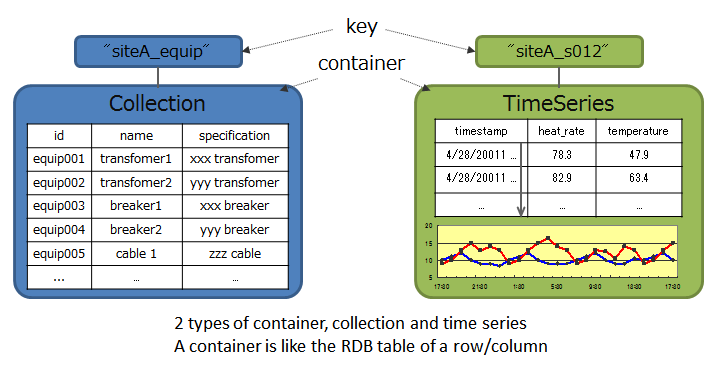

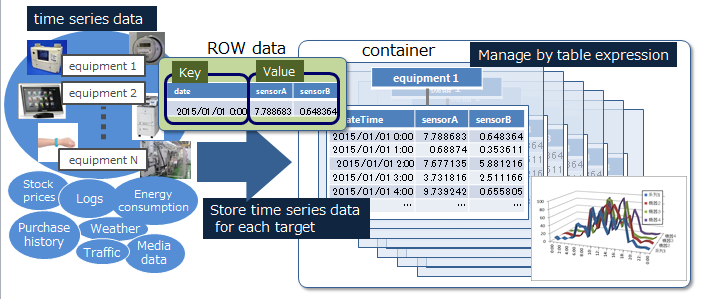

GridDB adopts a Key-Container data model that extends the Key-Value model. Data is stored in a structure called a container, which is equivalent to an RDB table. (A container can be considered an RDB table for easier understanding.)

When accessing data in GridDB, the model allows data to be narrowed down with a key thanks to its Key-Value database structure, which allows processing to be carried out at high speed. The design must prepare a container, which serves as the key, for each entity under management.

GridDB is suitable for handling large volumes of time series data (TimeSeries container), that is, values generated by a sensor or the like paired with their time of occurrence. Spatial data such as position information can also be registered in a container, and space-specific operations (spatial intersection) can be carried out on it. A variety of data can be handled because the system also supports non-standard data such as array data and BLOBs.
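The Key-Container idea can be pictured with a small in-memory sketch. This is not the GridDB client API. The `Container` class, its `put`/`get` methods, and the `sensor_001` name are hypothetical, and the sketch assumes that the first column acts as the row key.

```python
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class Container:
    """A named set of rows sharing one schema, similar to an RDB table."""
    name: str
    columns: tuple                      # column names; the first one is the row key
    rows: dict = field(default_factory=dict)

    def put(self, *values):
        if len(values) != len(self.columns):
            raise ValueError("row does not match the container schema")
        self.rows[values[0]] = values   # a row with an existing key is replaced

    def get(self, key):
        return self.rows.get(key)


# The store is addressed by container name first (the "Key" of Key-Container),
# here one container per managed entity (one sensor).
store = {}
store["sensor_001"] = Container("sensor_001", ("timestamp", "temperature"))
store["sensor_001"].put(datetime(2024, 1, 1, 0, 0), 21.5)
store["sensor_001"].put(datetime(2024, 1, 1, 0, 1), 21.7)

row = store["sensor_001"].get(datetime(2024, 1, 1, 0, 1))
print(row[1])
```

Selecting the container by name narrows the search to one entity before any row is examined, which is the effect the text attributes to the key.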

High-speed processing (velocity)

A variety of architectural features are built into GridDB to achieve high-speed processing.

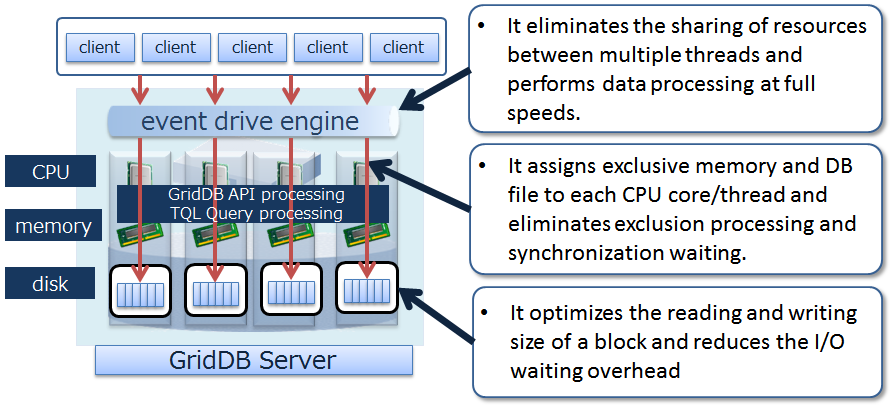

Processing is carried out in the memory space as much as possible

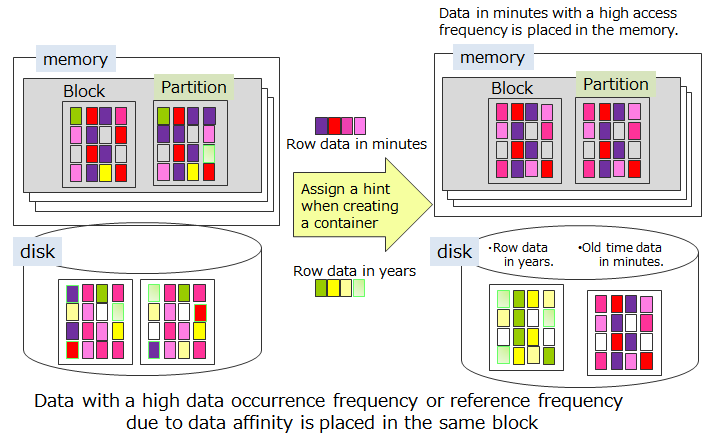

In a system in which all the data is located in memory and operations can be performed in-memory, there is no real need to be concerned about disk access overhead. However, in order to process a volume of data too large to hold in memory, the data accessed by the application must be localized and access to data on the disk must be reduced as much as possible.

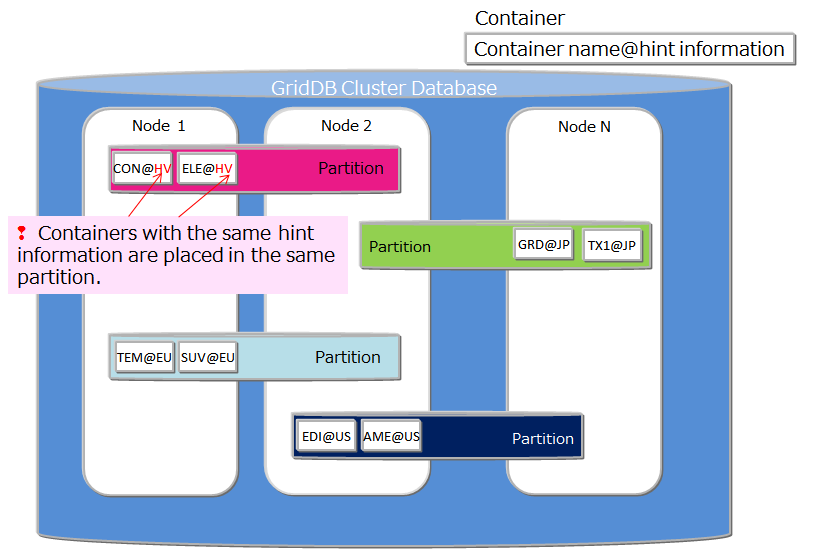

In order to localize data access from an application, GridDB provides a function to arrange related data in the same block as far as possible. Since data in the data block can be consolidated according to the hints provided in the data, the memory hit rate is raised during data access, thereby increasing the processing speed for data access. By setting hints for memory consolidation according to the access frequency and access pattern in the application, limited memory space can be used effectively for operation (Affinity function).

Reduces the overhead

In order to minimize waiting time caused by locks or latches in a simultaneous access to the database, GridDB allocates exclusive memory and DB files to each CPU core and thread, so as to eliminate waiting time for exclusive and synchronization processing.

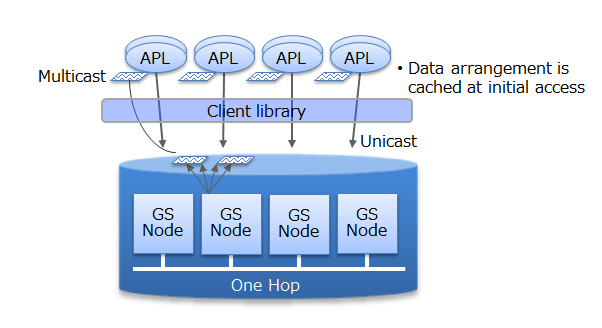

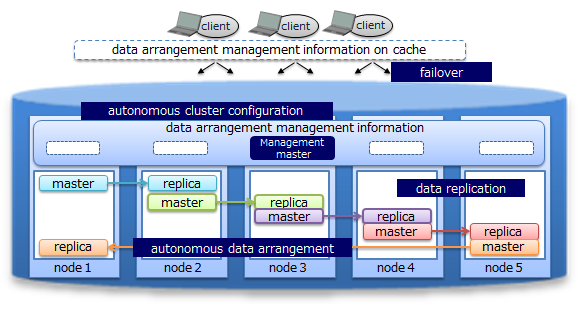

In addition, the client library caches the data arrangement the first time it accesses the database, so the client can access nodes directly. Since the target data can be accessed directly without going through the master node, which manages the operating status of the cluster and the data arrangement, access does not concentrate on the master node and communication cost is reduced substantially.

Processing in parallel

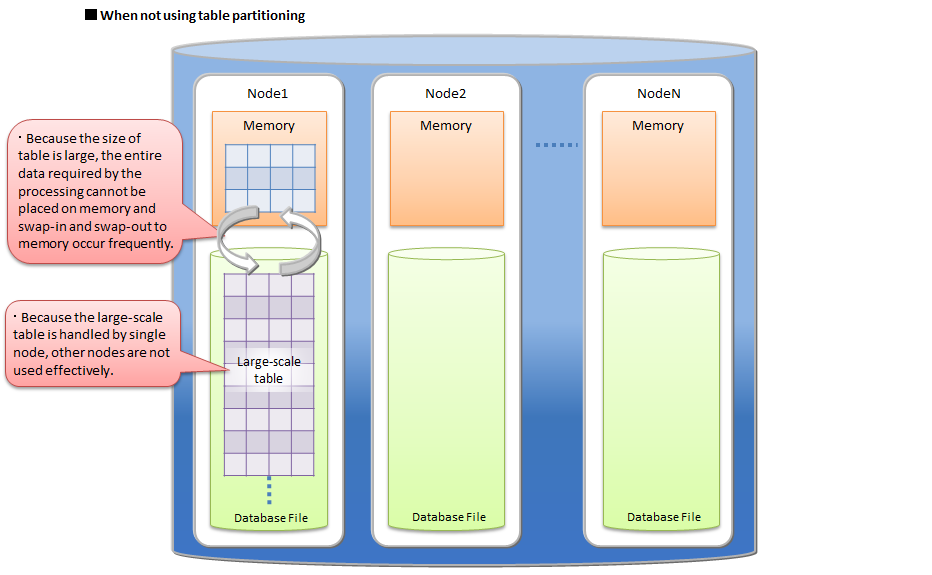

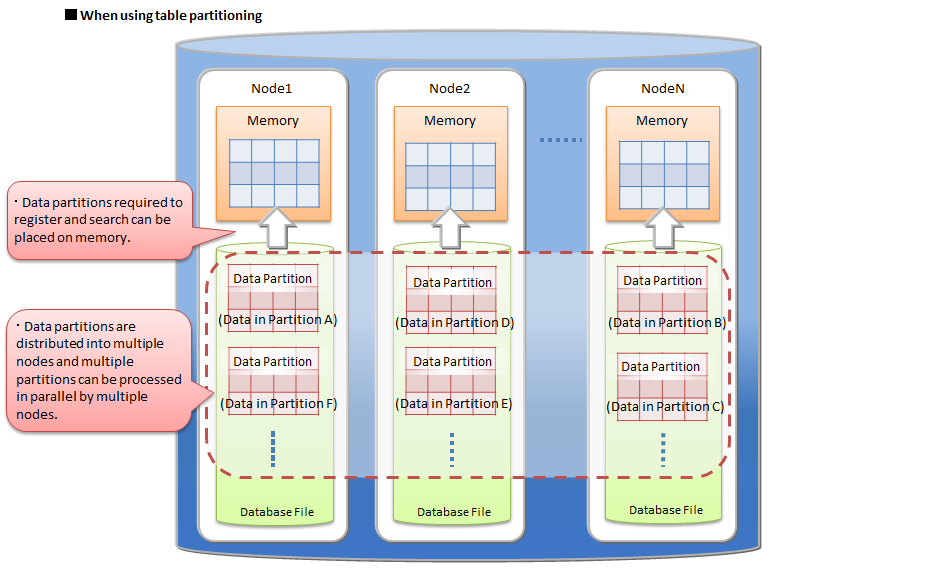

GridDB achieves high-speed processing through parallelism in two ways: a request is divided in the drive engine into units that can be processed in parallel and executed by threads within a node and across nodes, and a single large data set is distributed over multiple nodes (partitioning) so that it can be processed in parallel between nodes.

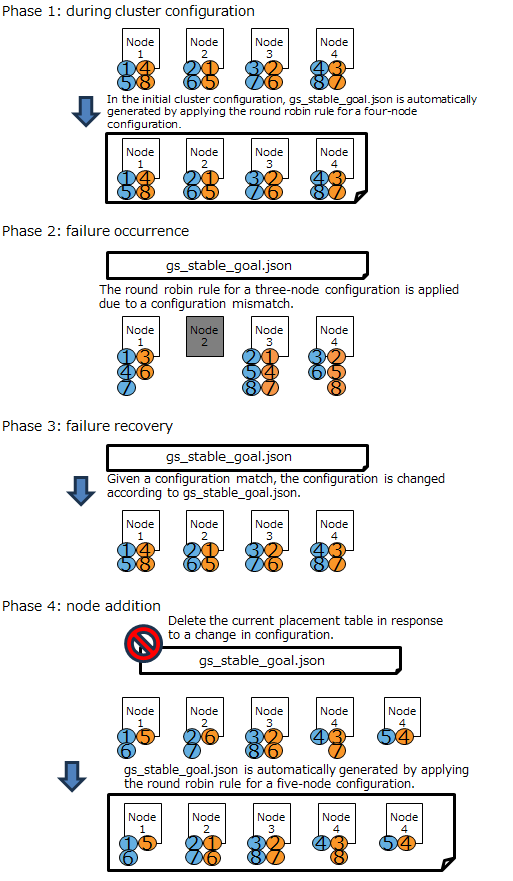

Reliability/availability[Enterprise Edition]

Data are duplicated in a cluster and the duplicated data, replicas, are located in multiple nodes. Replicas include master data, called an owner replica, and duplicated data called a backup. By using these replicas, processing can be continued in any of the nodes constituting a cluster even when a failure occurs. Special operating procedures are not necessary as the system will also automatically perform re-arrangement of the data after a node failure occurs (autonomous data arrangement). Data arranged in a failed node is restored from a replica and then the data is re-arranged so that the set number of replicas is reached automatically.

Replicas can be duplexed, triplexed, or multiplexed further according to the availability requirements.

Each node performs persistence of the data update information using a disk. Even if a failure occurs in the cluster system, all the registered and updated data up to the failure can be restored without being lost.

In addition, since the client also possesses cache information on the data arrangement and management, upon detecting a node failure, it will automatically perform a failover and data access can be continued using a replica.
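The recovery behavior described above (a backup takes over for a failed owner, then replicas are refilled up to the configured number) can be sketched as follows. This is only an illustration of the idea, not GridDB's actual algorithm. `PartitionReplicas`, `handle_node_failure`, and the node names are hypothetical, and `replica_count` is assumed to count the owner as well as the backups.

```python
from dataclasses import dataclass, field


@dataclass
class PartitionReplicas:
    owner: str                          # node holding the owner replica
    backups: list = field(default_factory=list)


def handle_node_failure(part, failed, live_nodes, replica_count):
    """Promote a backup if the owner failed, then refill missing backups."""
    part.backups = [n for n in part.backups if n != failed]
    if part.owner == failed:
        if not part.backups:
            raise RuntimeError("no replica left; data must be recovered from disk")
        part.owner = part.backups.pop(0)
    holders = {part.owner, *part.backups}
    for node in live_nodes:
        if len(holders) >= replica_count:
            break
        if node not in holders:
            part.backups.append(node)   # a new backup is created on this node
            holders.add(node)
    return part


part = PartitionReplicas(owner="node1", backups=["node2"])
handle_node_failure(part, "node1", ["node2", "node3", "node4"], replica_count=2)
print(part.owner, part.backups)
```

In this run node2 is promoted to owner and node3 receives a new backup, so the partition again has two replicas without operator intervention.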

GridDB Editions

GridDB has the following products.

- GridDB Community Edition (CE)

- GridDB Enterprise Edition (EE)

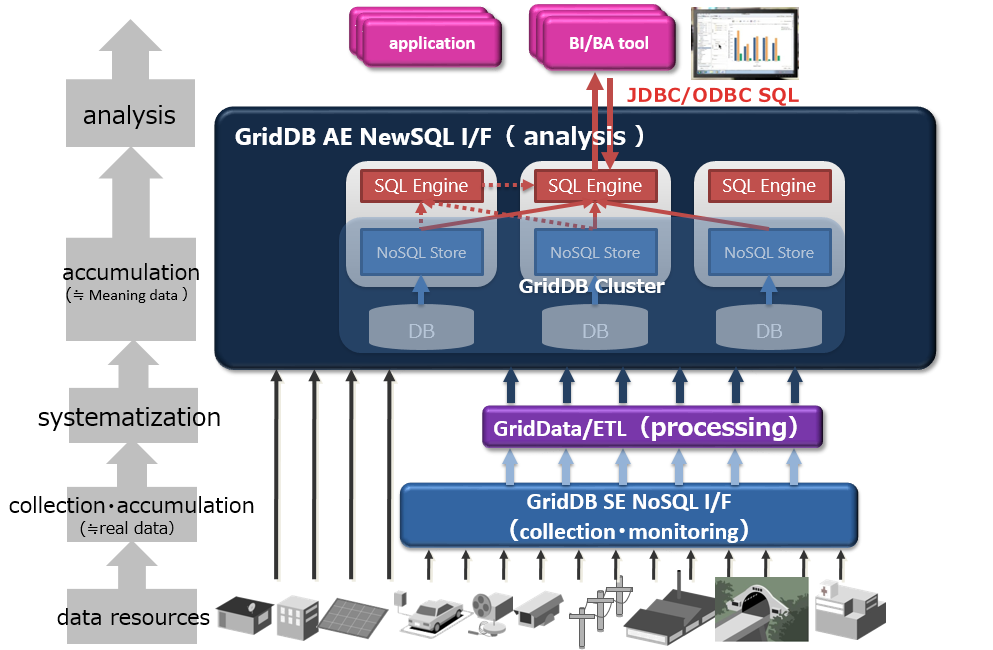

In addition to the features described in Features of GridDB above, GridDB has the following two features:

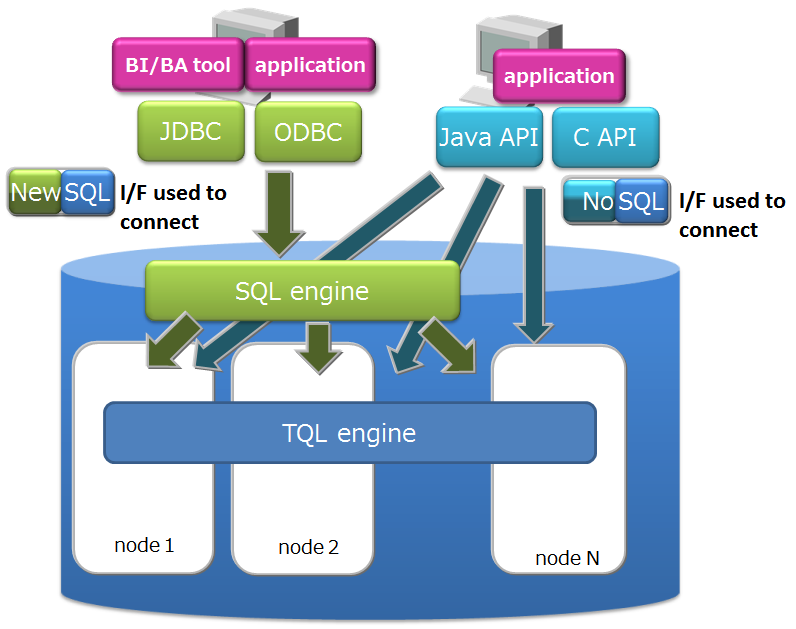

- NewSQL interface

- In addition to being SQL 92 compliant, GridDB supports ODBC (C language interface) and JDBC (Java interface) application interfaces.

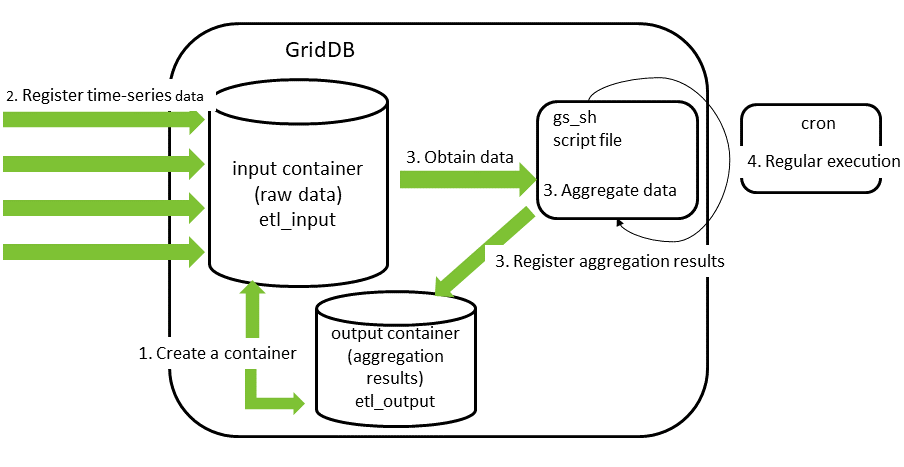

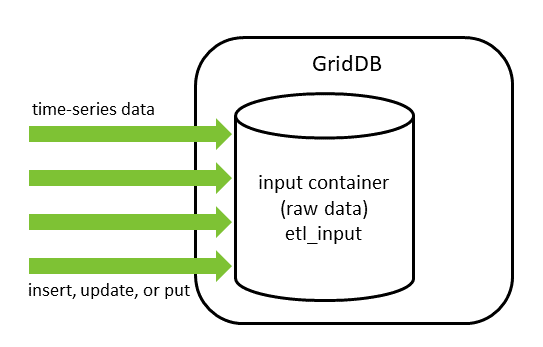

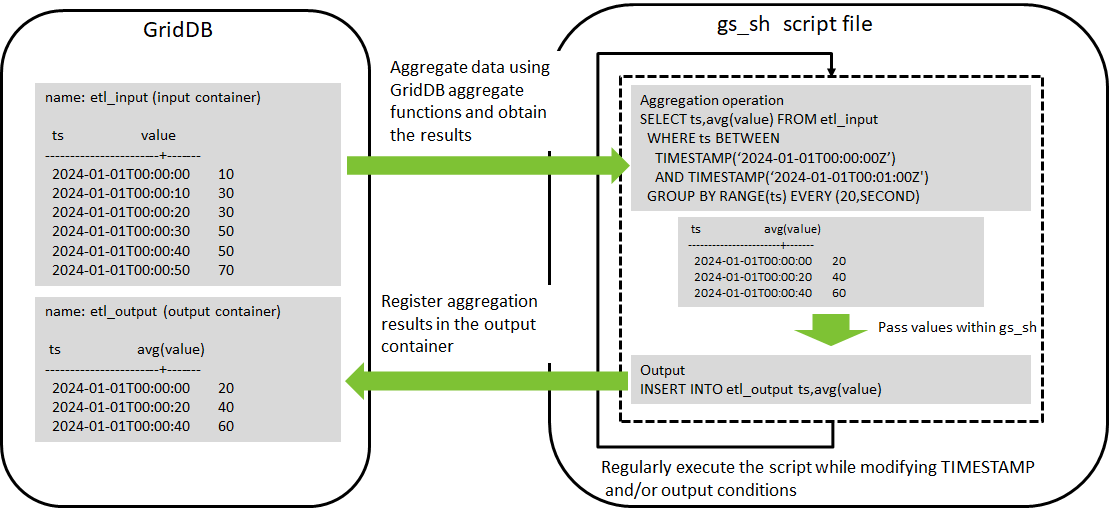

- By using ODBC/JDBC, direct access to the database from BI (Business Intelligence) or ETL (Extract, Transform, Load) tools becomes possible.

- Containers can be considered as tables and operated.

- Table partitioning function

- Partitioning function for high speed access to a huge table.

- Since data is divided into multiple parts and distributed to multiple nodes, it is possible to parallelize data search and extraction from the table, thus realizing faster data access.
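How dividing a table by key spreads work over nodes can be sketched with a generic hash scheme. This is an illustration under stated assumptions, not GridDB's internal placement logic. The CRC32 hash, the round-robin placement, and the names `data_partition_for` and `distribute` are all chosen for this example.

```python
import zlib
from collections import defaultdict


def data_partition_for(key: str, partition_count: int) -> int:
    """Map a row key to a data partition with a stable hash (CRC32 here)."""
    return zlib.crc32(key.encode("utf-8")) % partition_count


def distribute(keys, partition_count, nodes):
    """Group keys by data partition, then place partitions on nodes round-robin."""
    placement = defaultdict(list)
    for key in keys:
        part = data_partition_for(key, partition_count)
        placement[nodes[part % len(nodes)]].append(key)
    return dict(placement)


keys = [f"device-{i}" for i in range(1000)]
placement = distribute(keys, partition_count=8,
                       nodes=["node1", "node2", "node3", "node4"])
for node in sorted(placement):
    print(node, len(placement[node]))
```

Because each node holds only part of the table, a search can run on all nodes at once and each node scans a fraction of the rows.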

The features of each interface are as follows.

- NoSQL interface (NoSQL I/F)

- Client APIs (C, Java) of NoSQL I/F focus on batch processing of big data at high speed.

- It is used for data collection, high-speed access of key value data, simple aggregate calculation using TQL, etc.

- NewSQL interface (NewSQL I/F)

- ODBC/JDBC of NewSQL I/F focus on cooperation with existing applications and development productivity using SQL.

- It is used to classify and analyze data collected using BI tools, etc.

When using GridDB, both NoSQL I/F and NewSQL I/F can be used depending on the use case.

The GridDB database and NoSQL/NewSQL interface of GridDB are compatible within the same major version (e.g., a minor version upgrade). The version notation is as follows:

- The version of GridDB is represented as “X.Y[.Z]”, and each symbol represents the following.

- Major version (X): Changed for significant enhancements.

- Minor version (Y): Changed for expanding or adding functions.

- Revision (Z): Changed for bug fixes and similar corrections.
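The “X.Y[.Z]” notation and the same-major-version rule can be checked with a short helper. This is a minimal sketch. The function names and the version strings in the usage lines are illustrative, and treating a missing revision as 0 is an assumption made here.

```python
import re
from typing import NamedTuple


class Version(NamedTuple):
    major: int
    minor: int
    revision: int


def parse_version(text: str) -> Version:
    """Parse "X.Y" or "X.Y.Z"; a missing revision is treated as 0."""
    m = re.fullmatch(r"(\d+)\.(\d+)(?:\.(\d+))?", text.strip())
    if m is None:
        raise ValueError(f"not an X.Y[.Z] version: {text!r}")
    return Version(int(m.group(1)), int(m.group(2)), int(m.group(3) or 0))


def same_major(a: str, b: str) -> bool:
    """True when both versions share the major version X."""
    return parse_version(a).major == parse_version(b).major


print(same_major("5.3.1", "5.6"))   # same major version
print(same_major("4.6", "5.0"))     # different major version
```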

When using both NoSQL I/F and NewSQL I/F in GridDB, check the following specifications in advance.

- Containers created with NoSQL I/F can be accessed and operated as tables in NewSQL I/F.

- Tables created with NewSQL I/F can be accessed as containers in NoSQL I/F. The names of tables and containers must be unique.

Terminology

Describes the terms used in GridDB in a list.

| Term | Description |

|---|---|

| Node | Refers to the individual server process to perform data management in GridDB. |

| Cluster | Single or a set of nodes that perform data management together in an integrated manner. |

| Master node | Node to perform a cluster management process. |

| Follower node | All other nodes in the cluster other than the master node. |

| number of nodes constituting a cluster | Refers to the number of nodes constituting a GridDB cluster. When starting GridDB for the first time, the number is used as a threshold value for the cluster to be valid. (Cluster service is started when the number of nodes constituting a cluster joins the cluster.) |

| number of nodes already participating in a cluster | Number of nodes currently in operation that have been incorporated into the cluster among the nodes constituting the GridDB cluster. |

| Block | A block is a data unit for data persistence processing in a disk (hereinafter referred to as a checkpoint) and is the smallest physical data management unit in GridDB. Multiple container data are arranged in a block. Block size is set up in a definition file (cluster definition file) before the initial startup of GridDB. |

| Partition | A partition is a unit of data management for placing a container and is equivalent to a data file on the file system when persisting data to a disk. One partition corresponds to one data file. It is also the smallest unit of data placement between clusters, as well as a unit of data movement and copy for adjusting the load balance between nodes (rebalancing) and for managing data multiplexing (replicas) in the event of a failure. |

| Row | Refers to one row of data registered in a container or table. Multiple rows are registered in a container or table. A row consists of values of columns corresponding to the schema definition of the container (table). |

| Container (Table) | Container to manage a set of rows. It may be called a container when operated with NoSQL I/F, and may be called a table when operated with NewSQL I/F. Both names refer to the same object. There are two types of container: collection and timeseries container. |

| Collection (table) | One type of container (table) to manage rows having a general key. |

| Timeseries container (timeseries table) | One type of container (table) to manage rows having a timeseries key. Possesses a special function to handle timeseries data. |

| Database file | A database file is a group of files where the data retained by nodes configuring a cluster is written to disks or SSDs and is persisted. A database file is a collective term for data files, checkpoint log files, and transaction log files. |

| Data file | A file to which partition data is written. Updated information located on the memory is reflected at the interval (/checkpoint/checkpointInterval) specified in the node definition file. |

| Checkpoint log file | This is a file for storing block management information for a partition. Block management information is written in smaller batches at the interval (/checkpoint/checkpointInterval) specified in the node definition file. |

| Transaction log file | Update information of the transaction is saved sequentially as a log. |

| LSN (Log Sequence Number) | Shows the update log sequence number, which is assigned to each partition during the update in a transaction. The master node of a cluster configuration maintains the maximum number of LSN (MAXLSN) of all the partitions maintained by each node. |

| Replica | Replication is the process of creating an exact copy of the original data. One or more replicas are created and stored on multiple nodes, so that copies of a partition exist across the nodes. There are two forms of replica: master and backup. The former is the original or master data, whereas the latter is a copy that is referenced in case of failure. |

| Owner node | A node that can update a container in a partition. A node that records the container serving as a master among the replicated containers. |

| Backup node | A node that records the container for backup data among the replicated containers. |

| Definition file | Definition file includes two types of parameter files: gs_cluster.json, hereinafter referred to as a cluster definition file, used when composing a cluster; gs_node.json, hereinafter referred to as a node definition file, used to set the operations and resources of the node in a cluster. It also includes a user definition file for GridDB administrator users. |

| Event log file | Event logs of the GridDB server are saved in this file, including messages such as errors, warnings and so on. |

| Audit log file | It is a file in which audit logs of the GridDB server are stored. |

| OS user (gsadm) | An OS user has the right to execute operating functions in GridDB. An OS user named gsadm is created during the GridDB installation. |

| Administrator user | An administrator user is a GridDB user prepared to perform operations in GridDB. |

| General user | A user used in the application system. |

| user definition file | File in which an administrator user is registered. During initial installation, two administrators (system and admin) are registered. |

| Cluster database | General term for all databases that can be accessed in a GridDB cluster system. |

| Database | Theoretical data management unit created in a cluster database. A public database is created in a cluster database by default. Data separation can be realized for each user by creating a new database and giving a general user the right to use it. |

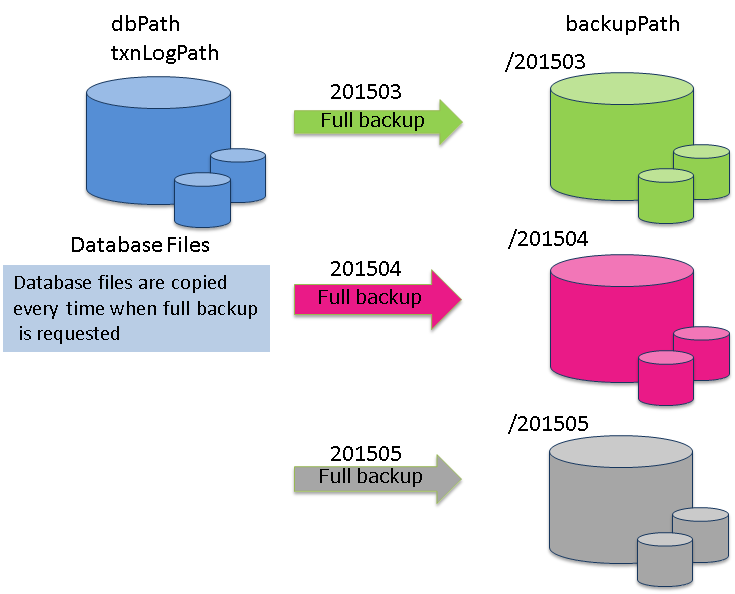

| Full backup | A backup of the cluster database currently in use is stored online in the backup directory specified in the node definition file. |

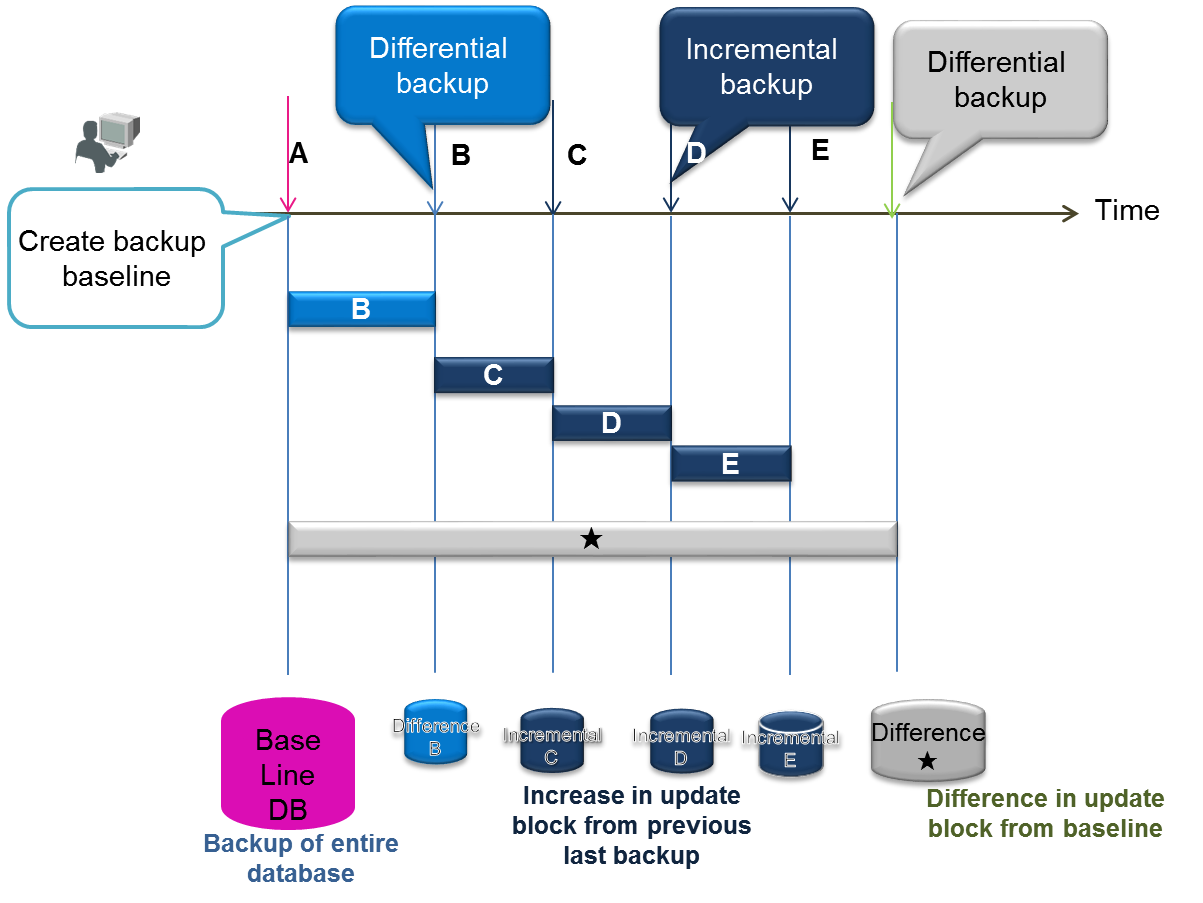

| Differential/incremental backup | A backup of the cluster database currently in use is stored online in the backup directory specified in the node definition file. In subsequent backups, only the difference in the update block after the backup is backed up. |

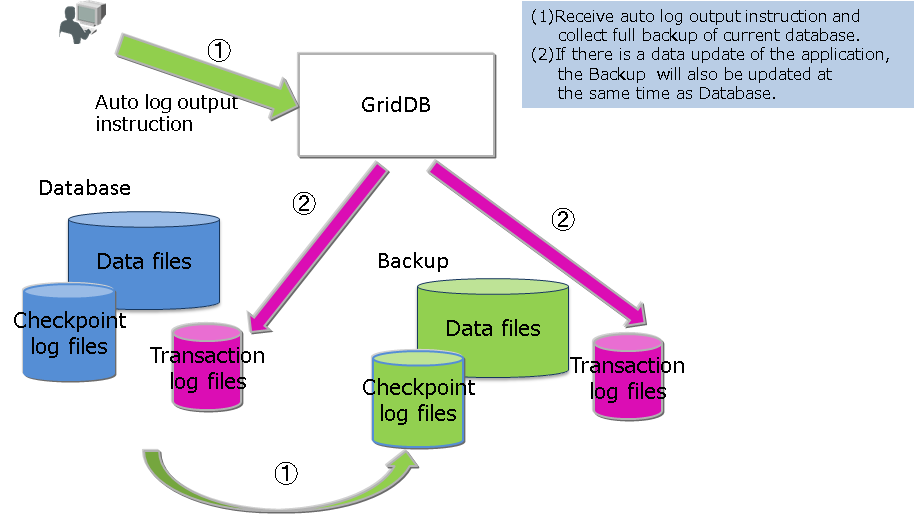

| Automatic log backup | In addition to backing up the cluster database currently in use in the specified directory online, the transaction log is also automatically picked up at the same timing as the transaction log file writing. The write timing of the transaction log file follows the value of /dataStore/logWriteMode in the node definition file. |

| Failover | When a failure occurs in a cluster currently in operation, the structure allows the backup node to automatically take over the function and continue with the processing. |

| Client failover | When a failure occurs in a cluster currently in operation, the structure allows the backup node to be automatically re-connected to continue with the processing as a retry process when a failure occurs in the API on the client side. |

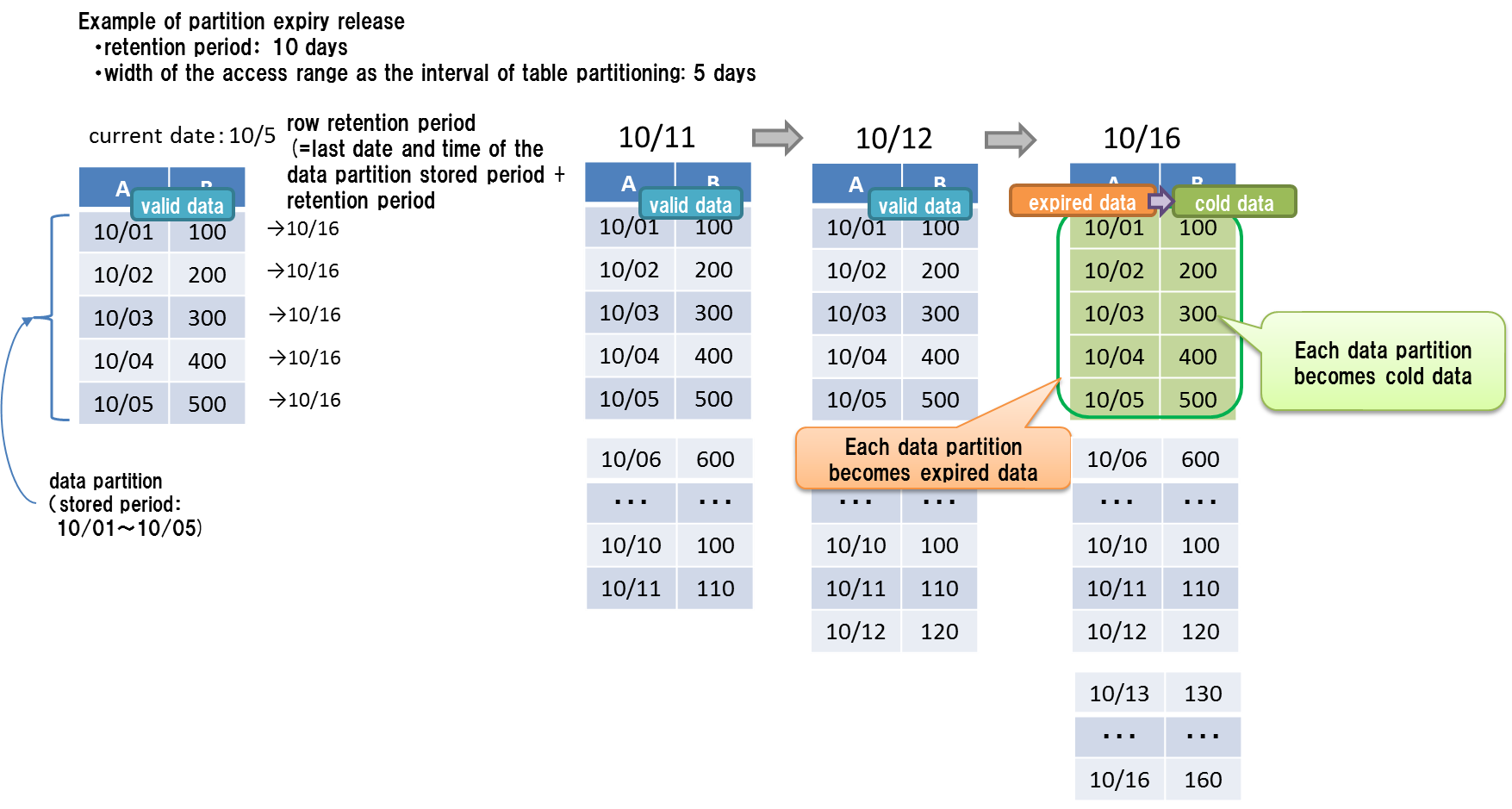

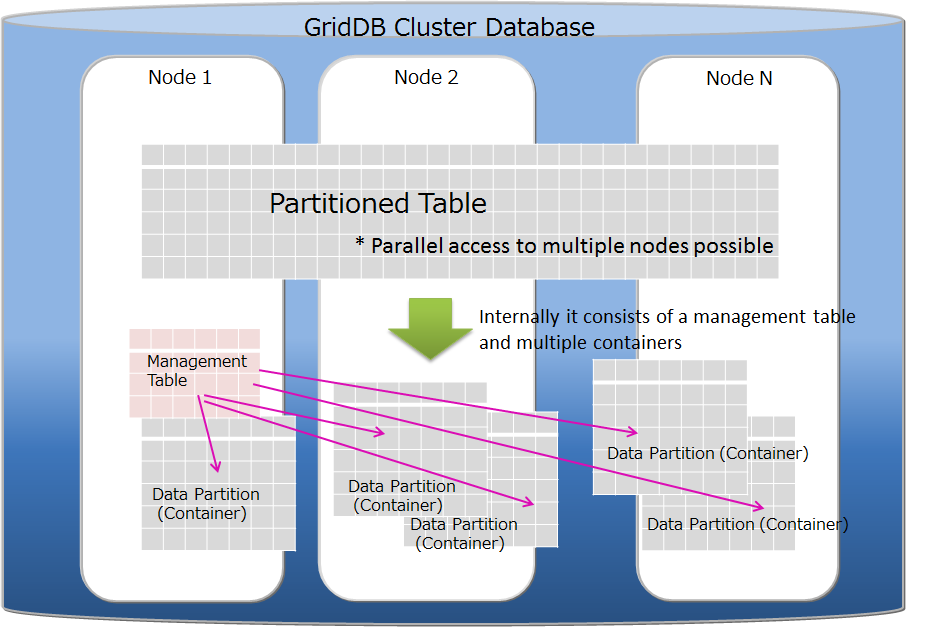

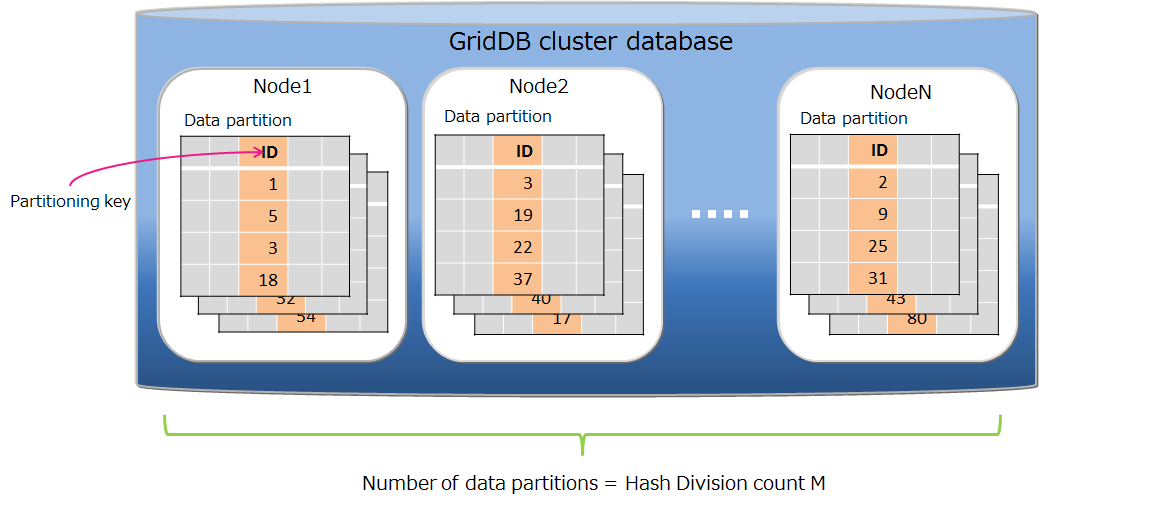

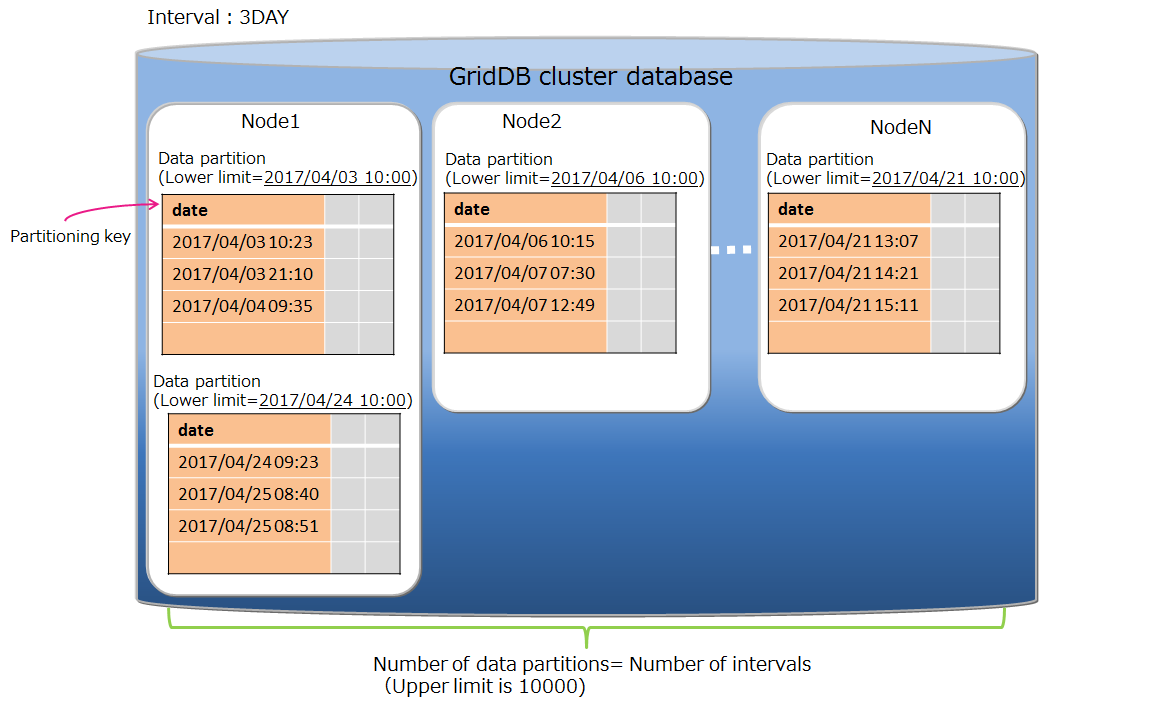

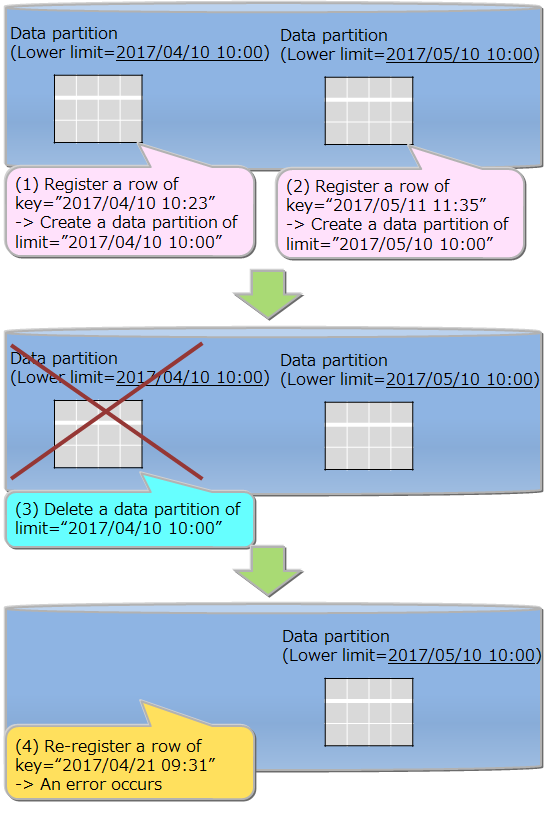

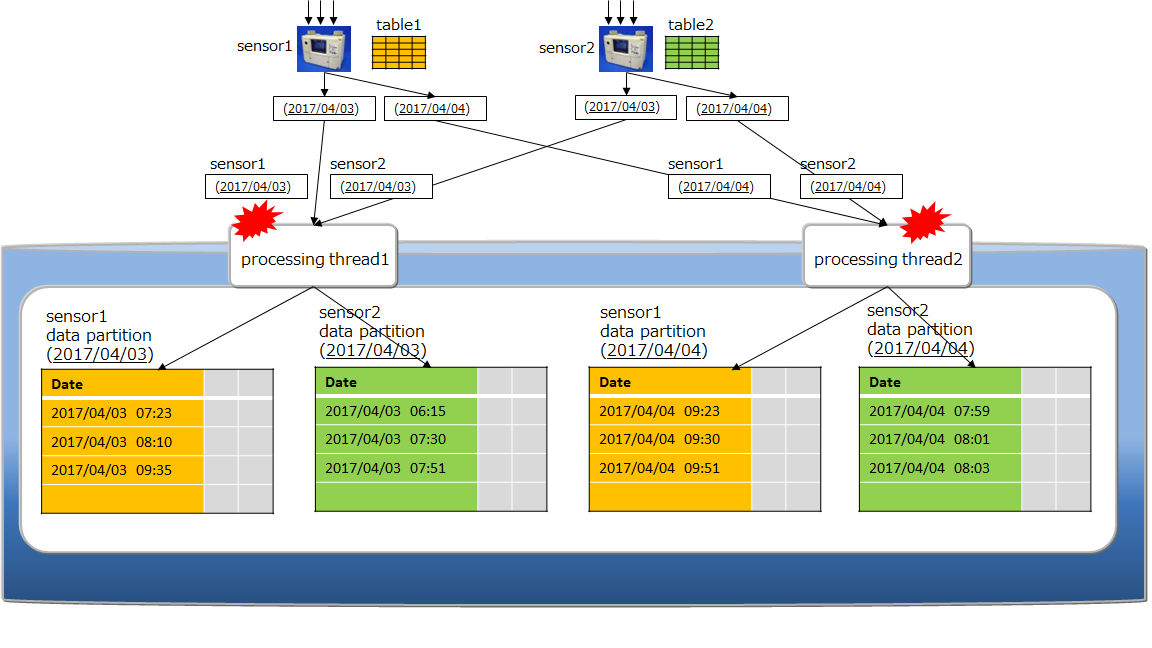

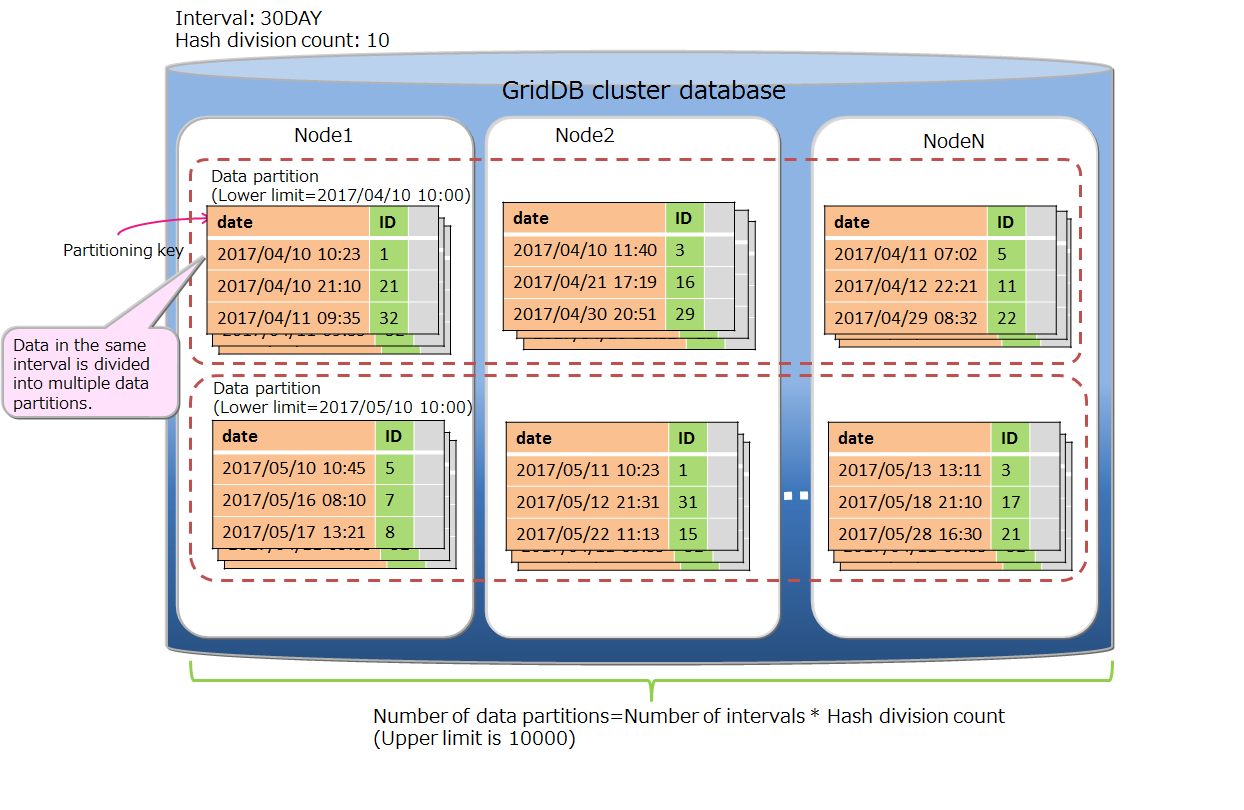

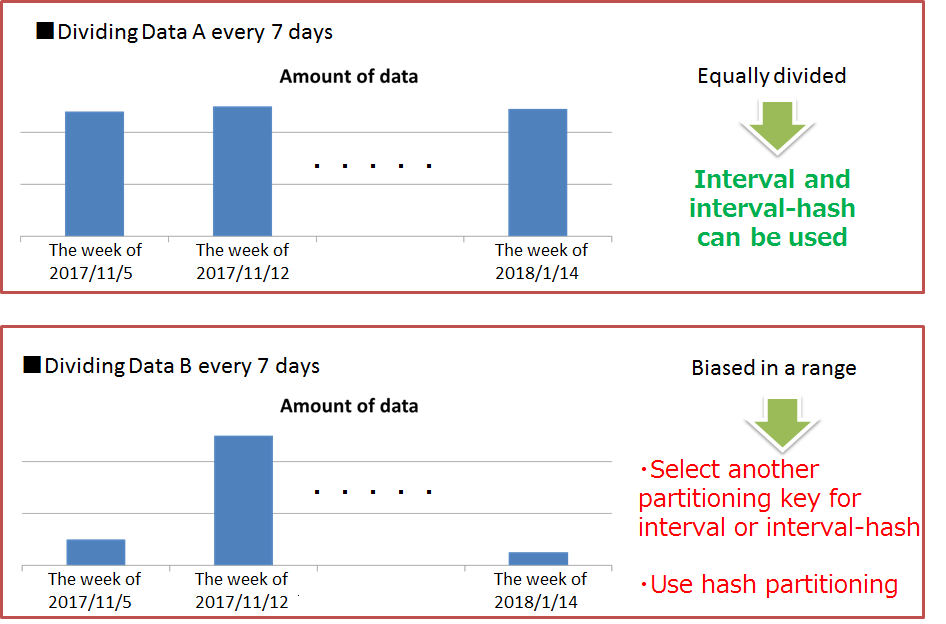

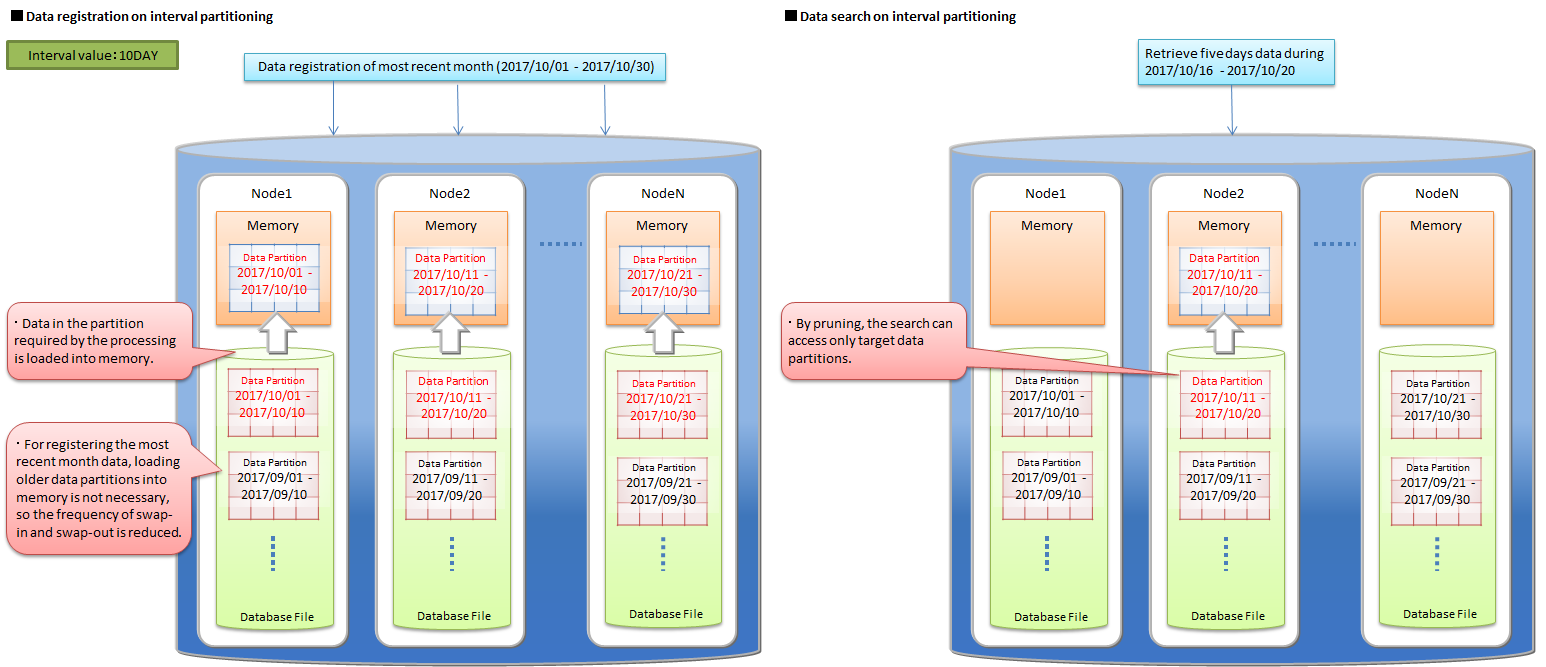

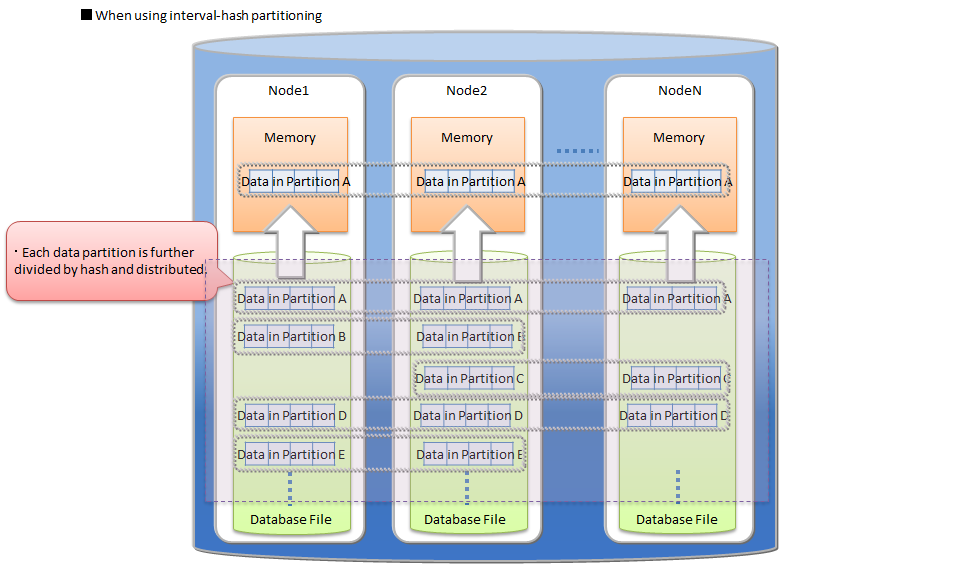

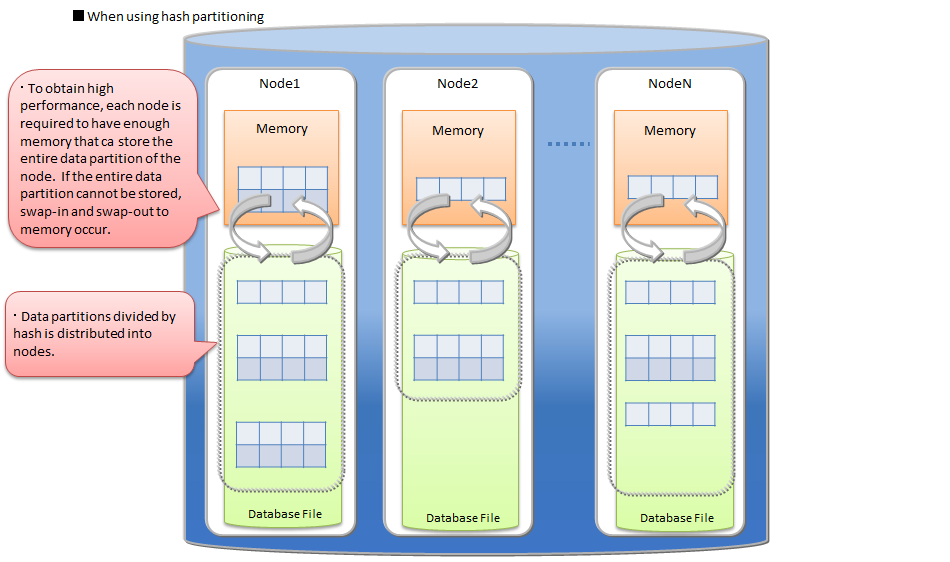

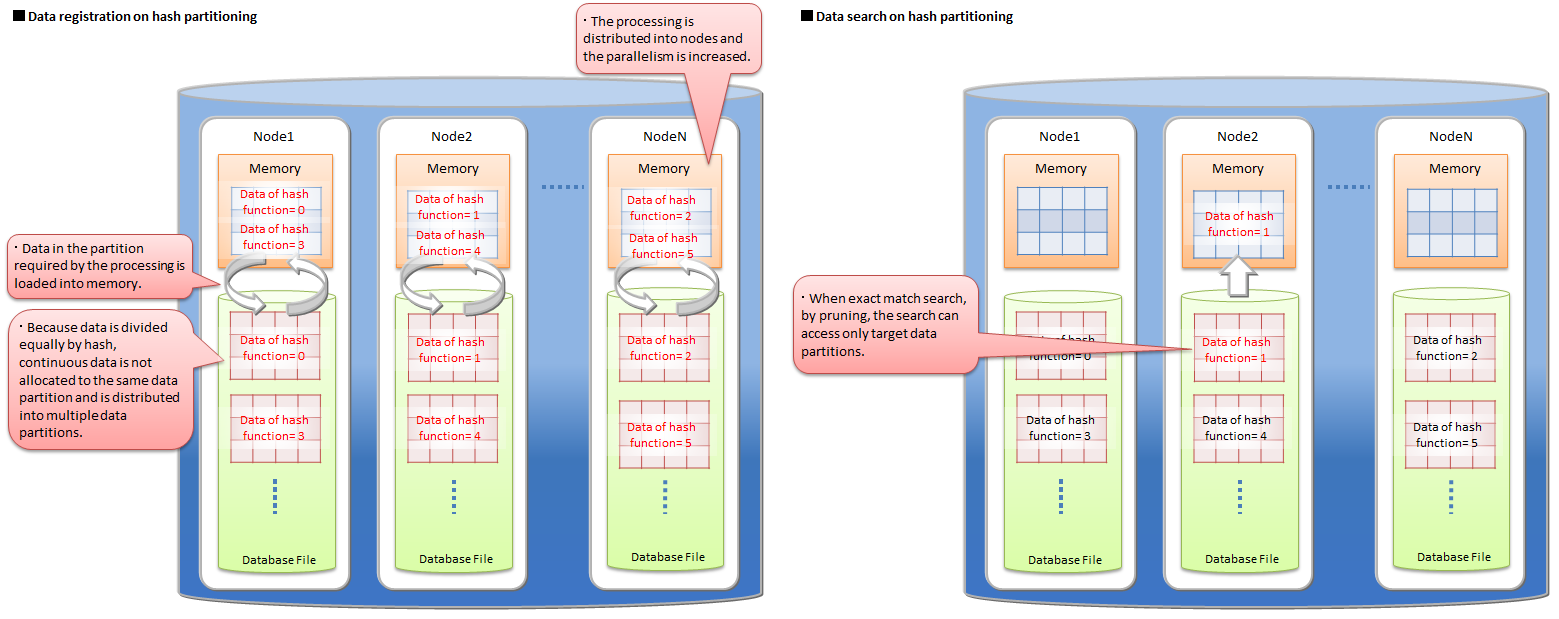

| Table partitioning | Function to access a huge table quickly by allowing concurrent execution by processors of multiple nodes, and the memory of multiple nodes to be used effectively by distributing the placement of a large amount of table data with multiple data registrations in multiple nodes. |

| Data partition | General name of data storage divided by table partitioning. Multiple data partitions are created for a table by table partitioning. Data partitions are distributed to the nodes like normal containers. The number of data partitions and the range of data stored in each data partition depend on the type of table partitioning (hash, interval, or interval-hash). |

| Data Affinity | A function to raise the memory hit rate by placing highly correlated data in a container in the same block and localizing data access. |

| Placement of container/table based on node affinity | A function to reduce the network load during data access by placing highly correlated containers in the same node. |

Structure of GridDB

Describes the cluster operating structure in GridDB.

Composition of a cluster

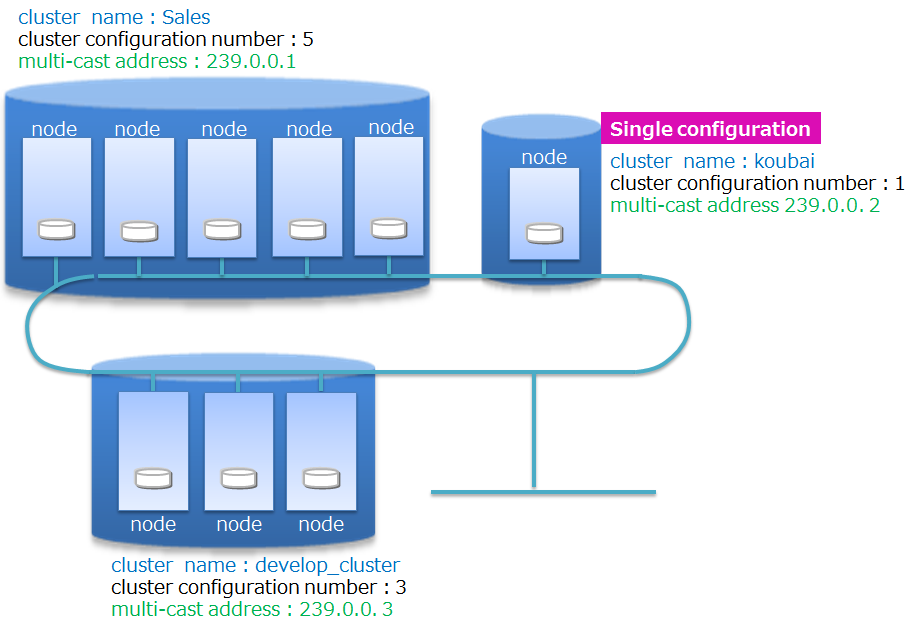

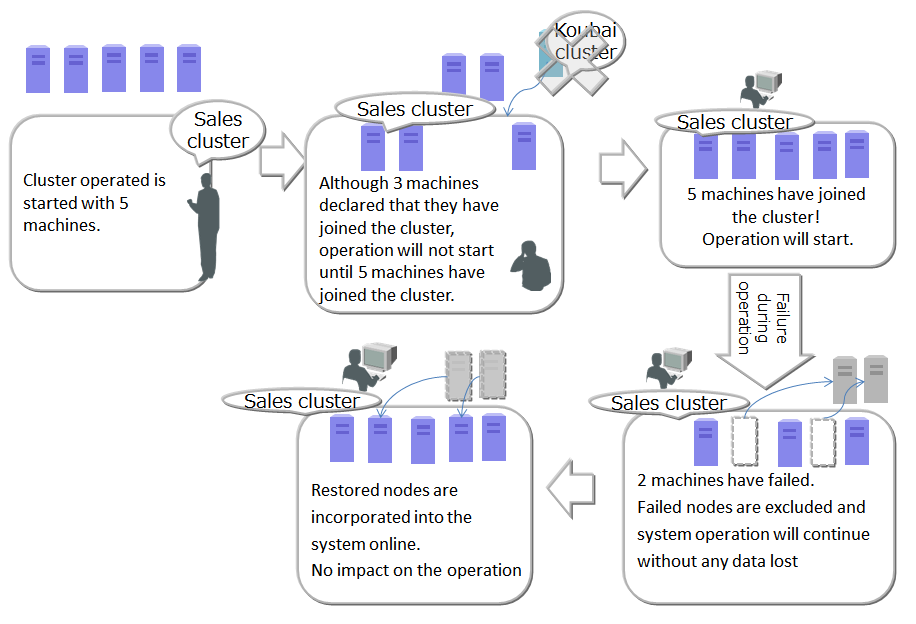

GridDB is operated by clusters which are composed of multiple nodes. Before accessing the database from an application system, nodes must be started and the cluster must be constituted, that is, cluster service is executed.

A cluster is formed and cluster service is started when a number of nodes specified by the user joins the cluster. Cluster service will not be started and access from the application will not be possible until all nodes constituting a cluster have joined the cluster.

A cluster needs to be constituted even when operating GridDB with a single node. In this case, the number of nodes constituting a cluster is 1. A composition that operates a single node is known as a single composition.

A cluster name is used to distinguish a cluster from other clusters so as to compose a cluster using the right nodes selected from multiple GridDB nodes on a network. Using cluster names, multiple GridDB clusters can be composed in the same network. A cluster is composed of nodes with the following features in common: cluster name, the number of nodes constituting a cluster, and the connection method setting. A cluster name needs to be set in the cluster definition file for each node constituting a cluster, and needs to be specified as a parameter when composing a cluster as well.

The method of constituting a cluster using multicast is called the multicast method. See Consideration of cluster configuration for details about cluster configuration methods.

The operation of a cluster composition is shown below.

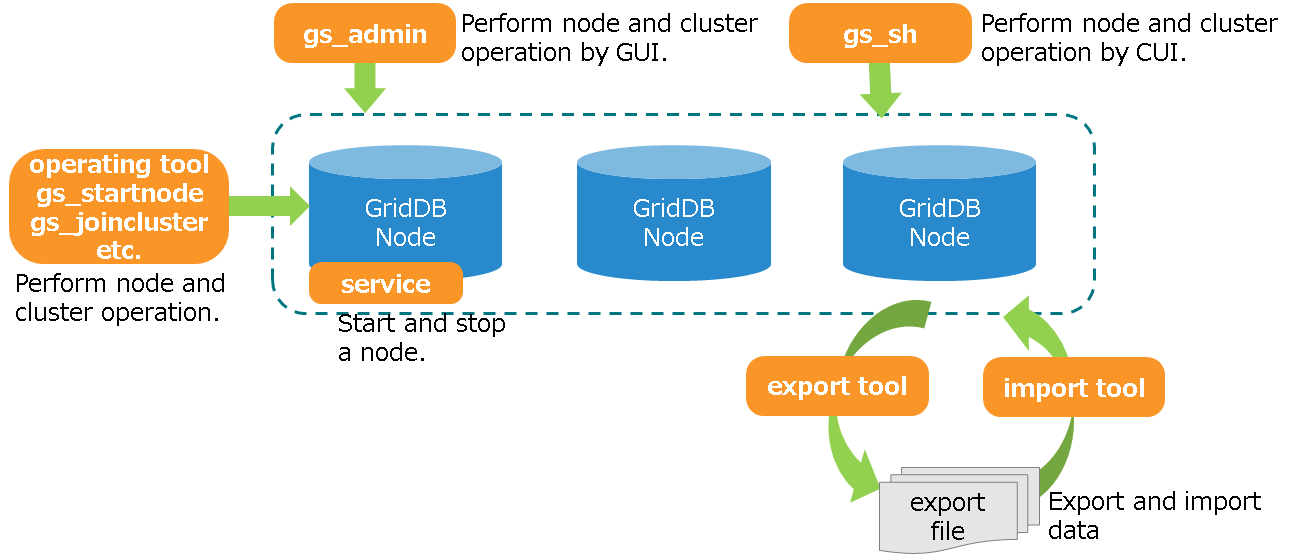

To start up a node and compose a cluster, use the gs_startnode and gs_joincluster operation commands, or gs_sh. In addition, there is a service control function to start up the nodes at the same time as the OS and to compose the cluster.

To compose a cluster, the number of nodes joining a cluster (number of nodes constituting a cluster) and the cluster name must be the same for all the nodes joining the cluster.

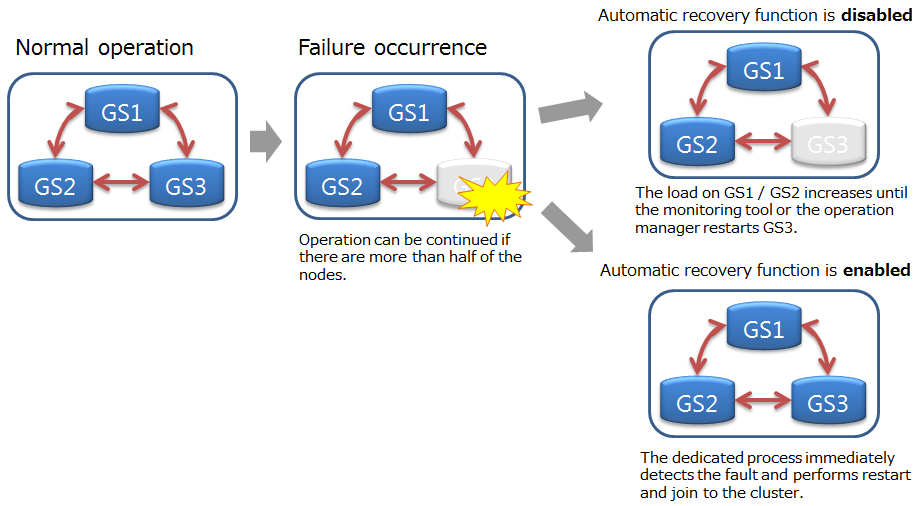

Even if a node fails and is separated from the cluster after operation in the cluster started, cluster service will continue so long as the majority of the number of nodes is joining the cluster.

Since cluster operation will continue as long as the majority of nodes is in operation, a node can be separated from the cluster online for maintenance while keeping the cluster in operation, and included in the cluster online after the maintenance completes. Nodes can also be added online to reinforce the system.

Status of node

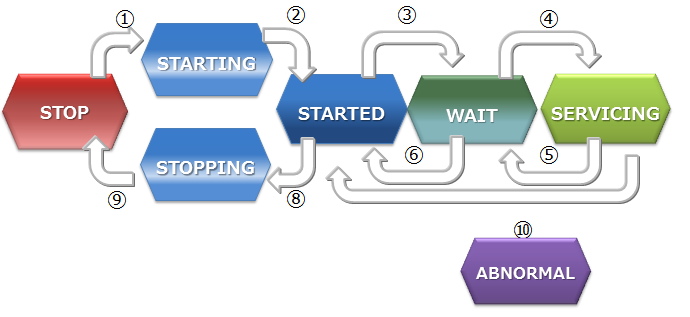

Each node has a status that represents its current state. The status changes by user command execution or internal processing of the node. The status of a cluster is determined by the status of the nodes in the cluster.

This section explains types of node status, status transition, and how to check the node status.

-

Types of node status

| Node status | Description |
|---|---|
| STOP | The GridDB server has not been started in the node. |
| STARTING | The GridDB server is starting in the node. Depending on the previous operating state, start-up processes such as recovery processing of the database are carried out. The only possible access from a client is checking the status of the system with a gs_stat command or gs_sh command. Access from the application is not possible. |
| STARTED | The GridDB server has been started in the node. However, access from the application is not possible as the node has not joined the cluster. To obtain the cluster composition, execute a cluster operating command, such as gs_joincluster or gs_sh to join the node to the cluster. |
| WAIT | The system is waiting for the cluster to be composed. Nodes have been informed to join a cluster but the number of nodes constituting a cluster is insufficient, so the system is waiting for the number of nodes constituting a cluster to be reached. WAIT status also indicates the node status when the number of nodes constituting a cluster drops below the majority and the cluster service is stopped. |
| SERVICING | A cluster has been constituted and access from the application is possible. However, access may be delayed if synchronization between the clusters of the partition occurs due to a re-start after a failure when the node is stopped or the like. |
| STOPPING | Intermediate state in which a node has been instructed to stop but has not stopped yet. |
| ABNORMAL | The state in which an error is detected by the node in SERVICING state or during state transition. A node in the ABNORMAL state will be automatically separated from the cluster. After collecting system operation information, it is necessary to forcibly stop and restart the node in the ABNORMAL state. By re-starting the system, recovery processing will be automatically carried out. |

-

Transition in the node status

Node status

| State transition | State transition event | Description |
|---|---|---|
| ① | Command execution | Starts a node by executing commands such as gs_startnode, gs_sh, or service start-up. |
| ② | System | Status changes automatically at the end of recovery processing or loading of database files. |
| ③ | Command execution | Joins a node to a cluster by executing commands such as gs_joincluster/gs_appendcluster, gs_sh, or service start-up. |
| ④ | System | Status changes automatically when the required number of component nodes join a cluster. |
| ⑤ | System | Status changes automatically when the rest of the nodes constituting the cluster are detached from the service due to a failure or for some other reason, and the number of nodes joining the cluster becomes less than half of the value set in the definition file. |
| ⑥ | Command execution | Detaches a node from a cluster by executing commands such as gs_leavecluster or gs_sh. |
| ⑦ | Command execution | Detaches a node from a cluster by executing commands such as gs_leavecluster/gs_stopcluster or gs_sh. |
| ⑧ | Command execution | Stops a node by executing commands such as gs_stopnode, gs_sh, or service stop. |
| ⑨ | System | Stops the server process once the final processing ends. |
| ⑩ | System | Detached state due to a system failure. In this state, the node needs to be stopped by force once. |

-

How to check the node status

The node status is determined by the combination of the operation status of a node and the role of a node.

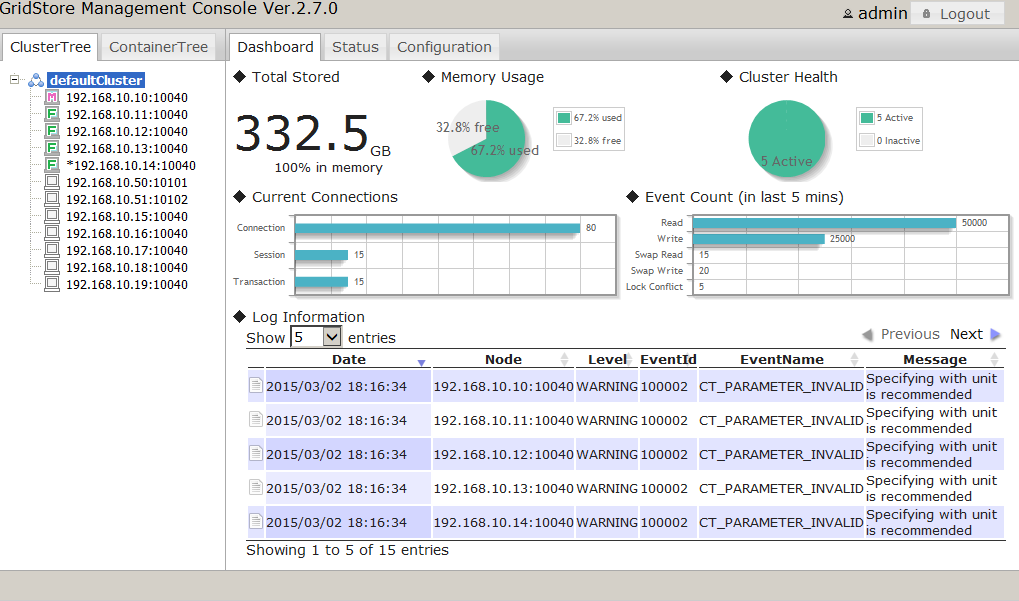

The status of the node can be checked with gs_sh or gs_admin.

The operation status and the role of a node can be checked from the result of the gs_stat command, which is in JSON format: for the operation status of a node, check the value of /cluster/nodeStatus; for the role of a node, check the value of /cluster/clusterStatus.

The table below shows the node status, determined by the combination of the operation status of a node and the role of a node.

| Node status | Operation status of a node (/cluster/nodeStatus) | Role of a node (/cluster/clusterStatus) |
|---|---|---|
| STOP | - (Connection error of gs_stat) | - (Connection error of gs_stat) |
| STARTING | INACTIVE | SUB_CLUSTER |
| STARTED | INACTIVE | SUB_CLUSTER |
| WAIT | ACTIVE | SUB_CLUSTER |
| SERVICING | ACTIVE | MASTER or FOLLOWER |
| STOPPING | NORMAL_SHUTDOWN | SUB_CLUSTER |
| ABNORMAL | ABNORMAL | SUB_CLUSTER |

-

Operation status of a node

The table below shows the operation status of a node. Each state is expressed as the value of /cluster/nodeStatus of the gs_stat command.

| Operation status of a node | Description |
|---|---|
| ACTIVE | Active state |
| ACTIVATING | In transition to an active state. |
| INACTIVE | Non-active state |
| DEACTIVATING | In transition to a non-active state. |
| NORMAL_SHUTDOWN | In shutdown process |
| ABNORMAL | Abnormal state |

-

Role of a node

The table below shows the role of a node. Each state is expressed as the value of /cluster/clusterStatus of the gs_stat command.

A node has two types of roles: “master” and “follower”. To start a cluster, one of the nodes which constitute the cluster needs to be a “master.” The master manages the whole cluster. All the nodes other than the master become “followers.” A follower performs cluster processes, such as a synchronization, following the directions from the master.

| Role of a node | Description |
|---|---|
| MASTER | Master |
| FOLLOWER | Follower |
| SUB_CLUSTER/SUB_MASTER | Role undefined |
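The mapping from operation status and role to node status can be expressed as a lookup on a parsed gs_stat result. This is a minimal sketch. The names `NODE_STATUS` and `node_status` are hypothetical, the input is assumed to be the gs_stat JSON already loaded into a dict, and `None` stands for a gs_stat connection error. STARTING and STARTED share the same combination, so both are returned as candidates.

```python
# (operation status, role) -> candidate node statuses, following the table
# of node status combinations given earlier in this section.
NODE_STATUS = {
    ("INACTIVE", "SUB_CLUSTER"): ("STARTING", "STARTED"),
    ("ACTIVE", "SUB_CLUSTER"): ("WAIT",),
    ("ACTIVE", "MASTER"): ("SERVICING",),
    ("ACTIVE", "FOLLOWER"): ("SERVICING",),
    ("NORMAL_SHUTDOWN", "SUB_CLUSTER"): ("STOPPING",),
    ("ABNORMAL", "SUB_CLUSTER"): ("ABNORMAL",),
}


def node_status(gs_stat_result):
    """Return the candidate node statuses for a parsed gs_stat result.

    Pass None when gs_stat could not connect, which the table maps to STOP.
    Combinations not listed in the table yield ("UNKNOWN",).
    """
    if gs_stat_result is None:
        return ("STOP",)
    cluster = gs_stat_result["cluster"]
    key = (cluster["nodeStatus"], cluster["clusterStatus"])
    return NODE_STATUS.get(key, ("UNKNOWN",))


print(node_status({"cluster": {"nodeStatus": "ACTIVE", "clusterStatus": "FOLLOWER"}}))
```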

-

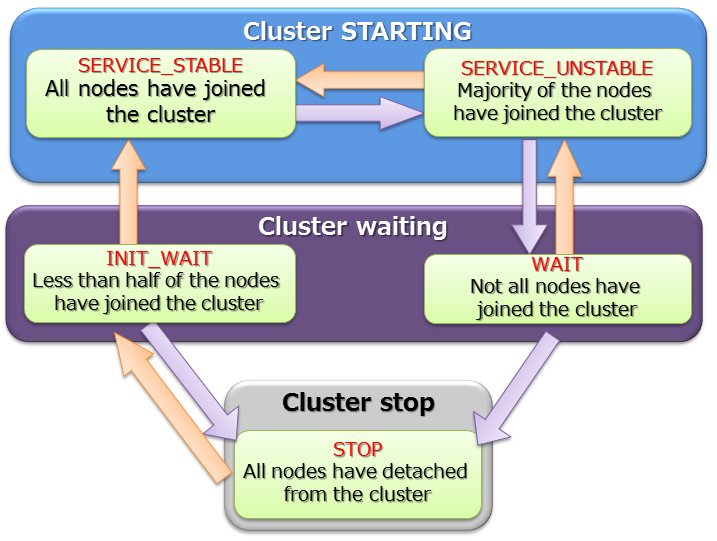

Status of cluster

The cluster operating status is determined by the state of each node, and the status may be one of 3 states - IN OPERATION/INTERRUPTED/STOPPED.

During the initial system construction, cluster service starts after all the nodes, the number of which was specified by the user as the number of nodes constituting a cluster, have joined the cluster.

During initial cluster construction, the state in which the cluster is waiting to be composed when all the nodes that make up the cluster have not been incorporated into the cluster is known as [INIT_WAIT]. When the number of nodes constituting a cluster has joined the cluster, the state will automatically change to the operating state.

Operation status includes two states, [STABLE] and [UNSTABLE].

- [STABLE] state

- State in which a cluster has been formed by the number of nodes specified in the number of nodes constituting a cluster and service can be provided in a stable manner.

- [UNSTABLE] state

- A cluster in this state has been joined by fewer nodes than “the number of nodes constituting the cluster”, but more than half of the constituting nodes are in operation.

- Cluster service will continue for as long as a majority of the number of nodes constituting a cluster is in operation.

A cluster can be operated in an [UNSTABLE] state as long as a majority of the nodes are in operation even if some nodes are detached from a cluster due to maintenance and for other reasons.

Cluster service is interrupted automatically in order to avoid a split brain when the number of nodes participating in the cluster is less than half the number of nodes constituting a cluster. The status of the cluster will become [WAIT].

-

What is split brain?

A split brain occurs when a tightly-coupled system, in which multiple interconnected nodes work like a single server, is divided by a hardware or network failure, and the resulting cluster systems perform the same processing and provide service simultaneously. If the operation is continued in this state, data saved as replicas in multiple clusters will be treated as master data, causing data inconsistency.
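Why a majority requirement prevents a split brain can be verified by enumerating every two-way split of a cluster. This is a minimal sketch of the reasoning, not GridDB code. The function names are hypothetical, and "majority" is assumed to mean strictly more than half of the designated number of nodes.

```python
def has_majority(group_size: int, designated_count: int) -> bool:
    """True when the group holds more than half of the designated nodes."""
    return 2 * group_size > designated_count


def groups_in_service(designated_count: int):
    """For every two-way split, report which sides could keep serving."""
    results = []
    for left in range(designated_count + 1):
        right = designated_count - left
        results.append((left, right,
                        has_majority(left, designated_count),
                        has_majority(right, designated_count)))
    return results


for left, right, left_ok, right_ok in groups_in_service(4):
    print(f"{left}+{right}: left serves={left_ok}, right serves={right_ok}")
```

No split ever leaves both sides with a majority, so at most one side continues the service. With four nodes divided 2+2, neither side has a majority and both stop.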

To resume the cluster service from a [WAIT] state, add the node, which recovered from the abnormal state, or add a new node, by using a node addition operation. After the cluster is joined by all the nodes, the number of which is the same as the one specified in “the number of nodes constituting a cluster”, the status will be [STABLE], and the service will be resumed.

Even when the cluster service has been interrupted because node failures reduced the number of participating nodes to less than half of the number of nodes constituting the cluster, the cluster service will be automatically restarted once a majority of the nodes joins the cluster by adding new nodes and/or nodes restored from the errors to the cluster.

A STABLE state is a state in which the value of the json parameter shown in gs_stat, /cluster/activeCount, is equal to the value of /cluster/designatedCount. (Output content varies depending on the version.)

$ gs_stat -u admin/admin

{

"checkpoint": {

:

:

},

"cluster": {

"activeCount":4, // Nodes in operation within the cluster

"clusterName": "test-cluster",

"clusterStatus": "MASTER",

"designatedCount": 4, // Number of nodes constituting a cluster

"loadBalancer": "ACTIVE",

"master": {

"address": "192.168.0.1",

"port": 10040

},

"nodeList": [ // Node list constituting a cluster

{

"address": "192.168.0.1",

"port": 10040

},

{

"address": "192.168.0.2",

"port": 10040

},

{

"address": "192.168.0.3",

"port": 10040

},

{

"address": "192.168.0.4",

"port": 10040

}

],

:

:

The status of the cluster can be checked with gs_sh or gs_admin. An example on checking the cluster status with gs_sh is shown below.

$ gs_sh

gs> setuser admin admin gsadm //Setting a connecting user

gs> setnode node1 192.168.0.1 10040 //Definition of a node constituting the cluster

gs> setnode node2 192.168.0.2 10040

gs> setnode node3 192.168.0.3 10040

gs> setnode node4 192.168.0.4 10040

gs> setcluster cluster1 test150 239.0.0.5 31999 $node1 $node2 $node3 $node4 //Cluster definition

gs> startnode $cluster1 //Start-up of all nodes making up the cluster

gs> startcluster $cluster1 //Instructing cluster composition

Waiting for cluster to start.

The GridDB cluster has been started.

gs> configcluster $cluster1 // Checking status of cluster

Name : cluster1

ClusterName : test150

Designated Node Count : 4

Active Node Count : 4

ClusterStatus : SERVICE_STABLE // Stable state

Nodes:

Name Role Host:Port Status

-------------------------------------------------

node1 M 192.168.0.1:10040 SERVICING

node2 F 192.168.0.2:10040 SERVICING

node3 F 192.168.0.3:10040 SERVICING

node4 F 192.168.0.4:10040 SERVICING

gs> leavecluster $node2

Waiting for a node to separate from cluster.

The GridDB node has leaved the GridDB cluster.

gs> configcluster $cluster1

Name : cluster1

ClusterName : test150

Designated Node Count : 4

Active Node Count : 3

ClusterStatus : SERVICE_UNSTABLE // Unstable state

Nodes:

Name Role Host:Port Status

-------------------------------------------------

node1 M 192.168.0.1:10040 SERVICING // Master node

node2 - 192.168.0.2:10040 STARTED

node3 F 192.168.0.3:10040 SERVICING // Follower node

node4 F 192.168.0.4:10040 SERVICING // Follower node

Status of partition

The partition status represents the status of all the partitions in a cluster. It shows whether the partitions in an operating cluster are accessible and whether they are balanced.

| Partition status | Description |

|---|---|

| NORMAL | All the partitions are in normal states where all of them are placed as planned. |

| NOT_BALANCE | There is no replica loss and no owner loss, but partition placement is unbalanced. |

| REPLICA_LOSS | Replica data is missing in some partitions (Availability of the partition is reduced, that is, the node cannot be detached from the cluster.) |

| OWNER_LOSS | Owner data is missing in some partitions. (The data of the partition are not accessible.) |

| INITIAL | The initial state, in which no partition has joined the cluster. |

Partition status can be checked by running the gs_stat command against the master node. (The state is expressed as the value of /cluster/partitionStatus.)

$ gs_stat -u admin/admin

{

:

:

"cluster": {

:

"nodeStatus": "ACTIVE",

"notificationMode": "MULTICAST",

"partitionStatus": "NORMAL",

:

[Notes]

- The value of /cluster/partitionStatus of the nodes other than a master node may not be correct. Be sure to check the value of a master node.
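
As a rough illustration of the checks described above, the following sketch reads a gs_stat result that has already been captured as a JSON string. The sample string is a hypothetical, trimmed version of the output shown earlier, and treating a clusterStatus of "MASTER" as the sign of a master node is an assumption based on that sample.

```python
import json

# Hypothetical, trimmed gs_stat output (real output has many more fields).
SAMPLE = """
{
  "cluster": {
    "activeCount": 4,
    "designatedCount": 4,
    "clusterStatus": "MASTER",
    "partitionStatus": "NORMAL"
  }
}
"""


def summarize(stat_json: str) -> dict:
    """Extract the stability and partition indicators from gs_stat JSON."""
    cluster = json.loads(stat_json)["cluster"]
    return {
        # STABLE means activeCount equals designatedCount.
        "stable": cluster["activeCount"] == cluster["designatedCount"],
        # partitionStatus should only be trusted on a master node.
        "from_master": cluster["clusterStatus"] == "MASTER",
        "partition_status": cluster["partitionStatus"],
    }


print(summarize(SAMPLE))
```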

Consideration of cluster configuration

Configuring a cluster requires the following considerations:

- First, determine the configuration method between nodes that make up a cluster and between the cluster and the client. Choose one of the following three: the multicast method, the fixed list method, and the provider method.

- Then, determine whether multiple communication paths are needed in the communication between the cluster and client and set them up as needed.

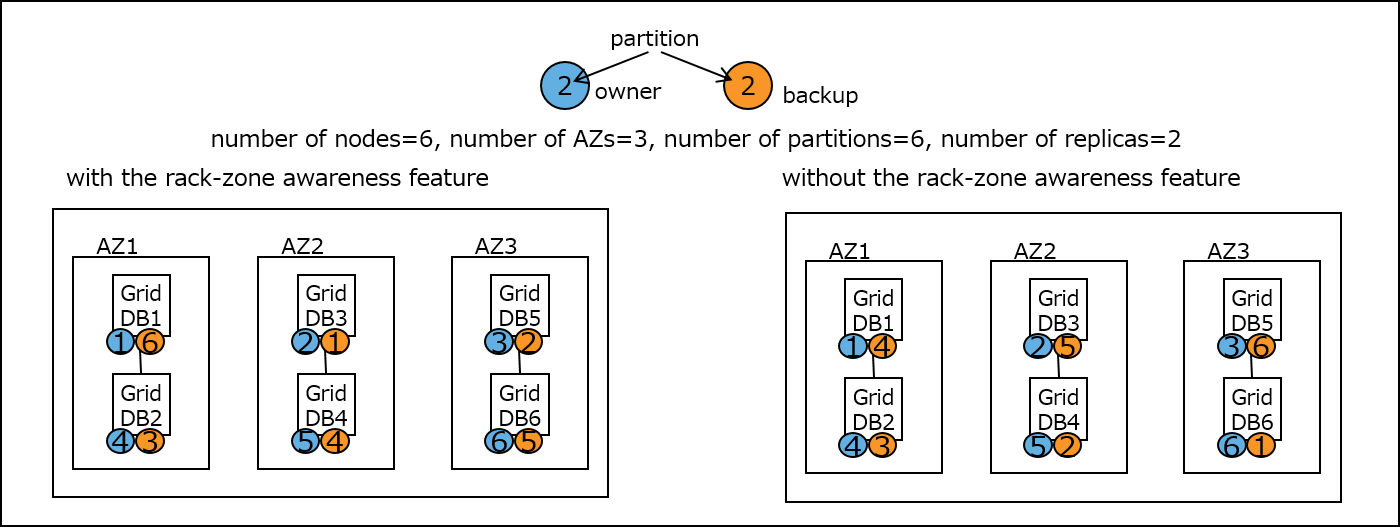

- Next, set the number of replicas depending on the levels of availability. If fault tolerance is required on a per-availability zone basis, enable the rack-zone awareness feature.

Cluster configuration methods

A cluster configuration method determines how address lists are recognized for communication between the nodes that make up a cluster and between the cluster and the client. Three methods are provided:

- Multicast method

- This is a method in which address lists are recognized through the discovery of nodes using multicast.

- Fixed list method

- This is a method in which address lists are recognized by launching a cluster, specifying the fixed address list that makes up the cluster in the cluster definition file.

- Provider method

- This is a method in which address lists are recognized according to the address lists provided by the address provider.

- The address provider can be configured as a Web service or as a static content.

The table below compares the three cluster configuration methods.

| Property | Multicast method | Fixed list method | Provider method |

|---|---|---|---|

| Parameters | - Multicast address and port | - List of IP addresses and port numbers of all the nodes | - Provider URL |

| Use case | - When multicast is available. | - When multicast is not available. - System size can be estimated accurately. | - When multicast is not available. - System size cannot be estimated. |

| Cluster operation | - Perform automatic discovery of nodes at a specified time interval. | - Set a common address list for all nodes. - Read that list only once at node startup. | - Obtain the address list at a specified time interval from the address provider. |

| Benefits | - No need to restart the cluster to add a node. | - Free from errors because lists are checked for consistency. Easy to grasp the current nodes configuring the cluster. | - No need to restart the cluster to add a node. |

| Drawbacks | - Multicast is often unavailable in the cloud environment. Communication across segments is not possible. | - A restart of the cluster is required to add a node. - An update of the connection setting on the application side is also required. | - There is a need to ensure the availability of the address provider. |
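
The "Use case" row of the comparison can be read as a small decision rule. The sketch below encodes only that row; the function and parameter names are assumptions, and real deployments would also weigh the benefits and drawbacks listed in the table.

```python
def choose_method(multicast_available: bool, size_can_be_estimated: bool) -> str:
    """Pick a cluster configuration method from the 'Use case' row only."""
    if multicast_available:
        return "multicast"
    # Without multicast, the choice depends on whether system size is known.
    return "fixed list" if size_can_be_estimated else "provider"


print(choose_method(True, True))
print(choose_method(False, True))
print(choose_method(False, False))
```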

Multiple communication paths

In a GridDB cluster, it is possible to set multiple communication paths to the client. The default cluster-client communication path is the same as the communication path between cluster nodes. By setting multiple communication paths, however, a connection can be established using a communication path other than the default, called an external communication path. The client can specify which communication path to use.

This type of network configuration that uses multiple communication paths is used in cases such as the following:

- To improve convenience by directly referencing or operating GridDB services that are provided on the cloud from the user’s on-premises environment outside the cloud, using an external communication path.

- In addition to using the services from the user’s on-premises environment outside the cloud, to enable fast application processing using the default communication path from the environment within the cloud.

Rack-zone awareness

GridDB provides the rack-zone awareness feature, which improves availability in the event of a rack failure, an availability zone failure, or any other failure that affects a whole physical configuration group. If the owner and backups of certain data are located within the same configuration group and a failure occurs in that group, the data becomes inaccessible. The rack-zone awareness feature solves this problem by making it possible to pre-define the group that each cluster node belongs to; GridDB then references this definition and allocates the owner and backups to nodes so that they are placed in different groups. With this feature, even when a failure occurs in a certain group, access to data is still possible because data backups are placed in other groups. Another advantage is that the owner and backups are allocated so that they are distributed across groups and nodes as evenly as possible.

The rack-zone awareness feature effectively improves availability when such configuration groups as racks and availability zones are available. While it is generally required to set up a large number of replicas in order to improve availability, there is a trade-off between the number of replicas and the performance of transactions because increasing the number of replicas decreases the performance. The rack-zone awareness feature computes the placement of owner and backup data as a top priority to guard against the event of a failure that occurs on a per-group basis. For this reason, if configuration groups are available, this feature can improve availability while minimizing the number of replicas (or more specifically, maintaining transaction performance). To use this feature, you need to set rack-zone options in the settings file; no other special operations are necessary.

To illustrate the point, consider how data placement in a cluster consisting of six nodes, three groups, six partitions, and two replicas differs depending on whether the rack-zone awareness feature is used. (The figure below shows an example where a cloud availability zone (AZ) is used.) The figure on the left shows typical data placement with the rack-zone awareness feature, and the figure on the right shows placement without this feature.

Without the rack-zone awareness feature, as shown on the right, the data owner and backups might be placed within the same AZ. For example, the owner of cluster partition 4 data (blue circle) is placed in GridDB2 within AZ1. At the same time, the data backup for cluster partition 4 (orange circle) is placed in GridDB1, also within AZ1. With this data placement, access to cluster partition 4 is no longer possible once a failure occurs in AZ1. By contrast, the rack-zone awareness feature guarantees that such data placement is not made, as shown on the left. For example, the owner of cluster partition 4 data (blue circle) is placed in AZ1, while the data backup for cluster partition 4 (orange circle) is placed in AZ2 instead of AZ1. The same can be said of other cluster partition data.

In short, by placing the owner and backups of all cluster partition data in separate AZs, the rack-zone awareness feature keeps all cluster partition data accessible no matter which AZ fails. Note, however, that because data placement within the same rack zone is constrained, the number of owners and backups assigned to each node may vary, albeit slightly. It should also be noted that while the time between when a failure occurs and when the system reaches a cluster-stable state (that is, when data synchronization starts) tends to be slightly longer, GridDB optimizes the allocation of owner and backup data to the nodes, so that there is no great difference between the case with rack-zone awareness and the case without, in terms of both data imbalance and the amount of time required to reach the stable state.
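
The placement constraint can be illustrated with a toy allocator for the six-node, three-AZ, six-partition example. This is not GridDB's actual algorithm: the round-robin rule, the node names beyond GridDB1 and GridDB2, their AZ assignments, and the reading of "two replicas" as one owner plus one backup are all assumptions made for the sketch. It enforces only the rule that owner and backup sit in different groups and makes no attempt at the even distribution that GridDB aims for.

```python
def place(nodes: dict, partitions: int) -> dict:
    """Return {partition: (owner, backup)} with owner and backup in different groups."""
    if len(set(nodes.values())) < 2:
        raise ValueError("at least two groups are needed")
    names = sorted(nodes)
    placement = {}
    for p in range(partitions):
        owner = names[p % len(names)]
        # Walk round-robin from the owner and take the first node in another group.
        for step in range(1, len(names)):
            candidate = names[(p + step) % len(names)]
            if nodes[candidate] != nodes[owner]:
                placement[p] = (owner, candidate)
                break
    return placement


# Hypothetical node-to-AZ mapping for the six-node example.
NODES = {
    "GridDB1": "AZ1", "GridDB2": "AZ1",
    "GridDB3": "AZ2", "GridDB4": "AZ2",
    "GridDB5": "AZ3", "GridDB6": "AZ3",
}

for partition, (owner, backup) in place(NODES, 6).items():
    print(partition, owner, NODES[owner], backup, NODES[backup])
```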

[Note] The rack-zone awareness feature determines the rules for assigning cluster partitions. It cannot be used together with other features that have similar functionality, namely, the specification of generation rules (described in 6.12.1) and the stabilization of a placement table (described in 6.12.2). Choose the one that suits your purpose.

Settings methods

Cluster configuration methods

Three cluster configuration methods, namely, the multicast method, the fixed list method, and the provider method, are available. These methods need to be set individually between nodes that make up a cluster and between the cluster and the client. The following describes only the settings between nodes. For the settings between the cluster and the client, see the API references for clients.

Multicast method

In this method, a cluster is configured using multicast by granting a multicast address to start a node. Multicast is defined for three services: cluster, transaction, and SQL services. If multicast is selected for cluster services, multicast should also be selected for the rest of the services, i.e., transaction and SQL services.

To configure a cluster using the multicast method, set the following parameters in the cluster definition file:

Cluster definition file

| Parameter | JSON data type | Description |

|---|---|---|

| /cluster/notificationAddress | string | Specify the multicast IP address needed to configure a cluster. |

| /cluster/notificationPort | int | Specify the multicast port number needed to configure a cluster. |

| /transaction/notificationAddress | string | Specify the multicast IP address needed to process transactions with the client. |

| /transaction/notificationPort | int | Specify the multicast port number needed to process transactions with the client. |

| /sql/notificationAddress | string | Specify the multicast IP address needed to process SQL together with the client. |

| /sql/notificationPort | int | Specify the multicast port number needed to process SQL together with the client. |

If the multicast method is unavailable, use the fixed list method or the provider method.

{

:

:

"cluster":{

"clusterName":"yourClusterName",

"replicationNum":2,

"heartbeatInterval":"5s",

"loadbalanceCheckInterval":"180s",

"notificationAddress":"239.0.0.1",

"notificationPort":20000

},

"transaction":{

"notificationAddress":"239.0.0.1",

"notificationPort":31999

},

"sql":{

"notificationAddress":"239.0.0.1",

"notificationPort":41999

}

:

:

}
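
A definition like the one above can be sanity-checked before it is deployed. The following sketch assumes the relevant part of the cluster definition file has already been loaded into a dictionary; the function name and the specific checks (presence in all three sections, a multicast-range address, a valid port) are assumptions for illustration, not checks that GridDB is documented to perform.

```python
import ipaddress

# The multicast-related values from the example above.
SAMPLE = {
    "cluster": {"notificationAddress": "239.0.0.1", "notificationPort": 20000},
    "transaction": {"notificationAddress": "239.0.0.1", "notificationPort": 31999},
    "sql": {"notificationAddress": "239.0.0.1", "notificationPort": 41999},
}


def check_multicast(cluster_def: dict) -> list:
    """Return a list of problems found in the multicast settings."""
    problems = []
    for section in ("cluster", "transaction", "sql"):
        conf = cluster_def.get(section, {})
        addr = conf.get("notificationAddress")
        port = conf.get("notificationPort")
        if addr is None or port is None:
            problems.append(f"/{section}: multicast address and port are required")
            continue
        if not ipaddress.ip_address(addr).is_multicast:
            problems.append(f"/{section}/notificationAddress {addr} is not multicast")
        if not isinstance(port, int) or not 0 < port < 65536:
            problems.append(f"/{section}/notificationPort {port} is not a valid port")
    return problems


print(check_multicast(SAMPLE))
```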

Fixed list method

When a fixed address list is given to start a node, the list is used to compose the cluster.

When composing a cluster using the fixed list method, configure the parameters in the cluster definition file.

cluster definition file

| Parameter | JSON data type | Description |

|---|---|---|

| /cluster/notificationMember | string | Specify the address list when using the fixed list method as the cluster configuration method. |

For each element of /cluster/notificationMember above, specify the following for each service: "service name": {"address": "IP address", "port": port number}

To use multiple communication paths, add the transactionPublic and sqlPublic parameters to each element and specify the IP address and port number for external connection for each of the two. These IP addresses must be the same as the publicServiceAddress values for transaction/sql specified in the node definition file at node startup. Likewise, the port numbers must be the same as the servicePort values for transaction/sql.

| Parameter | JSON data type | Description |

|---|---|---|

| /cluster/address | string | Specify the IP address for cluster service communication. |

| /cluster/port | int | Specify the port number for the IP address above. |

| /sync/address | string | Specify the IP address for sync service communication. |

| /sync/port | int | Specify the port number for the IP address above. |

| /system/address | string | Specify the IP address for system service communication. |

| /system/port | int | Specify the port number for the IP address above. |

| /transaction/address | string | Specify the IP address for transaction service communication which will be the default communication path. |

| /transaction/port | int | Specify the port number for the IP address above. |

| /sql/address | string | Specify the IP address for SQL service communication which will be the default communication path. |

| /sql/port | int | Specify the port number for the IP address above. |

| /transactionPublic/address | string | Specify the IP address for transaction service communication which will be an external communication path. |

| /transactionPublic/port | int | Specify the port number for the IP address above. |

| /sqlPublic/address | string | Specify the IP address for SQL service communication which will be an external communication path. |

| /sqlPublic/port | int | Specify the port number for the IP address above. |

The following shows a configuration example of the cluster definition file for using the default communication path:

{

:

:

"cluster":{

"clusterName":"yourClusterName",

"replicationNum":2,

"heartbeatInterval":"5s",

"loadbalanceCheckInterval":"180s",

"notificationMember": [

{

"cluster": {"address":"172.17.0.44", "port":10010},

"sync": {"address":"172.17.0.44", "port":10020},

"system": {"address":"172.17.0.44", "port":10040},

"transaction": {"address":"172.17.0.44", "port":10001},

"sql": {"address":"172.17.0.44", "port":20001}

},

{

"cluster": {"address":"172.17.0.45", "port":10010},

"sync": {"address":"172.17.0.45", "port":10020},

"system": {"address":"172.17.0.45", "port":10040},

"transaction": {"address":"172.17.0.45", "port":10001},

"sql": {"address":"172.17.0.45", "port":20001}

},

{

"cluster": {"address":"172.17.0.46", "port":10010},

"sync": {"address":"172.17.0.46", "port":10020},

"system": {"address":"172.17.0.46", "port":10040},

"transaction": {"address":"172.17.0.46", "port":10001},

"sql": {"address":"172.17.0.46", "port":20001}

}

]

},

:

:

}
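
Because every node repeats the same five services, the notificationMember list can be generated rather than typed by hand. The sketch below is one way to do that; the helper names are hypothetical and the port numbers are simply those used in the example above.

```python
import json

# Port per service, taken from the example above.
PORTS = {
    "cluster": 10010,
    "sync": 10020,
    "system": 10040,
    "transaction": 10001,
    "sql": 20001,
}


def member_entry(ip: str) -> dict:
    """Build one notificationMember element for a node at the given IP."""
    return {service: {"address": ip, "port": port} for service, port in PORTS.items()}


def notification_member(ips: list) -> list:
    """Build the full notificationMember list for all nodes."""
    return [member_entry(ip) for ip in ips]


members = notification_member(["172.17.0.44", "172.17.0.45", "172.17.0.46"])
print(json.dumps(members, indent=2))
```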

Provider method[Enterprise Edition]

In this method, the cluster is configured using the address list obtained from the address provider.

When composing a cluster using the provider method, configure the parameters in the cluster definition file.

cluster definition file

| Parameter | JSON data type | Description |

|---|---|---|

| /cluster/notificationProvider/url | string | Specify the URL of the address provider when using the provider method as the cluster configuration method. |

| /cluster/notificationProvider/updateInterval | string | Specify the interval at which to get the list from the address provider. Specify a value that is more than 1 second and less than 2^31 seconds. |

The format of the node list returned by the provider follows the same rule as that for the fixed list.

A configuration example of a cluster definition file is shown below.

{

:

:

"cluster":{

"clusterName":"yourClusterName",

"replicationNum":2,

"heartbeatInterval":"5s",

"loadbalanceCheckInterval":"180s",

"notificationProvider":{

"url":"http://example.com/notification/provider",

"updateInterval":"30s"

}

},

:

:

}

The address provider can be configured as a Web service or as static content. The address provider must meet the following specifications.

- Compatible with the GET method.

- When the URL is accessed, the node address list of the cluster whose cluster definition file contains that URL is returned as the response.

- Response body: Same JSON as the contents of the node list specified in the fixed list method

- Response header: Including Content-Type:application/json

Below is an example of a response sent from the address provider. Note that when multiple communication paths are specified, transactionPublic and sqlPublic should also be specified.

$ curl http://example.com/notification/provider

[

{

"cluster": {"address":"172.17.0.44", "port":10010},

"sync": {"address":"172.17.0.44", "port":10020},

"system": {"address":"172.17.0.44", "port":10040},

"transaction": {"address":"172.17.0.44", "port":10001},

"sql": {"address":"172.17.0.44", "port":20001}

},

{

"cluster": {"address":"172.17.0.45", "port":10010},

"sync": {"address":"172.17.0.45", "port":10020},

"system": {"address":"172.17.0.45", "port":10040},

"transaction": {"address":"172.17.0.45", "port":10001},

"sql": {"address":"172.17.0.45", "port":20001}

},

{

"cluster": {"address":"172.17.0.46", "port":10010},

"sync": {"address":"172.17.0.46", "port":10020},

"system": {"address":"172.17.0.46", "port":10040},

"transaction": {"address":"172.17.0.46", "port":10001},

"sql": {"address":"172.17.0.46", "port":20001}

}

]
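
For experimentation, an address provider that meets the specifications above can be sketched with Python's standard library. This is a minimal local stand-in, not a production service: the handler name, the single-node list, and the use of a loopback address with an automatically chosen port are all assumptions.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical node list in the same format as the fixed list method.
NODE_LIST = [
    {
        "cluster": {"address": "172.17.0.44", "port": 10010},
        "sync": {"address": "172.17.0.44", "port": 10020},
        "system": {"address": "172.17.0.44", "port": 10040},
        "transaction": {"address": "172.17.0.44", "port": 10001},
        "sql": {"address": "172.17.0.44", "port": 20001},
    }
]


class ProviderHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Respond to GET with the node list as application/json.
        body = json.dumps(NODE_LIST).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the example quiet


def fetch_once():
    """Start the provider on a free local port, GET it once, then stop it."""
    server = HTTPServer(("127.0.0.1", 0), ProviderHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        url = f"http://127.0.0.1:{server.server_port}/notification/provider"
        # Bypass any configured HTTP proxy for this local request.
        opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
        with opener.open(url, timeout=5) as resp:
            return resp.headers["Content-Type"], json.loads(resp.read())
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    print(fetch_once())
```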

[Note]

- Set one of the following in the cluster definition file to match the cluster configuration method used: /cluster/notificationAddress, /cluster/notificationMember, or /cluster/notificationProvider.
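
The note above amounts to requiring exactly one of three keys in the cluster section. A minimal check, assuming the cluster section has been loaded into a dictionary (the function name is hypothetical):

```python
METHOD_KEYS = {
    "notificationAddress": "multicast",
    "notificationMember": "fixed list",
    "notificationProvider": "provider",
}


def configured_method(cluster_section: dict) -> str:
    """Return the configuration method implied by the cluster section."""
    present = [key for key in METHOD_KEYS if key in cluster_section]
    if len(present) != 1:
        raise ValueError(f"expected exactly one of {sorted(METHOD_KEYS)}, found {present}")
    return METHOD_KEYS[present[0]]


print(configured_method({"clusterName": "yourClusterName", "notificationMember": []}))
```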

Multiple communication paths

To enable multiple communication paths in the GridDB cluster, specify the IP address for an external communication path in the node definition file in each of the nodes configuring a cluster and configure the cluster. There is no need to specify the port number again because the port number will be the same as the port number specified for servicePort.

Node definition file

| Parameter | JSON data type | Description |

|---|---|---|

| /transaction/publicServiceAddress | string | Specify the IP address corresponding to the external communication path for transaction services. |

| /sql/publicServiceAddress | string | Specify the IP address corresponding to the external communication path for SQL services. |

The following is an example for node definition file settings.

{

:

:

"transaction":{

"serviceAddress":"172.17.0.44",

"publicServiceAddress":"10.45.1.10",

"servicePort":10001

},

"sql":{

"serviceAddress":"172.17.0.44",

"publicServiceAddress":"10.45.1.10",

"servicePort":20001

},

:

:

Below is a sample node list for enabling multiple communication paths.

{

:

:

"cluster":{

"clusterName":"yourClusterName",

"replicationNum":2,

"heartbeatInterval":"5s",

"loadbalanceCheckInterval":"180s",

"notificationMember": [

{

"cluster": {"address":"172.17.0.44", "port":10010},

"sync": {"address":"172.17.0.44", "port":10020},

"system": {"address":"172.17.0.44", "port":10040},

"transaction": {"address":"172.17.0.44", "port":10001},

"sql": {"address":"172.17.0.44", "port":20001},

"transactionPublic": {"address":"10.45.1.10", "port":10001},

"sqlPublic": {"address":"10.45.1.10", "port":20001}

},

{

"cluster": {"address":"172.17.0.45", "port":10010},

"sync": {"address":"172.17.0.45", "port":10020},

"system": {"address":"172.17.0.45", "port":10040},

"transaction": {"address":"172.17.0.45", "port":10001},

"sql": {"address":"172.17.0.45", "port":20001},

"transactionPublic": {"address":"10.45.1.11", "port":10001},

"sqlPublic": {"address":"10.45.1.11", "port":20001}

},

{

"cluster": {"address":"172.17.0.46", "port":10010},

"sync": {"address":"172.17.0.46", "port":10020},

"system": {"address":"172.17.0.46", "port":10040},

"transaction": {"address":"172.17.0.46", "port":10001},

"sql": {"address":"172.17.0.46", "port":20001},

"transactionPublic": {"address":"10.45.1.12", "port":10001},

"sqlPublic": {"address":"10.45.1.12", "port":20001}

}

]

},

:

:

}
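
The requirement that the external addresses and ports in the node list match the node definition file can be checked mechanically. The sketch below compares one node's definition with its notificationMember element, using the values from the examples above; the function name and the dictionary-based input are assumptions.

```python
# Values for the first node, taken from the examples above.
NODE_DEF = {
    "transaction": {"serviceAddress": "172.17.0.44",
                    "publicServiceAddress": "10.45.1.10", "servicePort": 10001},
    "sql": {"serviceAddress": "172.17.0.44",
            "publicServiceAddress": "10.45.1.10", "servicePort": 20001},
}
MEMBER = {
    "transactionPublic": {"address": "10.45.1.10", "port": 10001},
    "sqlPublic": {"address": "10.45.1.10", "port": 20001},
}


def public_path_problems(node_def: dict, member: dict) -> list:
    """List mismatches between a node definition and its member entry."""
    problems = []
    for service in ("transaction", "sql"):
        public = member.get(f"{service}Public")
        if public is None:
            problems.append(f"{service}Public is missing")
            continue
        if public["address"] != node_def[service].get("publicServiceAddress"):
            problems.append(f"{service}Public address differs from publicServiceAddress")
        if public["port"] != node_def[service].get("servicePort"):
            problems.append(f"{service}Public port differs from servicePort")
    return problems


print(public_path_problems(NODE_DEF, MEMBER))
```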

Rack-zone awareness

Cluster definition file

| Parameter | Data type | Definition |

|---|---|---|

| /cluster/rackZoneAwareness | bool | Specifies whether to adopt a data placement strategy using the rack-zone awareness feature. To use the feature, set this to “true” and specify rackZoneId. |

| /cluster/rackZoneId | string | An identifier for the rack-zone awareness feature that is assigned per group. It should be 1 to 64 alphanumeric characters. |

The following is an example for node definition file settings.

{

:

:

"cluster":{

"servicePort":10010,

"rackZoneAwareness":true,

"rackZoneId":"zone-01"

},

:

:

}

Data model

GridDB uses a unique Key-Container data model that resembles the Key-Value model. It has the following features.

- It introduces the container, a concept resembling an RDB table, for grouping Key-Value data.

- A schema to define the data type for the container can be set. An index can be set in a column.

- Transactions can be carried out on a row basis within the container. In addition, ACID is guaranteed on a container basis.

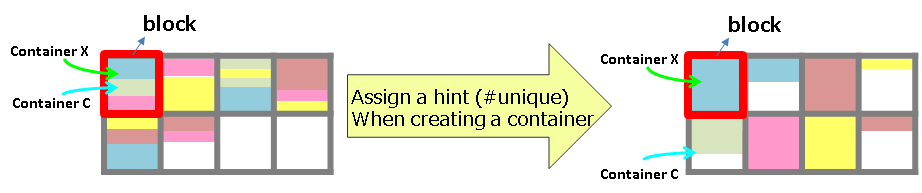

GridDB manages data in blocks, containers, tables, rows, and partitions.

-

Block

A block is a data unit for data persistence processing to a disk (hereinafter referred to as a checkpoint) and is the smallest physical data management unit in GridDB. Data from multiple containers is arranged in a block. The block size is set in a definition file (cluster definition file) before the initial startup of GridDB.

As a database file is created during initial startup of the system, the block size cannot be changed after initial startup of GridDB.

-

Container (Table)

A container is a data structure that serves as an interface (I/F) with the user and manages a set of rows. A container consists of multiple blocks. It is called a container when operated through the NoSQL I/F, and a table when operated through the NewSQL I/F. There are 2 types: collection (table) and timeseries container (timeseries table).

Make sure to create a container (table) before registering data from an application.

-

Row

A row refers to a row of data to be registered in a container or table. Multiple rows can be registered in a container or table but this does not mean that data is arranged in the same block. Depending on the registration and update timing, data is arranged in suitable blocks within partitions.

A row includes columns of more than one data type.

-

Partition

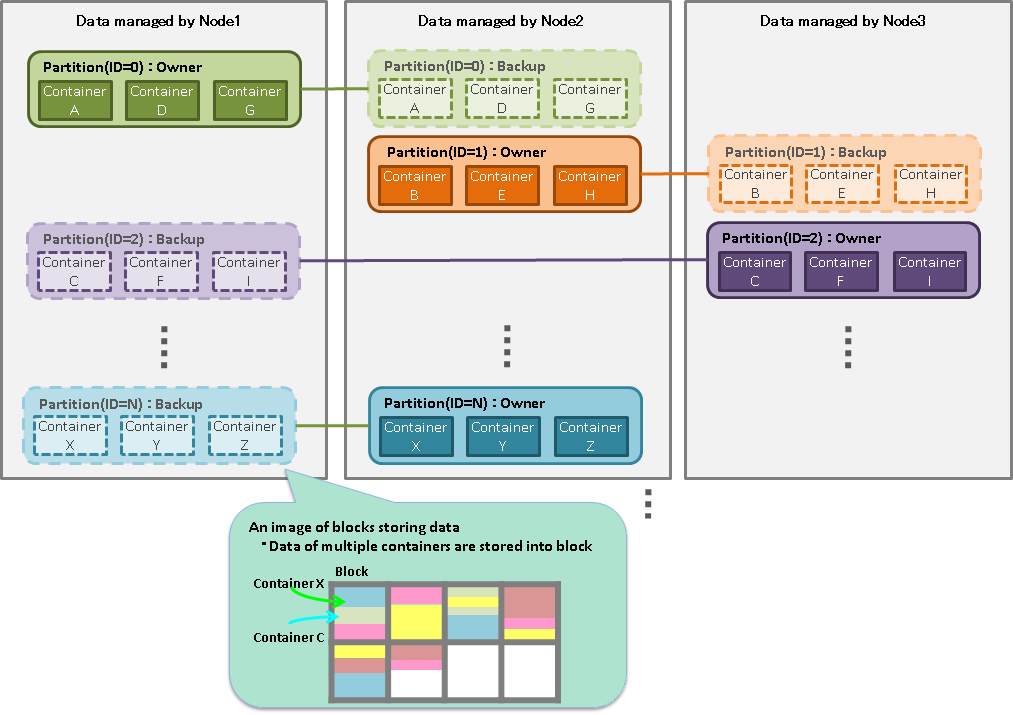

A partition is a data management unit that includes 1 or more containers or tables.

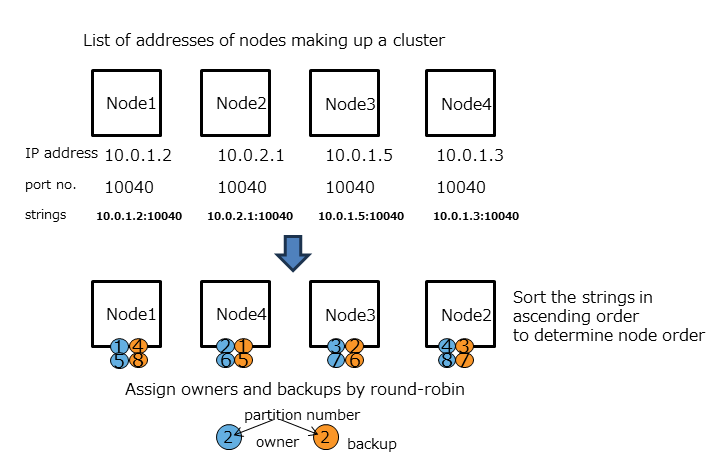

A partition is also the unit of data placement within a cluster. It is used to manage data movement for balancing the load between nodes and to manage data multiplexing (replicas) in case of a failure. Data replicas are placed on the nodes that make up a cluster on a per-partition basis.

A node that can update a container in a partition is called an owner node and one owner node is allocated to one partition. A node that maintains replicas other than the owner node is a backup node. Master data and multiple backup data exist in a partition, depending on the number of replicas set.

The relationship between a container and a partition is persistent and the partition which has a specific container is not changed. The relationship between a partition and a node is temporary and the autonomous data placement may cause partition migration to another node.

Data retained by a partition is saved in an OS disk as a physical database file.

Container

To register and search for data in GridDB, a container (table) needs to be created to store the data. A container is a data structure that serves as an I/F with the user and manages a set of rows. It is called a container when operated through the NoSQL I/F, and a table when operated through the NewSQL I/F.

The naming rules for containers (tables) are the same as those for databases.

- A string consisting of alphanumeric characters, the underscore mark, the hyphen mark, the dot mark, the slash mark, and the equal mark can be specified. The container name should not start with a number.

- Although names are case sensitive, a container (table) cannot be created if its name matches the name of an existing container when the two are compared case-insensitively.
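
The two naming rules above can be expressed as a small check. This sketch covers only the rules stated in this section and assumes that "alphanumeric" means ASCII letters and digits; the function name is hypothetical, and GridDB may apply further rules that are not reproduced here.

```python
import re

# Letters, digits, underscore, hyphen, dot, slash, equal; no leading digit.
NAME_RE = re.compile(r"[A-Za-z_\-./=][A-Za-z0-9_\-./=]*")


def can_create(name: str, existing: set) -> bool:
    """Check a new container name against the stated naming rules."""
    if NAME_RE.fullmatch(name) is None:
        return False
    # Names are case sensitive, but case-insensitive duplicates are rejected.
    return name.lower() not in {other.lower() for other in existing}


print(can_create("sensor_01", set()))
print(can_create("1sensor", set()))
print(can_create("Sensor", {"sensor"}))
```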

[Notes]

- Avoid the name already used for naming a view in the same database.

Type

There are 2 container (table) types. A timeseries container (timeseries table) is suitable for managing data that is generated over time together with its occurrence time, while a collection (table) is suitable for managing a variety of data.

Data type

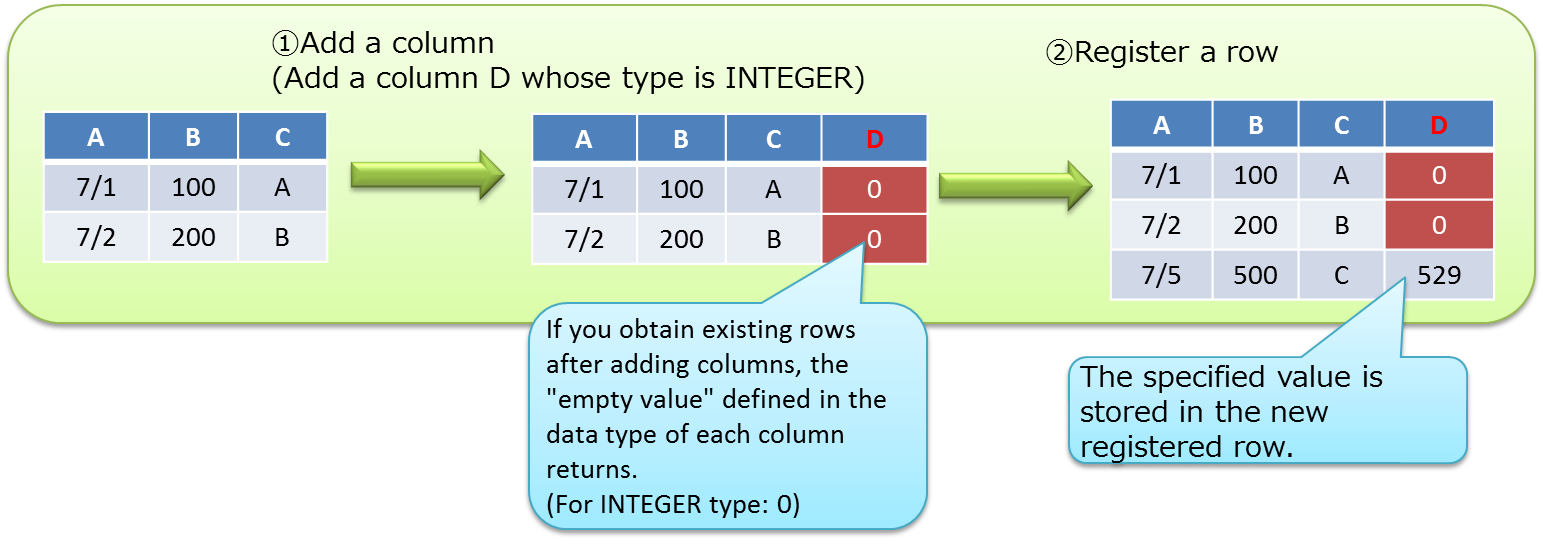

A schema can be set in a container (table). The data types that can be registered in a container (table) are the basic data types and the array data type.

Basic data types

Describes the basic data types that can be registered in a container (table). A basic data type cannot be expressed by a combination of other data types.

| Data type | Description |

|---|---|

| BOOL | True or false |

| STRING | Composed of an arbitrary number of characters using Unicode code points |

| BYTE | Integer value from -2^7 to 2^7-1 (8 bits) |

| SHORT | Integer value from -2^15 to 2^15-1 (16 bits) |

| INTEGER | Integer value from -2^31 to 2^31-1 (32 bits) |

| LONG | Integer value from -2^63 to 2^63-1 (64 bits) |

| FLOAT | Single precision (32 bits) floating point number defined in IEEE754 |

| DOUBLE | Double precision (64 bits) floating point number defined in IEEE754 |

| TIMESTAMP | Data type expressing the date and time. The data format maintained in the database is UTC, and accuracy is in milliseconds. |

| GEOMETRY | Data type to represent a space structure |

| BLOB | Data type for binary data such as images, audio, etc. |
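
The integer ranges in the table follow from the bit width of each type: an n-bit signed integer covers -2^(n-1) to 2^(n-1)-1. The following sketch derives the bounds from the widths listed above; the helper names are hypothetical.

```python
# Bit width of each integer type, as listed in the table above.
INTEGER_BITS = {"BYTE": 8, "SHORT": 16, "INTEGER": 32, "LONG": 64}


def value_range(type_name: str) -> tuple:
    """Return (minimum, maximum) for a signed integer type."""
    bits = INTEGER_BITS[type_name]
    return -(2 ** (bits - 1)), 2 ** (bits - 1) - 1


def fits(type_name: str, value: int) -> bool:
    """Check whether a value is representable in the given type."""
    low, high = value_range(type_name)
    return low <= value <= high


for name in INTEGER_BITS:
    print(name, value_range(name))
```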

The following restrictions apply to the size of the data that can be managed for STRING, GEOMETRY and BLOB data. The restriction value varies according to the block size, which is the input/output unit of the database and is set in the cluster definition file (gs_cluster.json).

| Data type | Block size (64KB) | Block size (from 1MB to 32MB) |

|---|---|---|

| STRING | Maximum 31KB (equivalent to UTF-8 encode) | Maximum 128KB (equivalent to UTF-8 encode) |

| GEOMETRY | Maximum 31KB (equivalent to the internal storage format) | Maximum 128KB (equivalent to the internal storage format) |

| BLOB | Maximum 1GB - 1Byte | Maximum 1GB - 1Byte |
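
An application can check a STRING value against these limits before registering it. The sketch below assumes that 1KB means 1024 bytes and that the limit applies to the UTF-8 encoded length, as the table suggests; the function names are hypothetical.

```python
KB = 1024  # assumption: 1KB = 1024 bytes


def max_string_bytes(block_size_kb: int) -> int:
    """Return the STRING size limit in bytes for a given block size."""
    if block_size_kb == 64:
        return 31 * KB
    if 1024 <= block_size_kb <= 32 * 1024:
        return 128 * KB
    raise ValueError("block size is not covered by the table")


def string_fits(text: str, block_size_kb: int) -> bool:
    """Check the UTF-8 encoded length of a string against the limit."""
    return len(text.encode("utf-8")) <= max_string_bytes(block_size_kb)


print(string_fits("a" * 1000, 64))
```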

GEOMETRY-type (Spatial-type)

GEOMETRY-type (Spatial-type) data is often used in map information systems. It is available only through the NoSQL interface and is not supported by the NewSQL interface.

GEOMETRY type data is described using WKT (Well-known text). WKT is formulated by the Open Geospatial Consortium (OGC), a nonprofit organization promoting the standardization of geospatial information. In GridDB, the spatial information described by WKT can be stored in a column by setting the column of a container as a GEOMETRY type.

GEOMETRY type supports the following WKT forms.

- POINT

- Point represented by two or three-dimensional coordinate.

- Example: POINT(0 10 10)

- LINESTRING

- Set of straight lines in two or three-dimensional space represented by two or more points.

- Example: LINESTRING(0 10 10, 10 10 10, 10 10 0)

- POLYGON

- Closed area in two or three-dimensional space represented by a set of straight lines. Specify the corners of a POLYGON counterclockwise. When building an island in a POLYGON, specify internal points clockwise.

- Example: POLYGON((0 0,10 0,10 10,0 10,0 0)), POLYGON((35 10, 45 45, 15 40, 10 20, 35 10),(20 30, 35 35, 30 20, 20 30))

- POLYHEDRALSURFACE

- Area in the three-dimensional space represented by a set of the specified area.

- Example: POLYHEDRALSURFACE(((0 0 0, 0 1 0, 1 1 0, 1 0 0, 0 0 0)), ((0 0 0, 0 1 0, 0 1 1, 0 0 1, 0 0 0)), ((0 0 0, 1 0 0, 1 0 1, 0 0 1, 0 0 0)), ((1 1 1, 1 0 1, 0 0 1, 0 1 1, 1 1 1)), ((1 1 1, 1 0 1, 1 0 0, 1 1 0, 1 1 1)), ((1 1 1, 1 1 0, 0 1 0, 0 1 1, 1 1 1)))

- QUADRATICSURFACE

- Two-dimensional curved surface in a three-dimensional space represented by defining equation f(X) = <AX, X> + BX + c.

A space structure written as a QUADRATICSURFACE cannot be stored in a container; it can only be specified as a search condition.
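
The winding rule for POLYGON (outer corners counterclockwise, island points clockwise) can be checked with the shoelace formula, where a positive signed area means counterclockwise. The sketch below applies it to the two-dimensional rings from the examples above; it assumes conventional axes (x to the right, y upward), and the helper names are hypothetical.

```python
def parse_ring(text: str) -> list:
    """Parse 'x y, x y, ...' into a list of (x, y) tuples."""
    return [tuple(float(v) for v in point.split()) for point in text.split(",")]


def signed_area(ring: list) -> float:
    """Shoelace formula: positive for counterclockwise, negative for clockwise."""
    return 0.5 * sum(
        x1 * y2 - x2 * y1 for (x1, y1), (x2, y2) in zip(ring, ring[1:])
    )


outer = parse_ring("35 10, 45 45, 15 40, 10 20, 35 10")
island = parse_ring("20 30, 35 35, 30 20, 20 30")
print(signed_area(outer) > 0)   # outer ring is counterclockwise
print(signed_area(island) < 0)  # island ring is clockwise
```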

Operations using GEOMETRY can be executed with API or TQL.

With TQL, two-dimensional and three-dimensional spatial structures can be handled. Generation and judgment functions are also provided.

SELECT * WHERE ST_MBRIntersects(geom, ST_GeomFromText('POLYGON((0 0,10 0,10 10,0 10,0 0))'))

See GridDB TQL Reference for details of the functions of TQL.

HYBRID

A data type composed of a combination of basic data types that can be registered in a container. The only hybrid data type in the current version is an array.

-

Array

Expresses an array of values. Among the basic data types, only GEOMETRY and BLOB data cannot be maintained as an array. The restriction on the data volume that can be maintained in an array varies according to the block size of the database.

| Data type | Block size (64KB) | Block size (from 1MB to 32MB) |

|---|---|---|

| Number of arrays | 4000 | 65000 |

[Note]

The following restrictions apply to TQL operations in an array column.

-

Although the i-th value in the array column can be compared, calculations (aggregation) cannot be performed on all the elements.

-

(Example) When column A was defined as an array

-

An element of the array can be specified and compared, as in select * where ELEMENT (0, column A) > 0. However, a variable cannot be specified in place of “0” in ELEMENT.

-

Aggregation such as select SUM (column A) cannot be carried out.

-

Primary key

A primary key (ROWKEY) can be set in a container (table). The uniqueness of rows is guaranteed in a container with a ROWKEY set. NULL is not allowed in a column for which ROWKEY is set.

In the NewSQL I/F, ROWKEY is called PRIMARY KEY.

- For a timeseries container (timeseries table)

- A ROWKEY can be set in the first column of the row. (This is set in Column No. 0 since columns start from 0 in GridDB.)

- ROWKEY (PRIMARY KEY) is a TIMESTAMP

- Must be specified.

- For a collection (table)

- ROWKEY (PRIMARY KEY) can be set to multiple columns that are consecutive from the first column. A ROWKEY set to multiple columns is called a composite ROWKEY, which can consist of up to 16 columns.

- Example: ROWKEY can be set to str1, str2, str3, which are consecutive from the first column.

CREATE TABLE sample_table1 (str1 string, str2 string, str3 string, str4 string, str5 string, int1 integer, PRIMARY KEY(str1, str2, str3));

- Example: ROWKEY cannot be set to str1, str3, str4, which are not consecutive columns. Executing the following SQL will cause an error.

CREATE TABLE sample_table2 (str1 string, str2 string, str3 string, str4 string, str5 string, int1 integer, PRIMARY KEY(str1, str3, str4));

- A ROWKEY (PRIMARY KEY) is either a STRING, INTEGER, LONG or TIMESTAMP column.

- Need not be specified.

A default index, determined in advance by the column data type, can be set for a column specified as ROWKEY (PRIMARY KEY).

In the current version of GridDB, the default index for all STRING, INTEGER, LONG and TIMESTAMP data that can be specified as a ROWKEY (PRIMARY KEY) is the TREE index.

[Notes]

- Refer to “Handling composite row keys” in GridDB Programming Guide for an example of setting composite row keys with the NoSQL interface.
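The collection ROWKEY rules above (consecutive from the first column, at most 16 columns, STRING, INTEGER, LONG or TIMESTAMP only) can be sketched as a small check. This is an illustrative Python sketch, not GridDB code: the function name `check_collection_rowkey` is hypothetical, and it assumes that the key columns are listed in the same order as they are defined.

```python
ROWKEY_TYPES = {"STRING", "INTEGER", "LONG", "TIMESTAMP"}
MAX_ROWKEY_COLUMNS = 16

def check_collection_rowkey(columns, rowkey):
    """Return a list of rule violations; an empty list means the ROWKEY is acceptable.

    columns: list of (name, type) tuples in definition order.
    rowkey:  list of column names making up the (composite) ROWKEY.
    """
    problems = []
    if not rowkey:
        return problems  # a ROWKEY need not be specified for a collection
    if len(rowkey) > MAX_ROWKEY_COLUMNS:
        problems.append("a composite ROWKEY can have at most 16 columns")
    # Assumption: key columns must match the leading columns in definition order.
    leading = [name for name, _ in columns[:len(rowkey)]]
    if list(rowkey) != leading:
        problems.append("ROWKEY columns must be consecutive from the first column")
    types = dict(columns)
    for name in rowkey:
        col_type = types.get(name)
        if col_type is None:
            problems.append("unknown column: " + name)
        elif col_type.upper() not in ROWKEY_TYPES:
            problems.append("unsupported ROWKEY type for " + name + ": " + col_type)
    return problems

# Column layout shared by sample_table1 and sample_table2 in the examples above.
sample_columns = [
    ("str1", "STRING"), ("str2", "STRING"), ("str3", "STRING"),
    ("str4", "STRING"), ("str5", "STRING"), ("int1", "INTEGER"),
]

print(check_collection_rowkey(sample_columns, ["str1", "str2", "str3"]))
print(check_collection_rowkey(sample_columns, ["str1", "str3", "str4"]))
```

The first call corresponds to sample_table1 and reports no problems; the second corresponds to sample_table2 and reports the non-consecutive columns.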

View

A view provides a reference to data in a container.

Define a reference (SELECT statement) to a container when creating a view. A view is an object similar to a container, but it does not have real data. When executing a query containing a view, the SELECT statement, which was defined when the view was created, is evaluated, and a result is returned.

Views can only be referenced (SELECT); adding data (INSERT), updating data (UPDATE), and deleting data (DELETE) are not accepted.

[Notes]

- Do not use a name that is already used by a container in the same database.

- The naming rule of a view is the same as the naming rule of a container.
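The behavior described above (a view holds no data, is evaluated at query time, and rejects writes) can be tried out with any SQL engine that supports views. The sketch below uses SQLite from the Python standard library purely as a stand-in; it is not GridDB, and GridDB's SQL syntax, data types, and error messages may differ. The table and view names are made up for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE readings (sensor TEXT, value REAL)")
conn.executemany("INSERT INTO readings VALUES (?, ?)", [("s1", 10.0), ("s2", 42.5)])

# The view stores only the SELECT statement, not the rows.
conn.execute(
    "CREATE VIEW high_readings AS "
    "SELECT sensor, value FROM readings WHERE value > 20"
)
rows_before = conn.execute("SELECT * FROM high_readings ORDER BY sensor").fetchall()

# New data in the underlying table shows up the next time the view is queried.
conn.execute("INSERT INTO readings VALUES (?, ?)", ("s3", 99.0))
rows_after = conn.execute("SELECT * FROM high_readings ORDER BY sensor").fetchall()

# Writing through the view is rejected.
try:
    conn.execute("INSERT INTO high_readings VALUES ('s4', 50.0)")
    insert_rejected = False
except sqlite3.OperationalError:
    insert_rejected = True

print(rows_before, rows_after, insert_rejected)
```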

Database function

Resource management

Besides the database residing in memory, the other resources constituting a GridDB cluster are persisted to a disk. The persisted resources are listed below.

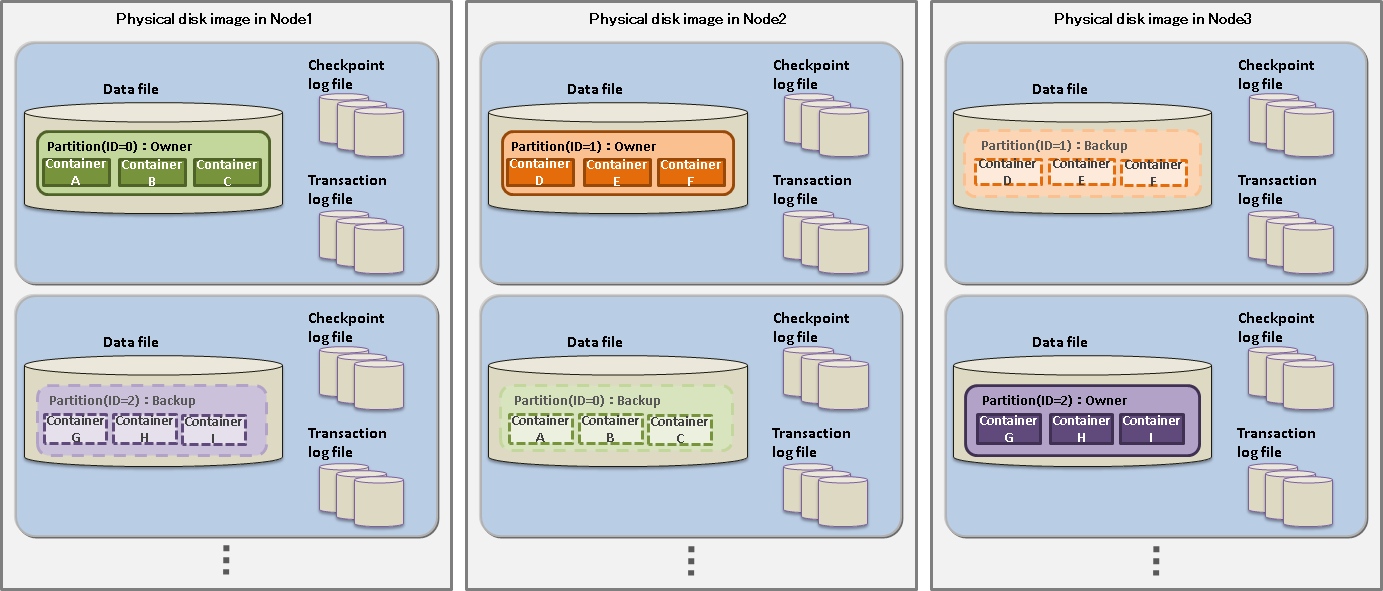

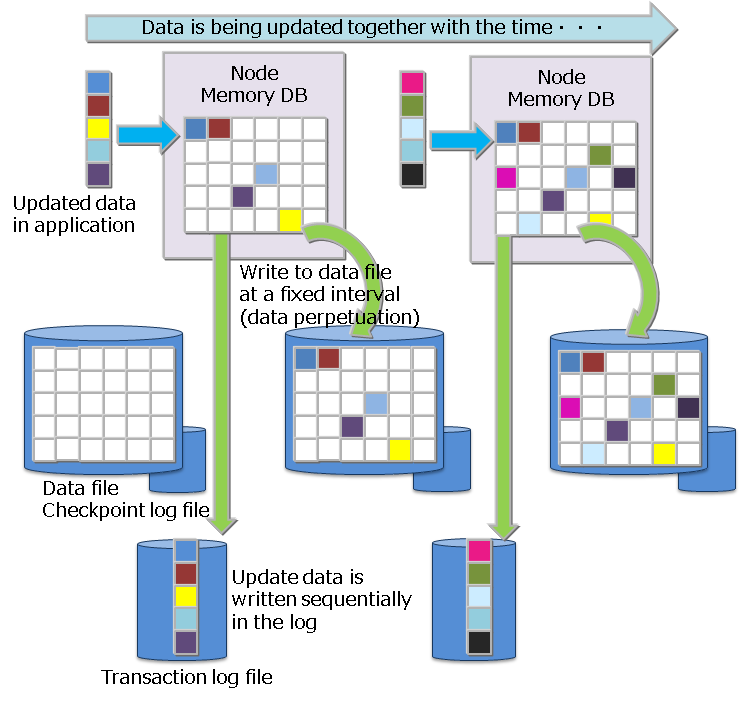

- Database file

Database file is a collective term for data files and checkpoint log files, to which the in-memory database is periodically written, and transaction log files, which are saved every time data is updated.

- Data file

A data file is a file where partitions are persisted to a disk. Updates made in memory are written to this file at the interval specified in the node definition file (/checkpoint/checkpointInterval). The file size expands relative to the data capacity. Once expanded, the size of a data file does not decrease even when data including container data and row data is deleted. In this case, GridDB reuses the free space instead. A data file can be split into smaller ones.

- Checkpoint log file

A checkpoint log file is a file where block management information for partitions is persisted to a disk. Block management information is written in smaller batches at the interval (/checkpoint/checkpointInterval) specified in the node definition file. By default, a maximum of ten files are created for each partition. The number of split files can be adjusted with the number of batches (splits), /checkpoint/partialCheckpointInterval, in the node definition file.

- Transaction log file

Transaction data written to the in-memory database is persisted to the transaction log file by writing the data sequentially in a log format. One file stores the logs of transactions executed from the start of the last checkpoint to the start of the next checkpoint. By default, a maximum of three files are created for each partition, consisting of the current log file and the previous two generations of log files.

- Definition file

Definition files include two types of parameter files: gs_cluster.json, hereinafter referred to as the cluster definition file, which is used when composing a cluster; and gs_node.json, hereinafter referred to as the node definition file, which is used to set the operations and resources of a node in a cluster. They also include a user definition file for GridDB administrator users.

- Event log file

The event log of the GridDB server is saved in this file, including messages such as errors, warnings and so on.

- Audit log file

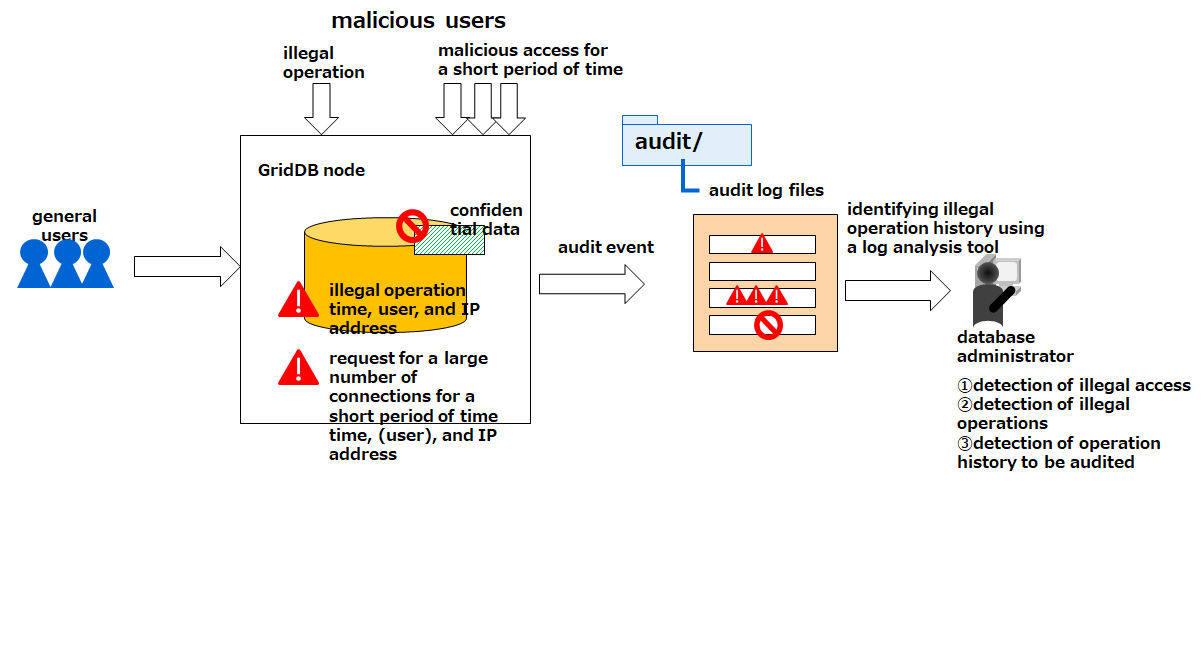

Audit logs of the GridDB server, which include access logs, operational logs, and messages that record error logs, are saved in this file.

- Backup file

Backup data of the GridDB data files is saved in this file.

The placement of these resources is defined in GridDB home (the path specified in the environment variable GS_HOME). In the initial installation state, the /var/lib/gridstore directory is GridDB home, and the initial data of each resource is placed under this directory.

The directories are placed initially as follows.

    /var/lib/gridstore/        # GridDB home directory path
        admin/                 # gs_admin home directory
        backup/                # Backup directory
        conf/                  # Definition files directory
            gs_cluster.json    # Cluster definition file
            gs_node.json       # Node definition file
            password           # User definition file
        data/                  # data files and checkpoint log directory
        txnlog/                # Transaction log directory
        expimp/                # Export/Import directory
        log/                   # Log directory
        audit/                 # Server audit log directory (only when audit logs are set)

The location of GridDB home can be changed by setting the .bash_profile file of the OS user gsadm. If you change the location, please also move resources in the above directory accordingly.

The .bash_profile file contains two environment variables, GS_HOME and GS_LOG.

vi .bash_profile

# GridStore specific environment variables

GS_LOG=/var/lib/gridstore/log

export GS_LOG

GS_HOME=/var/lib/gridstore # GridDB home directory path

export GS_HOME

The database directory, backup directory, server event log directory, and server audit log directory can be changed by changing the settings of the node definition file as well.

See Parameters for the contents that can be set in the cluster definition file and node definition file.
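Parameters in this section are written as slash-separated paths such as /checkpoint/checkpointInterval. Assuming that such a path maps onto nested keys in the JSON of the node definition file, a value can be looked up as in the Python sketch below. The helper `get_parameter` is hypothetical, and the JSON fragment and its values are illustrative only, not a complete or authoritative gs_node.json.

```python
import json

def get_parameter(definition, path):
    """Walk a parsed definition file using a path like /checkpoint/checkpointInterval."""
    node = definition
    for key in path.strip("/").split("/"):
        node = node[key]  # raises KeyError if the parameter is not present
    return node

# Illustrative fragment; the values here are assumptions for the example.
sample_node_definition = json.loads("""
{
    "checkpoint": {
        "checkpointInterval": "60s",
        "partialCheckpointInterval": 10
    }
}
""")

print(get_parameter(sample_node_definition, "/checkpoint/checkpointInterval"))
print(get_parameter(sample_node_definition, "/checkpoint/partialCheckpointInterval"))
```

In practice the dictionary would come from reading conf/gs_node.json under GridDB home with `json.load`.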

Data access function

To access GridDB data, an application must be developed using the NoSQL interface or the NewSQL interface. Data can be accessed simply by connecting to the cluster database of GridDB, without considering where in the cluster database the container or table is located. The application system does not need to consider which of the nodes constituting the cluster holds the container.

In the GridDB API, when connecting to a cluster database initially, placement hint information of the container is retained (cached) on the client end together with the node information (partition).

Communication overhead is kept to a minimum because the client connects directly to the node holding the container and processes the request there, without having to query the cluster for the container's location every time the application switches containers.

Although container placement changes dynamically due to the rebalancing process in GridDB, the client can follow the position of a container because the client cache is updated regularly. For example, even if a client misses the target node during access, due to a failure or a discrepancy between the regular update timing and the rebalancing timing, the relocated information is acquired automatically and processing continues.
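The caching behavior described above can be sketched as follows. This is a simplified Python model, not the GridDB client implementation: the classes `FakeCluster` and `Client`, the CRC-based mapping from container name to partition, and the way a stale entry is detected are all assumptions made for illustration.

```python
import zlib

class FakeCluster:
    """Stand-in for a cluster: records which node owns each partition."""
    def __init__(self, partition_count, nodes):
        self.partition_count = partition_count
        self.owners = {p: nodes[p % len(nodes)] for p in range(partition_count)}
        self.lookups = 0  # how often a client had to ask for placement

    def locate(self, partition):
        self.lookups += 1
        return self.owners[partition]

    def serves(self, node, partition):
        # In this model a request to the wrong node is simply refused.
        return self.owners[partition] == node

class Client:
    def __init__(self, cluster):
        self.cluster = cluster
        self.cache = {}  # partition -> node, kept on the client side

    def partition_of(self, container):
        # Hypothetical placement rule, used only for this sketch.
        return zlib.crc32(container.encode("utf-8")) % self.cluster.partition_count

    def node_for(self, container):
        partition = self.partition_of(container)
        node = self.cache.get(partition)
        if node is None or not self.cluster.serves(node, partition):
            # Cache miss or stale entry: fetch the current placement and retry.
            node = self.cluster.locate(partition)
            self.cache[partition] = node
        return node

cluster = FakeCluster(partition_count=8, nodes=["A", "B"])
client = Client(cluster)

first = client.node_for("sensor_1")
second = client.node_for("sensor_1")       # served from the client cache
lookups_before_rebalance = cluster.lookups

# Simulate rebalancing: the partition moves to a new node "C".
cluster.owners[client.partition_of("sensor_1")] = "C"
after_rebalance = client.node_for("sensor_1")
```

The second access costs no lookup, and the access after the simulated rebalancing costs exactly one, which is the effect the text attributes to the client cache.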

TQL and SQL

TQL and SQL-92 compliant SQL are supported as database access languages.

- What is TQL?

A simplified SQL prepared for GridDB. The support range is limited to functions such as search, aggregation, etc., using a container as a unit. TQL is used through the client API (Java, C language) of GridDB.

TQL is suited to searches on a small container that return a small number of hits. In that case, the response is faster than SQL. The number of hits can be limited with the LIMIT clause of TQL.

For the search of a large amount of data, SQL is recommended.

TQL is available for the containers and partitioned tables created by operations through the NewSQL interface. The following limitations apply to TQL on partitioned tables.

- Filtering data with the WHERE clause is available, but aggregate functions, timeseries data selection or interpolation, the min or max function, the ORDER BY clause, etc. are not available.

- It is not possible to apply the update lock.

See GridDB TQL Reference for details.

- What is SQL?

SQL is a language whose specifications are standardized by ISO. GridDB supports an interface for defining and manipulating data in conformance with SQL-92. SQL can be used in the NewSQL I/F.

SQL is also available for the containers created by operations through the NoSQL interface.

See GridDB SQL Reference for details.

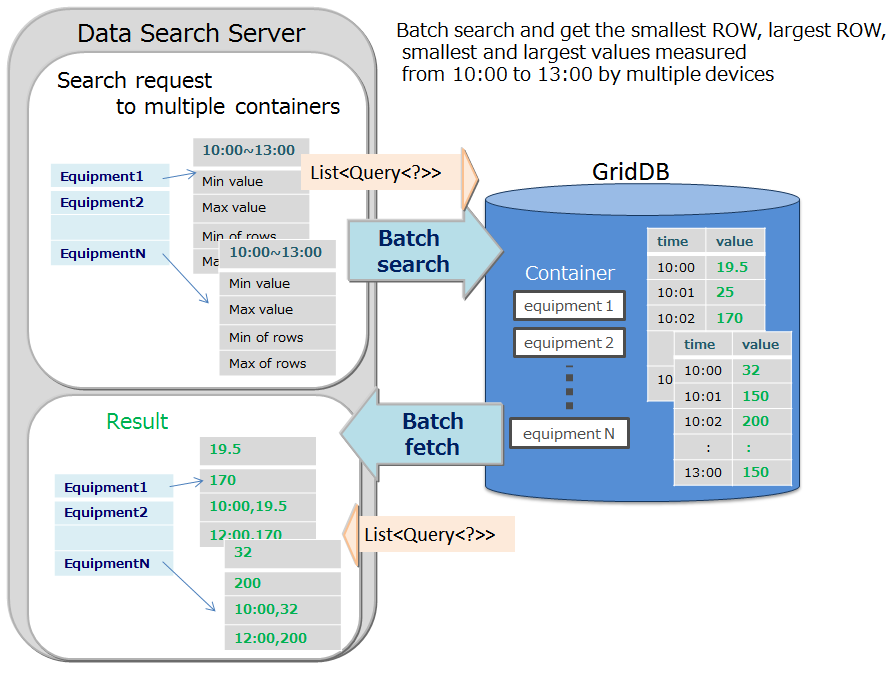

Batch-processing function to multiple containers

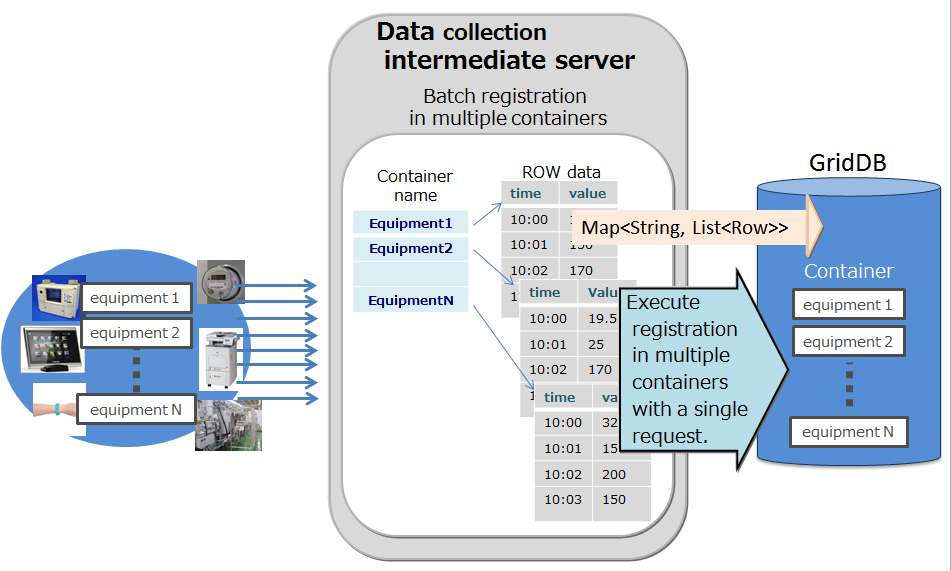

An interface to quickly process event information that occurs occasionally is available in NoSQL I/F.

If events are sent to the database server one at a time as they occur, a large volume of events increases the load on the network and system throughput does not rise. The impact is especially significant when the communication line bandwidth is narrow. The NoSQL I/F provides multi-processing, which handles multiple row registrations for multiple containers and multiple inquiries (TQL) to multiple containers in a single request. Overall system throughput rises because the database server is accessed less frequently.

An example is given below.

- Multi-put

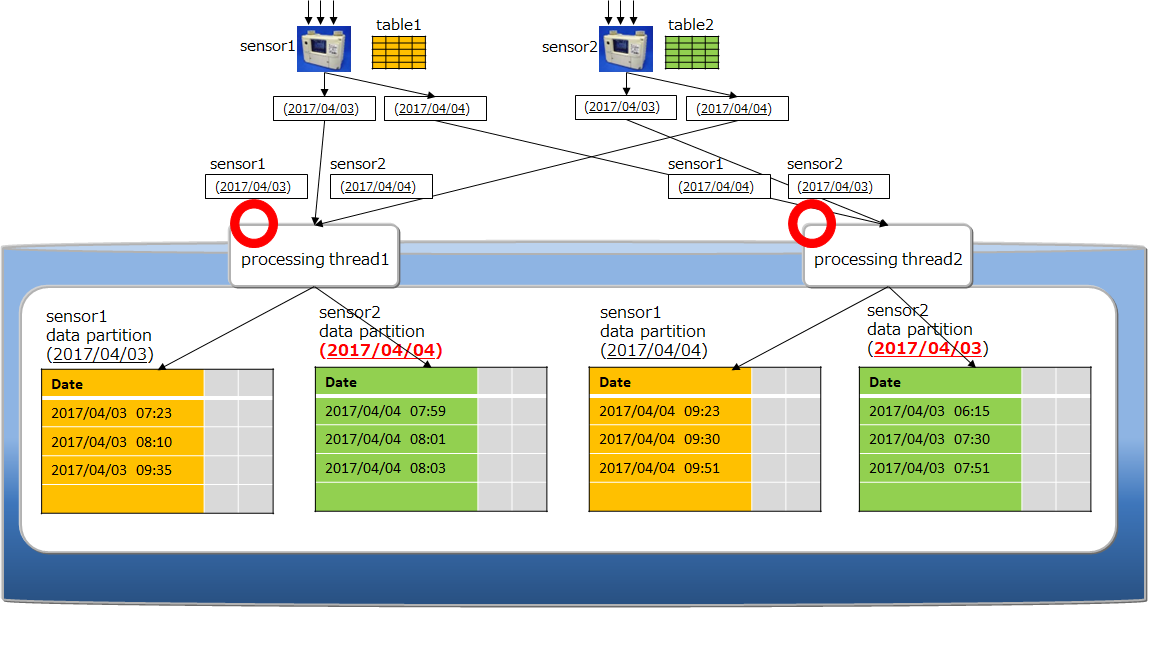

To register event information from multiple sensors in the database, a container is prepared for each sensor name. A row array of timeseries events is created for each sensor name, and a list (map) summarizing the data for multiple sensors is created. This list data is registered in the GridDB database each time the API is invoked.

- The multi-put API optimizes communication by combining, for each node, the data registration requests for multiple containers in a GridDB cluster, which is formed by multiple nodes. In addition, multi-registrations are processed quickly without performing MVCC when executing a transaction.

- In multi-put processing, transactions are committed automatically. Data is confirmed on a single case basis.

-

-
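The request combining described for multi-put can be sketched as a grouping step: rows are collected per container, and containers are bundled per owning node so that each node receives one request. The Python below is an illustrative model only. The functions `owner_node` and `build_multi_put_requests` and the CRC-based placement rule are hypothetical and do not reflect how GridDB actually assigns containers to nodes.

```python
from collections import defaultdict
import zlib

def owner_node(container, nodes):
    """Hypothetical placement rule: pick a node from a checksum of the container name."""
    return nodes[zlib.crc32(container.encode("utf-8")) % len(nodes)]

def build_multi_put_requests(events, nodes):
    """Group (container, row) events into one request per node.

    Returns {node: {container: [row, ...]}}.
    """
    requests = defaultdict(lambda: defaultdict(list))
    for container, row in events:
        requests[owner_node(container, nodes)][container].append(row)
    return {node: dict(by_container) for node, by_container in requests.items()}

# Events from three sensors, one container per sensor name: (timestamp, value) rows.
events = [
    ("sensor_a", (1, 20.5)), ("sensor_b", (1, 18.0)), ("sensor_c", (1, 30.1)),
    ("sensor_a", (2, 20.7)), ("sensor_b", (2, 18.2)), ("sensor_a", (3, 21.0)),
]
nodes = ["node1", "node2"]

requests = build_multi_put_requests(events, nodes)
for node, by_container in sorted(requests.items()):
    print(node, {name: len(rows) for name, rows in by_container.items()})
```

Six individual registrations are reduced to at most one request per node, which is where the saving in round trips comes from.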

Multi-query (fetchAll)

- Instead of executing multiple queries to a container, these can be executed in a single query by aggregating event information of the sensor. For example, this is most suitable for acquiring aggregate results such as the daily maximum, minimum and average values of data acquired from a sensor, or data of a row set having the maximum or minimum value, or data of a row set meeting the specified condition.

-

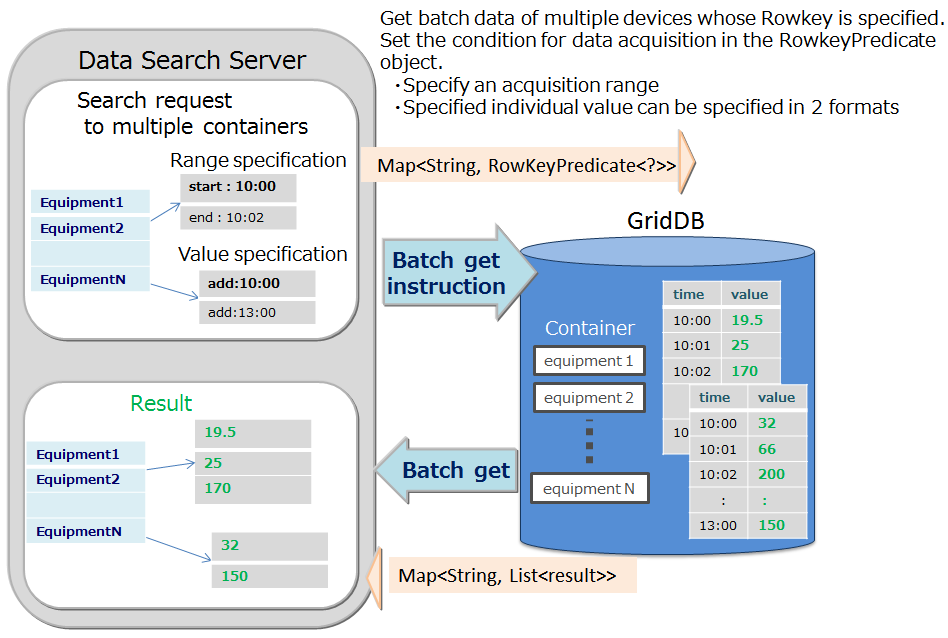

Multi-get

-

Instead of executing multiple queries to a sensor, these can be executed in a single query by consolidating event information of the sensor. For example, this is most suitable for acquiring aggregate results such as the daily maximum, minimum and average values of data acquired from a sensor, or data of a row set having the maximum or minimum value, or data of a row set meeting the specified condition.

-

In a RowKeyPredicate object, the acquisition condition is set in either one of the 2 formats below.

- Specify the acquisition range

- Specify individual values

-
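The two ways of setting an acquisition condition can be sketched with a small stand-in class. This Python model is not the GridDB RowKeyPredicate API: the class `KeyPredicate`, its method names, the inclusive range bounds, and the rule that the two formats cannot be mixed are assumptions made for illustration.

```python
class KeyPredicate:
    """Hypothetical stand-in: holds either a key range or a set of individual keys."""
    def __init__(self):
        self.start = None
        self.finish = None
        self.values = set()

    def set_range(self, start, finish):
        if self.values:
            raise ValueError("individual values are already set")
        self.start, self.finish = start, finish
        return self

    def add(self, value):
        if self.start is not None or self.finish is not None:
            raise ValueError("a range is already set")
        self.values.add(value)
        return self

    def matches(self, key):
        if self.values:
            return key in self.values
        # Assumption: both ends of the range are inclusive; None means unbounded.
        above = self.start is None or key >= self.start
        below = self.finish is None or key <= self.finish
        return above and below

def multi_get(containers, predicates):
    """Fetch matching rows from several containers in one call.

    containers: {container name: {row key: row}}
    predicates: {container name: KeyPredicate}
    """
    return {
        name: [row for key, row in sorted(containers[name].items()) if pred.matches(key)]
        for name, pred in predicates.items()
    }

containers = {
    "sensor_a": {1: "a1", 2: "a2", 3: "a3"},
    "sensor_b": {1: "b1", 5: "b5", 9: "b9"},
}
predicates = {
    "sensor_a": KeyPredicate().set_range(2, 3),   # acquisition range
    "sensor_b": KeyPredicate().add(1).add(9),     # individual values
}
result = multi_get(containers, predicates)
print(result)
```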

Index function

A condition-based search can be processed quickly by creating an index for the columns of a container (table).

Two types of indexes are available: tree indexes (TREE) and spatial indexes (SPATIAL). The index that can be set differs depending on the container (table) type and column data type.

- TREE INDEX

- A tree index is used for an equality search and a range search (including greater than/equal to, and less than/equal to).

- This can be set for columns of the following data type in any type of container (table), except for columns corresponding to a rowkey in a timeseries container (timeseries table).

- STRING

- BOOL

- BYTE

- SHORT

- INTEGER

- LONG

- FLOAT

- DOUBLE

- TIMESTAMP

- Only a tree index allows an index with multiple columns, which is called a composite index. A composite index can be set up to 16 columns, where the same column cannot be specified more than once.

- SPATIAL INDEX

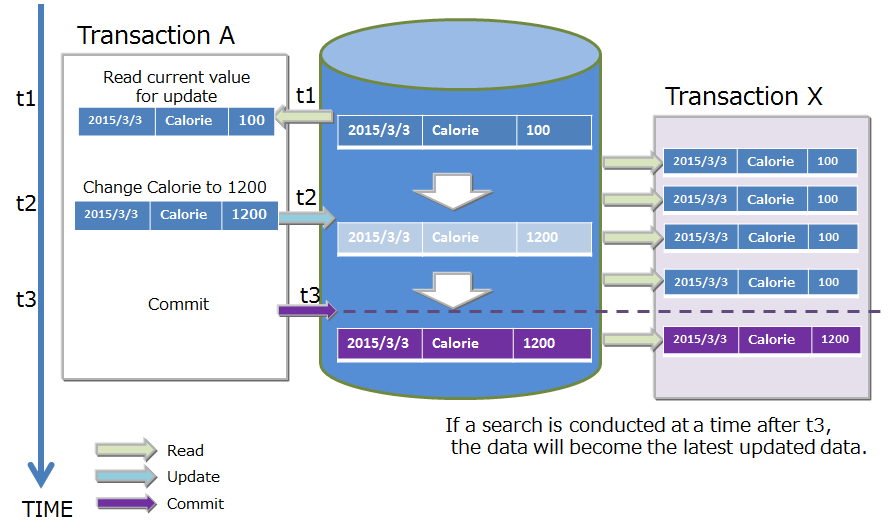

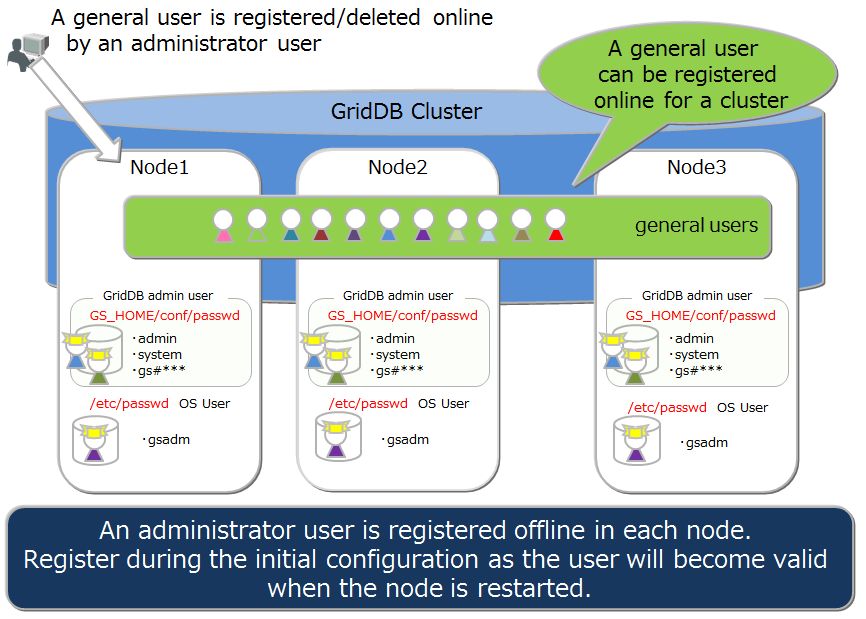

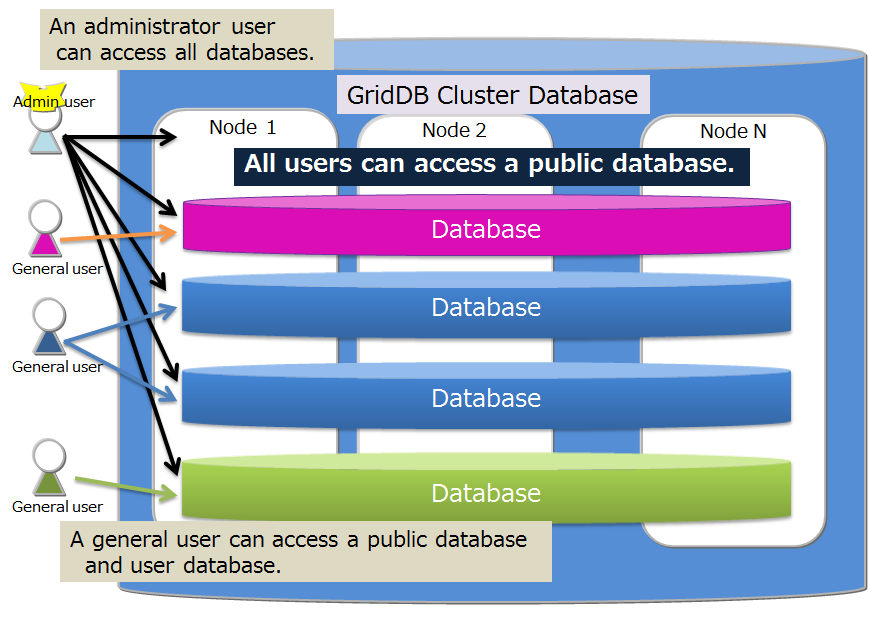

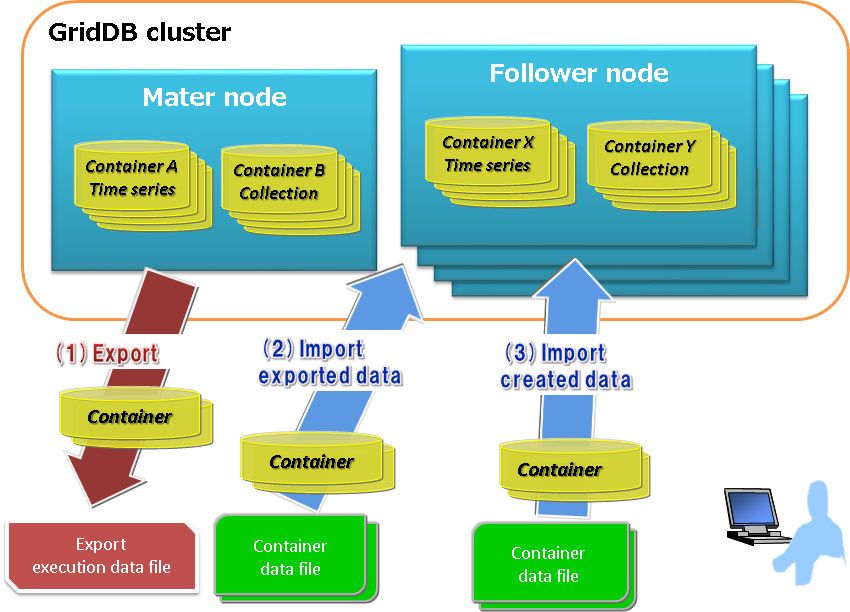

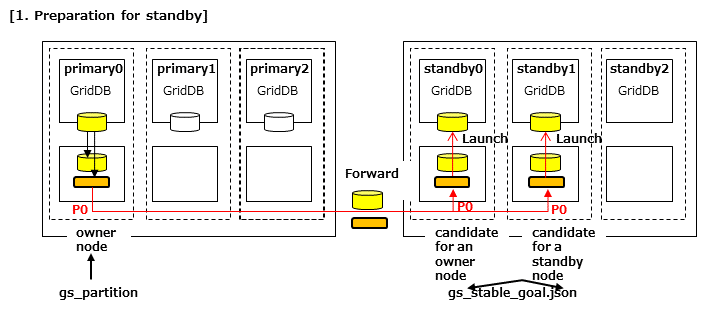

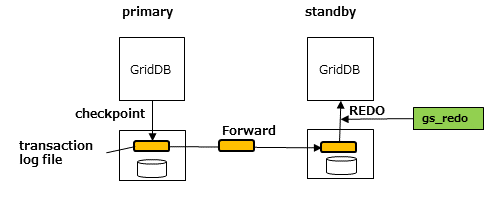

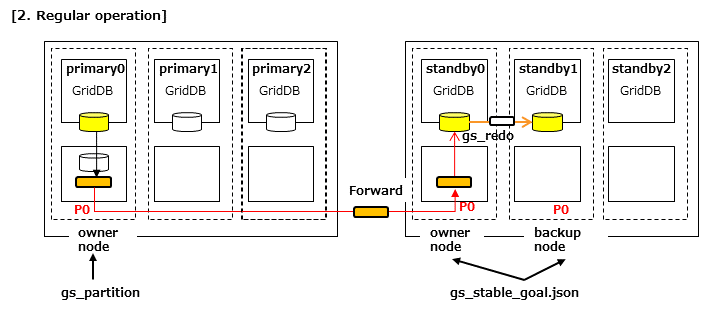

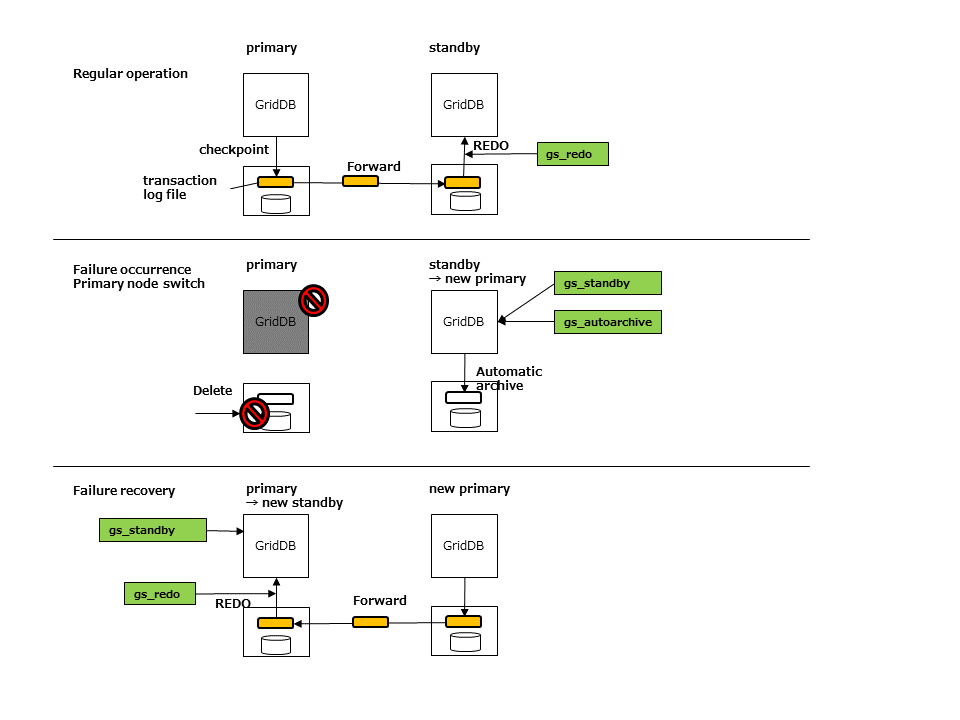

- Can be set only for GEOMETRY columns in a collection. Specify this index to perform spatial searches at high speed.