griddb.github.io

— Introduction —

This document describes design, build, and operations provided by GridDB.

It is for designers involved in system development with GridDB and administrators involved in operations management of GridDB.

This document is organized as follows:

- Overview

- Describes an overview of the features and the architecture of GridDB required for its design, build, and operation.

- Physical design

- Describes the design items for GridDB needed to implement the non-functional requirements of the system

- Build

- Describes the installation and uninstallation of GridDB.

- Operation

- Describes operations management, including monitoring and responses returned when a failure occurs

— Overview —

Features of GridDB

GridDB is a high performance distributional NoSQL-type database that delivers expandability and availability, featuring:

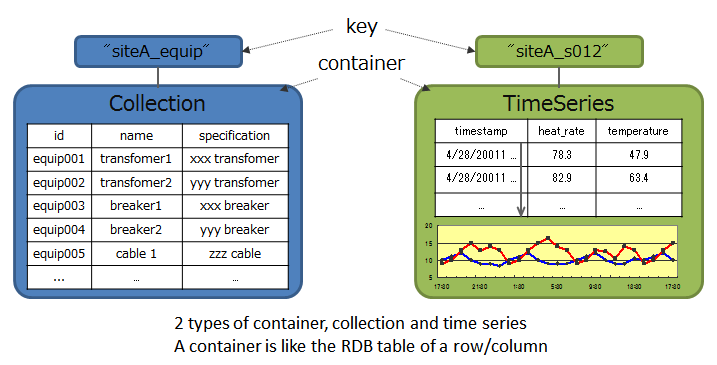

Data models and supporting interfaces

GridDB has a data model that evolved out of a key-value database. It stores data in a container corresponding to RDB tables.

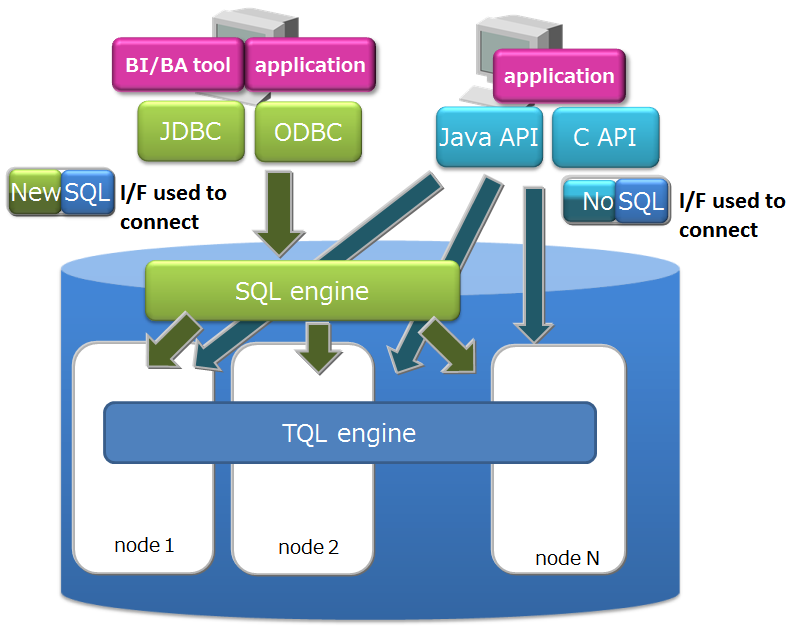

It supports client APIs and an SQL-like query language called TQL as the NoSQL interface, and JDBC/ODBC and SQL as the NewSQL interface.

Availability

GridDB works with the clusters consisting of multiple nodes (server process).

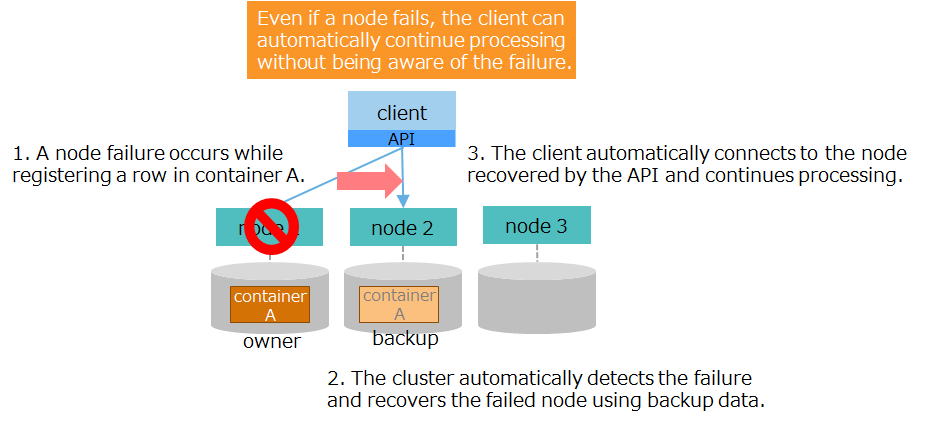

When a node fails, a cluster automatically detects a failure and moves the role of the failed node to another node to allow the cluster to continue running. Further, within clusters, GridDB duplicates and multi-configures (replicate) data on multiple nodes. As such, even after a failure has occurred, clusters automatically relocate replicas (autonomous data placement), which enables continuous data access.

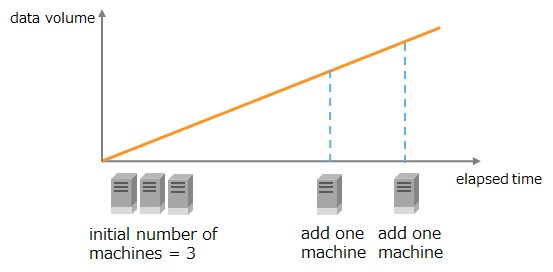

Scalability

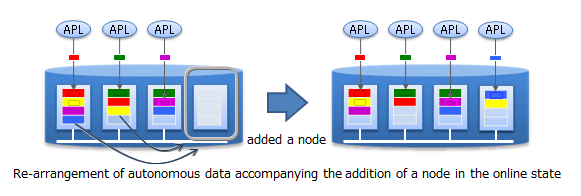

One way to improve the performance of a cluster in GridDB is to scale up the server where the nodes are running; another is to perform a scale-out operation by adding nodes to a cluster.

In a scale-out operation, the system can be expanded online. Those nodes added to a system during a scale-out operation are easy to operate; data in the nodes will be properly placed depending on the system load.

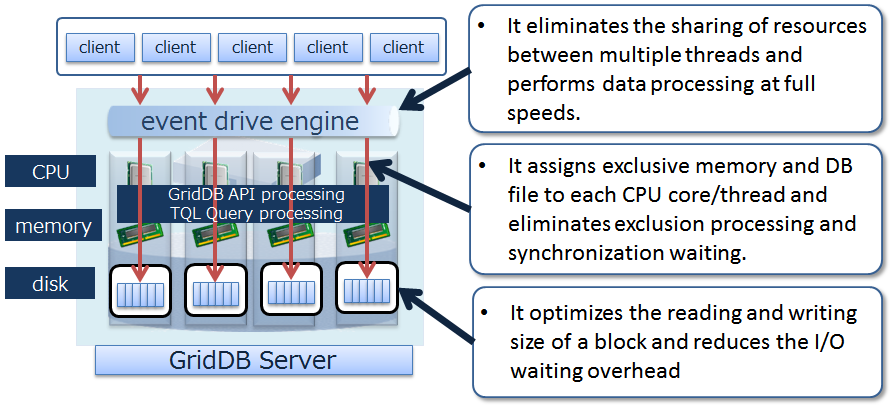

High speed

To minimize latency for executing database, GridDB allocates memory and database files that threads occupy for each CPU core thread, which eliminates latency for assignment, exclusion, and synchronization.

To achieve high speed, GridDB additionally has the following features:

- index feature

- table partitioning

- data compression

- expiration

- affinity

For details, see the GridDB Features Reference.

GridDB: Architectural overview

This section gives an overview of the architecture of GridDB required for its design, build, and operation.

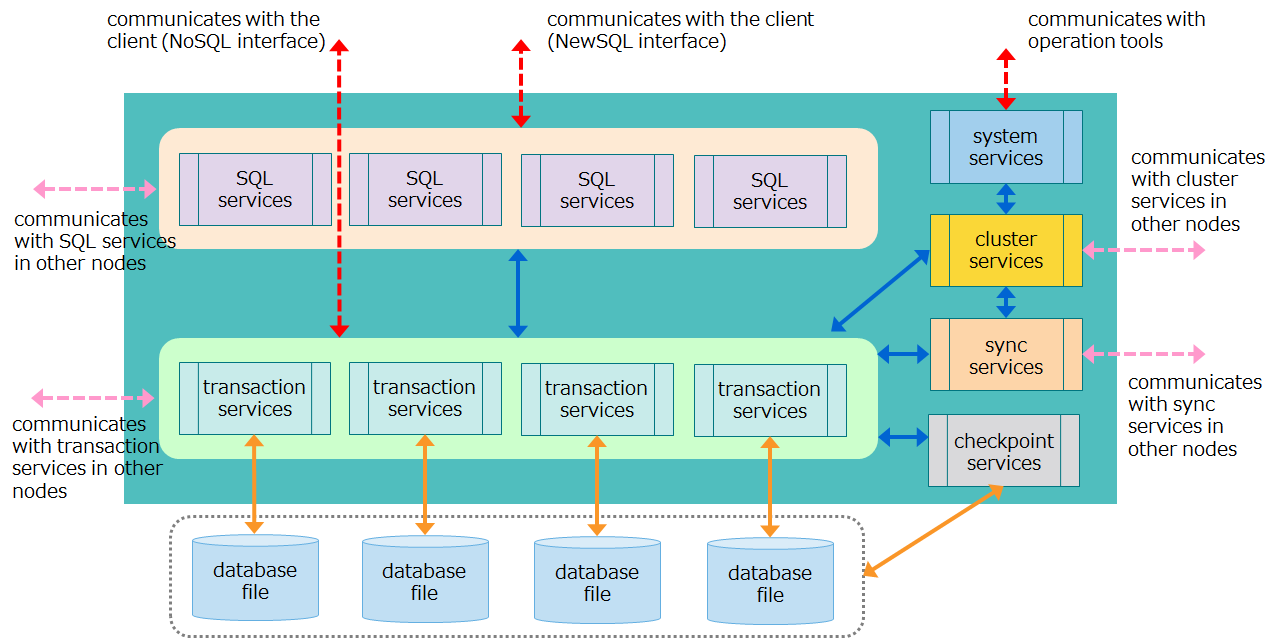

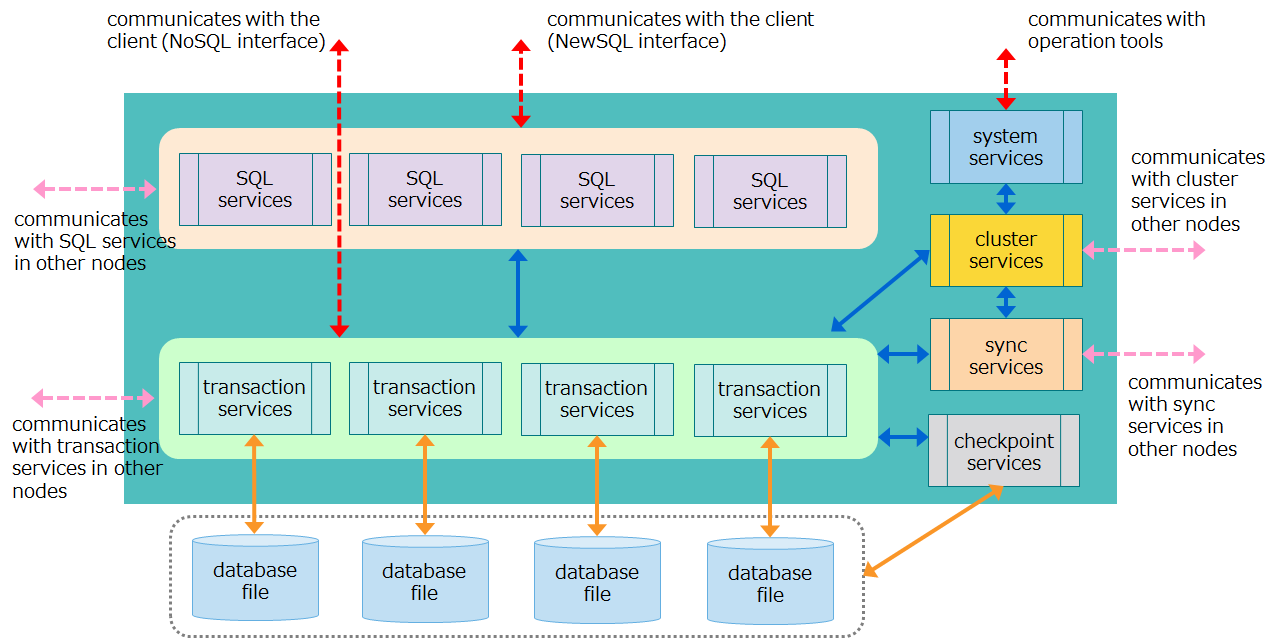

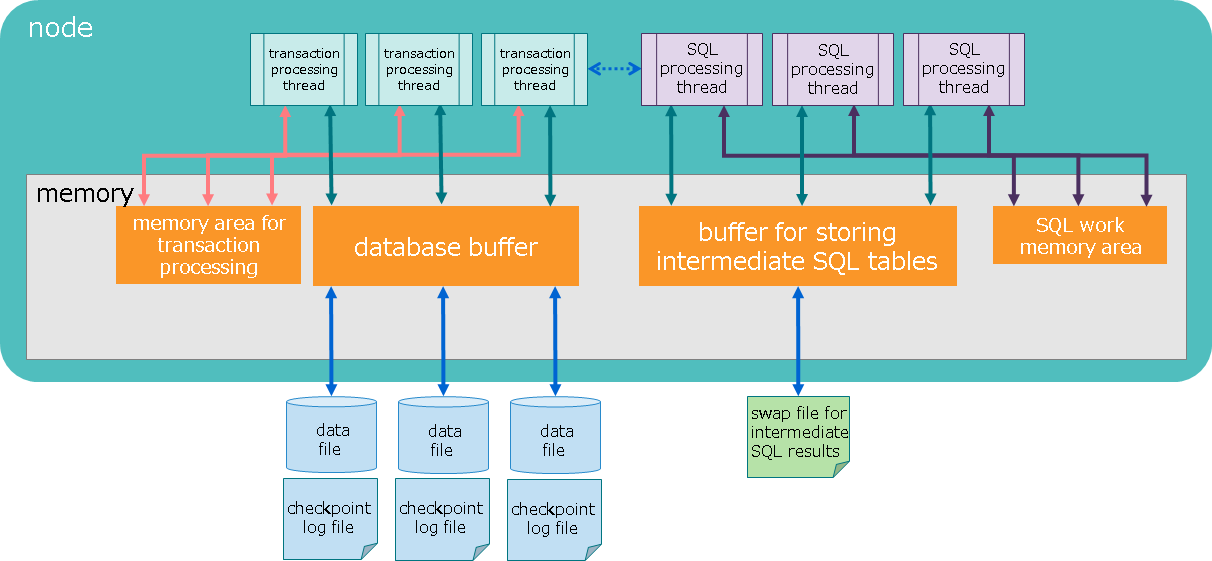

Thread configuration

Nodes contain multiple threads (called services) which execute various types of processing. This section describes thread configuration and what each thread does.

| Thread name | What thread do |

|---|---|

| Transaction services | Executes transaction processing, including row registration and search operations, and database file write operations, among others. |

| Checkpoint services | Executes checkpoint processing. |

| Cluster services | Executes cluster configuration processing, including heartbeat and node participation and departure. |

| Sync services | During partition relocation, executes long-term and short-term synchronization operations for data synchronization. |

| System services | Accepts requests for production and management operations. |

| SQL services | Executes SQL processing |

-

Flow of processing from a client

- Requested processing from a NoSQL client is assigned to transaction services where row registration, search processing, and other types of processing are executed.

- Requested processing from a NewSQL client is first handled by SQL services to perform SQL processing, and then is assigned to transaction services.

Data management

Database files and transaction services both of which manage data have a one-to-one relationship.

These database files have an internal structure called partition which manages multiple containers. Each transaction service operates on only one partition; it can be executed without the need for exclusive processing from other transaction services.

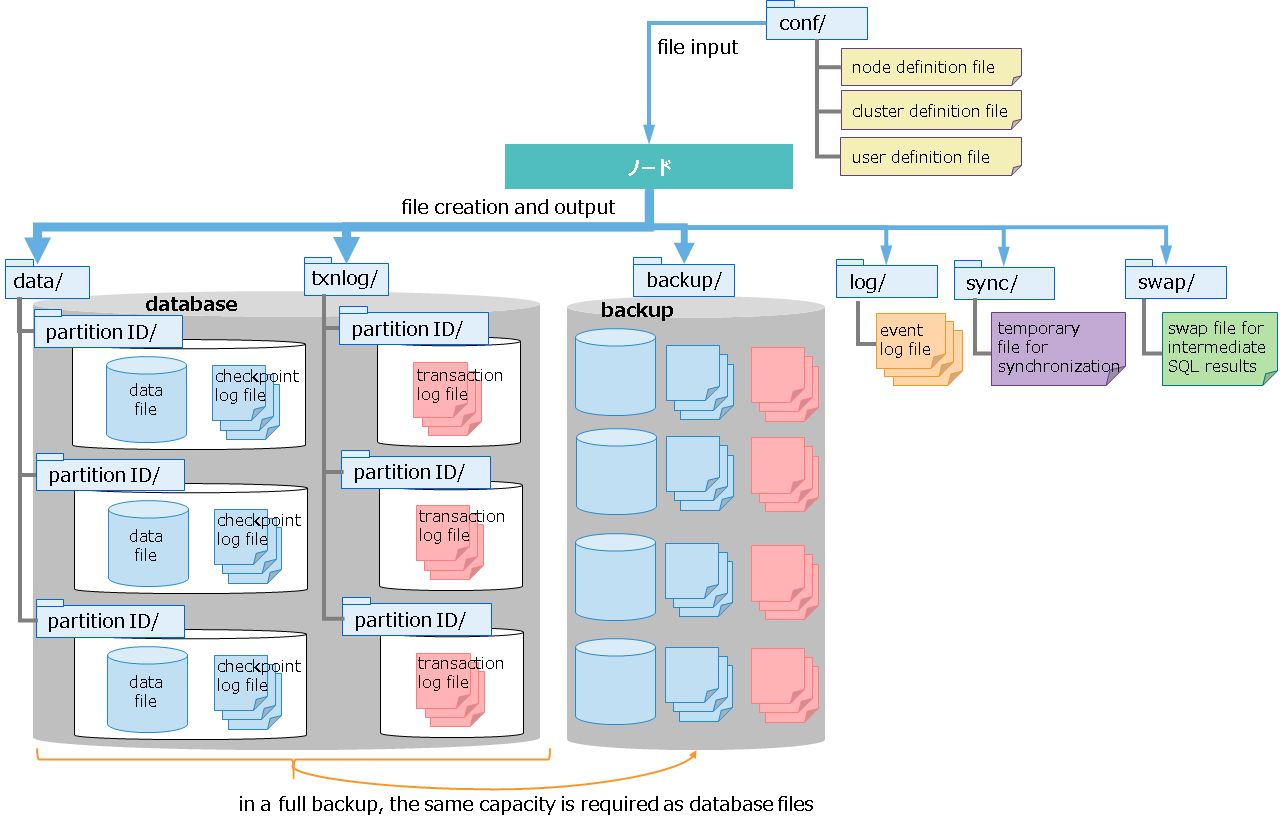

File configuration

Files that nodes manage include definition files and database files. This section describes file configuration and content.

-

definition files

name description node definition file

(gs_node.json)definition file which sets node resources and behavior. cluster definition file

(gs_cluster.json)definition file which sets cluster resources and behavior for cluster configuration. user defined file

(password)file for registering administrative users. -

database files

name description data file This is a file for storing data.

A data file can be placed after splitting it into multiple files.

number of files

- One data file is created for each partition.

-To place a data file after splitting it, as many files as the number of splits are created for each partition.checkpoint log file This is a file for storing block management information for a database.

Block management information is written in smaller batches each time checkpoint is performed.

By default, a maximum of ten files are created for each partition. The number of files can be adjusted in /checkpoint/partialCheckpointInterval in the node definition file.transaction log file This is a file for storing transaction logs.

Each file stores logs for those transactions executed between the start of a previo checkpoint and the start of the next checkpoint.

By default, a maximum of three files (current log file and previous two generations of log files) are created per partition. -

other files

name description backup files files created when an online backup is started. Backed-up database files and files for backup information are collectively called backup files. event log files files for storing logs for node behavior, to which error and warning messages are issued. temporary file for synchronization file for temporarily storing synchronous data during synchronization through partition relocation. swap file for intermediate SQL results file for temporarily storing intermediate SQL results. Files are deleted in the event of node outage.

As mentioned above, each partition has its own database files consisting of one data file, checkpoint log files, and transaction log files.

In transaction log processing, data is sequentially written onto transaction log files to sync update, which provides transaction assurance. In checkpoint processing, blocks of updated data on memory is regularly saved in data files and checkpoint log files, which provides data persistence.

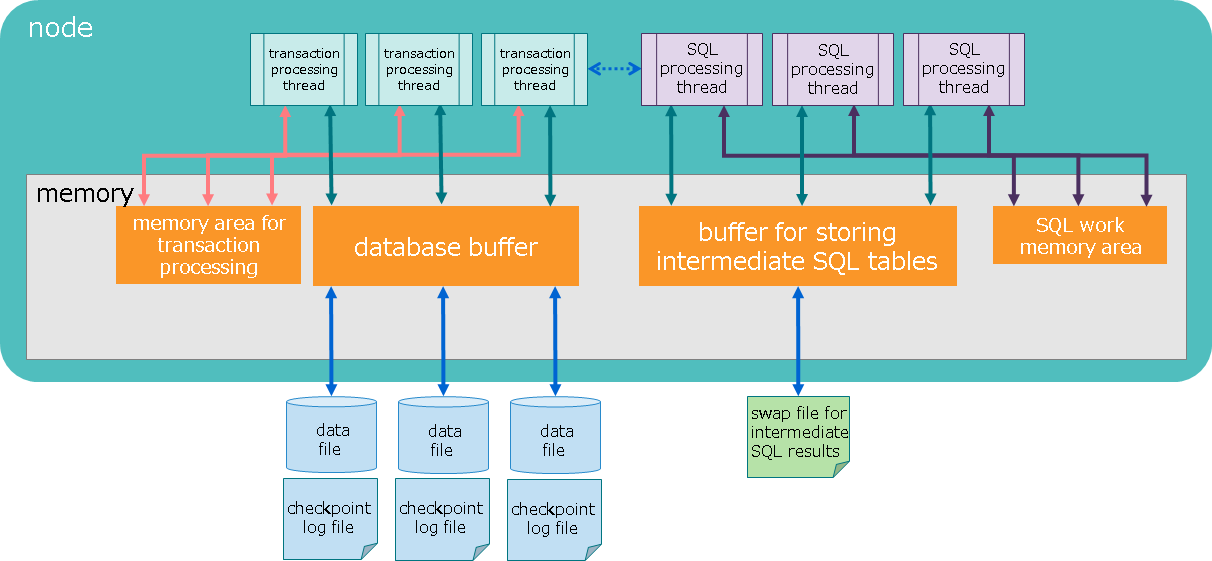

Memory structure

GridDB is provided with various memory areas to reduce disk I/O and make processing efficient.

| name | description |

|---|---|

| database buffer | I/O buffer for caching retrieved data. It caches data image (block) retrieved from data files in memory. A larger database buffer size means blocks are more easily cached in memory, which improves search and registration performance. Each node has one database buffer area; as such, multiple transaction processing threads share in its use. |

| memory area for transaction processing | area used by transaction processing threads which perform row registration and search processing. |

| buffer for storing intermediate SQL results | area for storing intermediate results while SQL processing is taking place. If the size of the intermediate results exceeds the buffer memory size limit, those results are temporarily exported in swap files for intermediate SQL results. |

| SQL work memory area | area for temporarily storing intermediate results in SQL processing, including join and aggregation operations. |

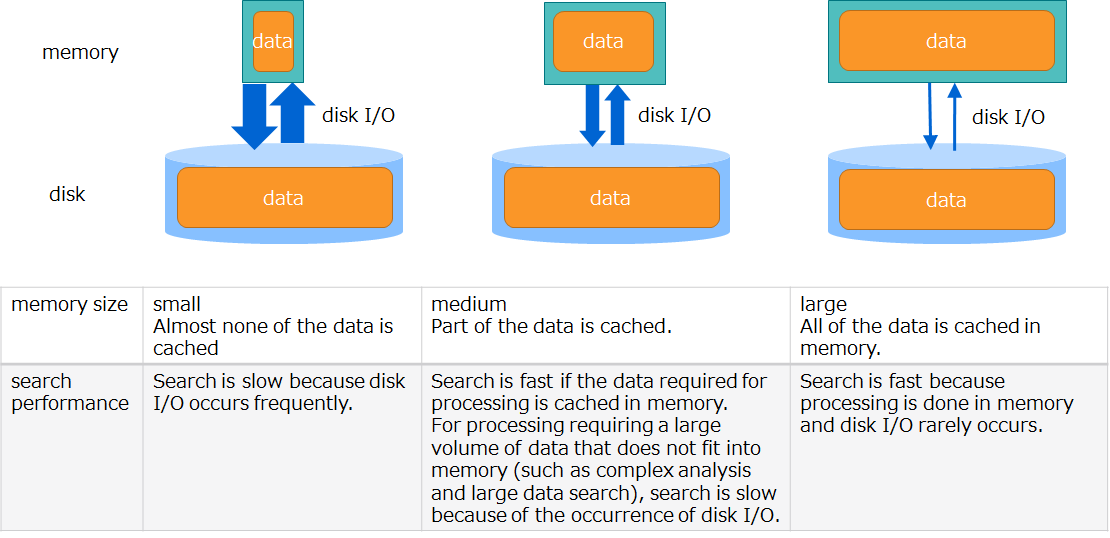

GridDB is a hybrid-type database which uses both memory and disks. It accelerates access using memory as data cache and stores data in disks to manage large volumes of data. A larger memory size means larger volumes of data are cached in memory, which reduces disk I/O and improves performance.

Cluster management

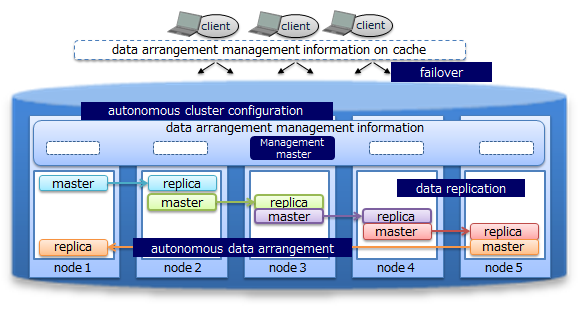

GridDB configures a cluster with multiple nodes

Nodes have two roles: “master” and “follower.” A master manages the entire cluster. All non-master nodes act as “followers.”

When a cluster starts, one of the nodes composing a cluster will always be a “master.” Followers then perform cluster processing including synchronous processing according to the instructions from the master. A master node is automatically selected by GridDB. If a master node fails, a new master will be automatically selected from a set of follower nodes to keep the cluster running.

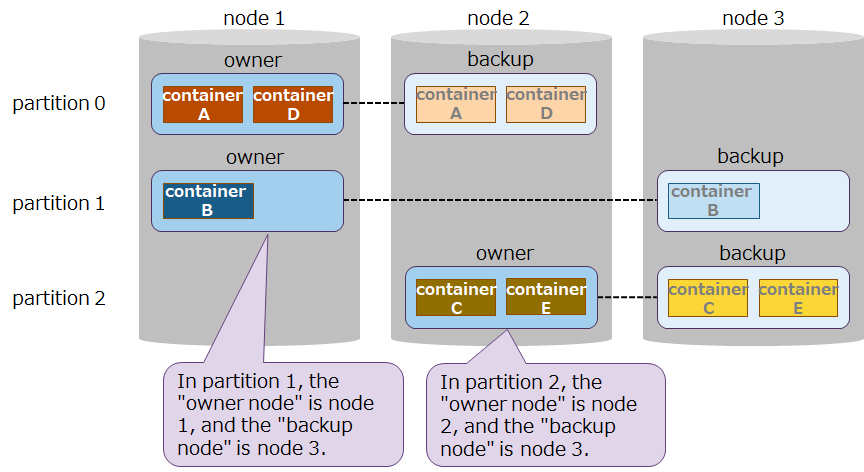

Further, within a cluster, GridDB duplicates and multi-configures data (replicas) on multiple nodes. Among replicas, master data is referred to as an owner, whereas duplicated data is referred to as backups. Regardless of which node composing a cluster fails, GridDB will always continue processing through the use of replicas. Moreover, the system automatically relocates data after a node failure (autonomous data placement) without a need for special production operations. Data placed in the failed nodes is restored from replicas and then relocated to reach the automatically set number of replicas.

Replicas are created per partition. If nodes are either added to a cluster or deleted from a cluster, partitions will automatically be reassigned and relocated to distribute replicas to each node.

— Physical design —

Describes the design items for GridDB needed to implement the non-functional requirements of the system

Points to consider when designing

As non-functional requirements an established system should meet, IPA (Information-Technology Promotion Agency, Japan) has formulated the “Non-Functional Requirements Grades,” part of which are cited below:

“(source: Non-Functional Requirements Grades Usage Guide [Description Manual], p. 7) (c) 2010 IPA

| Non-functional requirements: Major category | Example requirements | Example implementation methods |

|---|---|---|

| Availability | - Operation schedule (operating hours, outage plans, etc.) - Operation objectives for failure occurrences, disasters, etc. |

- Equipment redundancy, backup center establishment, etc. -Restoration/recovery methods, and restoration/recovery structure establishment |

| Performance and scalability | - Business volume, and estimates on future growth - Attributes of business which will be systematized (peak times, normal operation, degraded operation, etc.) |

- Sizing based on performance objectives - Capacity planning (future-oriented equipment and network sizing and allocation) |

| Operability and maintainability | - System operation levels required for system operation - Response levels in the event that a problem occurs |

- Establishment of monitoring methods and backup methods - Distribution of roles, organizational structure, training, and preparation of manuals in anticipation of problems that may arise |

| Migratability | - System migration period and schemes - Types of assets to be migrated, and volume of migration |

- Migration schedule establishment, migration tool development - Migration organization establishment, migration rehearsal |

| Security | Usage restrictions - Unauthorized access prevention |

- Access restrictions, data confidentiality - Fraud tracking, monitoring, detection - Information security training for operators, etc. |

To satisfy these non-functional requirements, GridDB needs the following design items:

- Data space design

- Memory space design

- Container design

- Cluster configuration design

- Network design

- Security design

- Monitoring design

- Backup design

Specific design varies considerably depending on the level and content of system requirements; this chapter focuses on points of design that should be considered to leverage “availability” and “performance and scalability” in the table above characterizing GridDB.

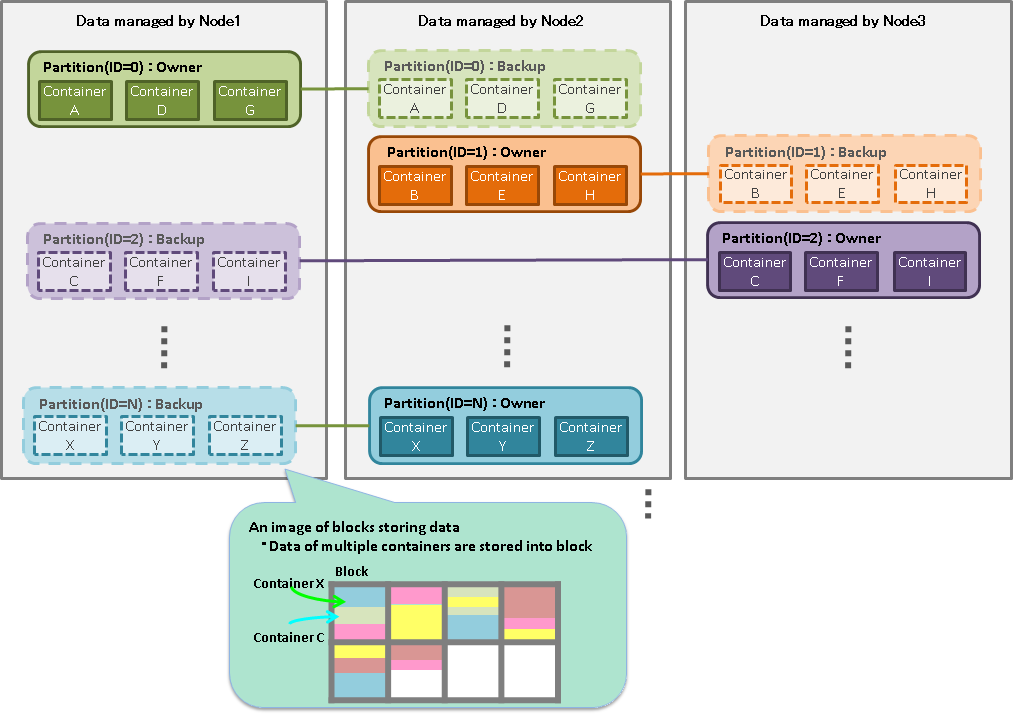

Data space design

In GridDB, data is managed per unit of block, container, table, row, and partition. Data space design using these data units will be a crucial factor in determining “availability” and “performance and scalability”.

- Relationship between a block and a container

- A block is the smallest unit of data management where row data and meta information for containers are stored. A block stores data for multiple containers.

- Relationship between containers and a partition

- A partition which a container belong to is determined by a cluster based on a hash value calculated from a container name. The relationship between a container and a partition is permanent and does not change once a partition is determined upon creating a container.

- Relationship between a partition and a node

- A partition is a unit of data placement between clusters. The placement of a partition is automatically determined by a cluster. The relationship between a partition and a node is fluid and a partition may move to other nodes through autonomous data placement on the occurrence of a failure.

- Access to a container in the same partition

- Operations on a set of containers belonging to the same partition are processed by one processing thread (transaction service). Therefore, operations on this set of containers are subsequently executed.

[notes]

- Node affinity features can force related containers to be stored in the same partition. This can reduce load during network access by tables placed in other nodes within the same cluster when SQL tables are joined in GridDB.

The next several subsections summarize points of design for the following items:

- block size

- number of partitions

- number of processing threads (transaction services and SQL services)

- checkpoint processing

- file configuration

Block size

A block is a physical data unit for storing row data and meta information for containers among others and is the smallest unit of disk I/O.

Select the block size from 64KB, 1MB, 4MB, 8MB, 16MB and 32MB. The default is 64 KB. Normally, the default works fine; no change is necessary.

Note that the limit of the number of columns in a container varies depending on the block size. To create more columns than the limit of the number of columns for the block size of 64 KB, change the block size. For details about upper limits, see the section “System limiting values” in the GridDB Features Reference.

Data stored in a block

Data stored in a block are of several types, including row data, meta information for containers, and index data. Data is categorically organized by the types of data and stored in a block.

| block type | description |

|---|---|

| metaData | block for storing meta information for containers. |

| rowData | block for storing row data for containers (without expiration settings). |

| mapData | block for storing index data for containers (without expiration settings). |

| batchFreeRowData | block for storing row data for containers (with expiration settings). |

| batchFreeMapData | block for storing index data for containers (with expiration settings). |

One block stores data for multiple containers. Data is stored in the order that it was registered or updated by an application. Storing data which are close in time or type in the same block will localize data and improve memory efficiency. It executes search processing for time-series data on the condition of elapsed time with less resources and at high speed.

related parameters

-

cluster definition file (gs_cluster.json)

parameter initial value description changes made after startup /dataStore/storeBlockSize 64KB block size not allowed

[notes]

- After the initial launch of nodes, block size cannot be changed.

- Specify the same block size for the entire nodes configuring a cluster.

- The heading “changes made after startup” in the table above denotes whether the parameter can be changed after launching.

- not allowed: Once nodes are launched, the parameter cannot be changed. Initialization of database files is necessary to change parameters.

- The data affinity feature makes it possible to store only those data that are highly relevant to each block. This facilitates the localization of data access and thereby can increase the memory hit rate.

- Also, by specifying the string “#unique” for hint information provided by the data affinity feature, it is possible to occupy blocks on a per-container basis and place data. This can speed up scanning and data deletion performed for each container.

- For details on data affinity, see the “GridDB Features Reference” .

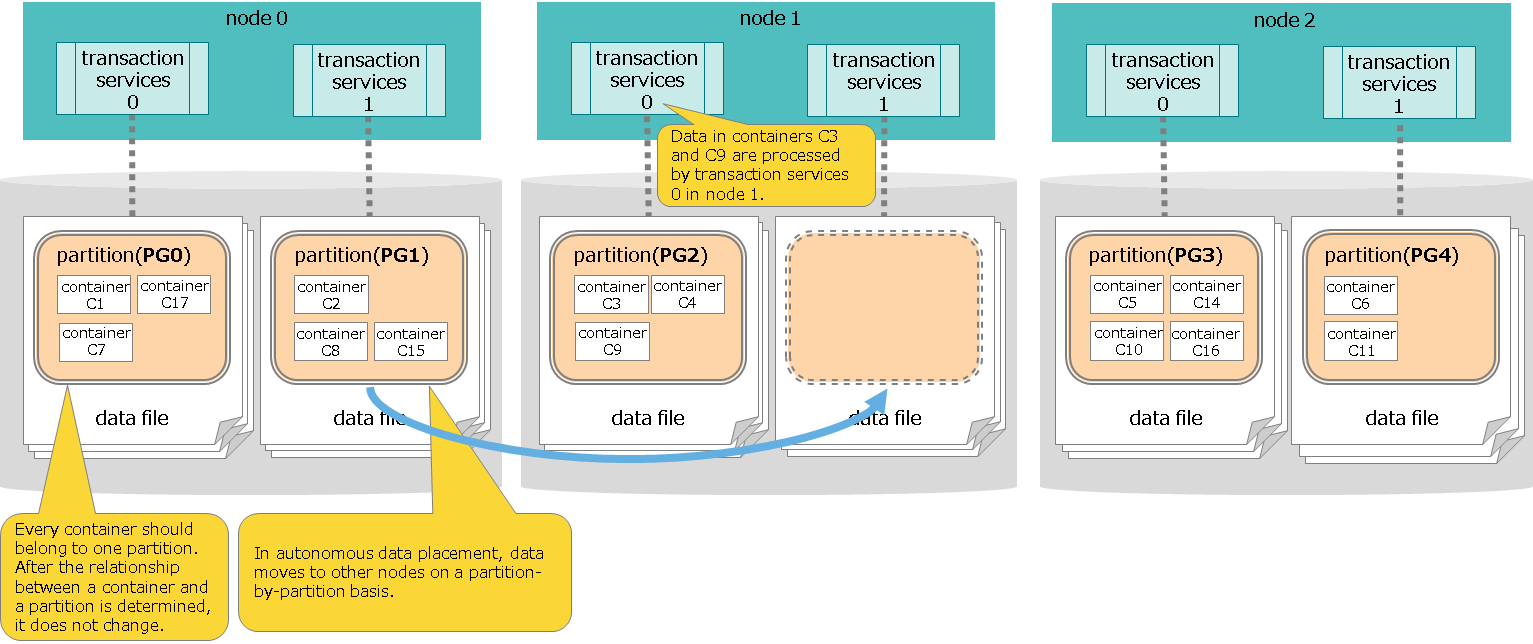

Number of partitions

As one way to manage data in a cluster, containers are managed using data boxes called partitions.

- Every container should belong to one partition, which stores multiple containers.

- Containers are assigned to transaction services and database files on a per-partition basis.

The default number of partitions is 128. Normally, the default works fine. If, however, the following conditional expression is not met, increase the number of partitions.

number of partitions >= concurrency of transaction services X number of configuration nodes in a cluster

- If the conditional expression is not met, transaction services with no partitions assigned will be created.

Set the number of partitions so as to meet the expression above.

- [Example] If concurrency = 32 and number of nodes =5,

- The default number of partitions (128) does not meet the conditional expression: 128 < (32 x 5 =160)

- Set the number of partitions to 160 or over.

- [Example] If concurrency = 32 and number of nodes =5,

related parameters

-

cluster definition file (gs_cluster.json)

parameter initial value description changes made after startup /dataStore/partitionNum 128 number of partitions not allowed -

node definition file (gs_node.json)

parameter initial value description changes made after startup /dataStore/concurrency 4 concurrency of transaction processing threads possible (Restart nodes)

[notes]

- The heading “changes made after startup” in the table above denotes whether the parameter can be changed after launching.

- not allowed: Once nodes are launched, the parameter cannot be changed. Initialization of database files is necessary to change parameters.

- possible (Restart nodes): The parameter can be changed by modifying the definition file specifying the parameter and then restarting the nodes.

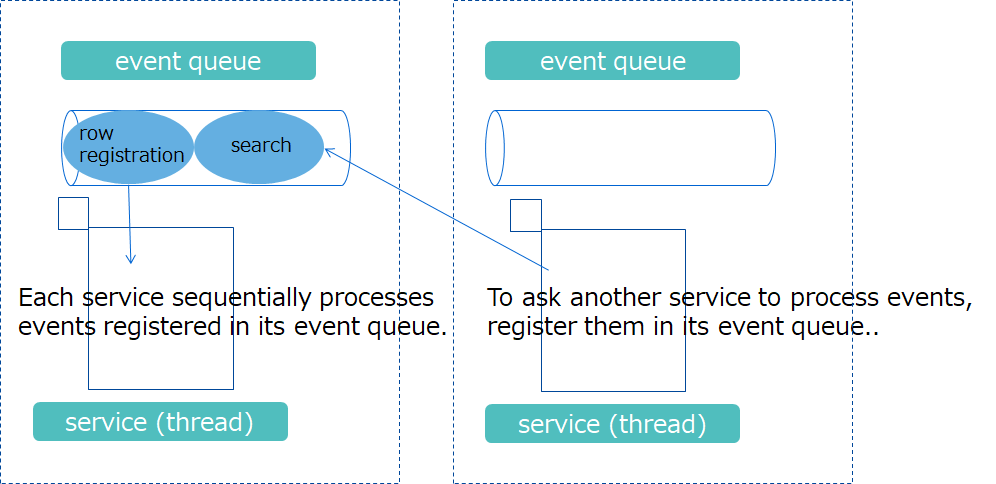

Number of processing threads

A GridDB node consists of one process. Within a process are multiple threads (called services) running various operations.

This subsection describes how to determine the concurrency of “transaction services” and “SQL services” among the services available.

| Thread name | Number of threads (default) |

What threads do |

|---|---|---|

| Transaction services | 4 (default can be set) | Executes transaction processing, including row registration and search operations, and database file write operations, among others. |

| Checkpoint services | 1 | Executes checkpoint processing |

| Cluster services | 1 | Executes cluster configuration processing, including heartbeat and node participation and departure. |

| Sync services | 1 | During partition relocation, executes long-term and short-term synchronization operations for data synchronization. |

| System services | 1 | Accepts requests for production and management operations. |

| SQL services | 4 (default can be set) | Executes SQL processing |

A processing done by each is called an “event.” Each service has one event queue and sequentially processes those events registered in the event queue. For one service A to ask another service B to process events, service A registers them in the event queue which service B has.

- Types of events that can be registered in an event queue vary depending on the types of threads.

- Events registered in an event queue are sequentially processed by the corresponding threads. They are not processed in parallel.

- Information on ongoing events can be checked using the operation tool gs_sh. For details, see GridDB Operation Tools Reference.

[notes]

- Only transaction services and SQL services can set the number of threads using a node definition file.

The threads that especially affect the parallel performance in search and registration are the threads that run transaction processing (transaction services) and threads that run SQL processing (SQL services). Set the concurrency of these processing threads to match the number of CPU cores of the machine that runs nodes.

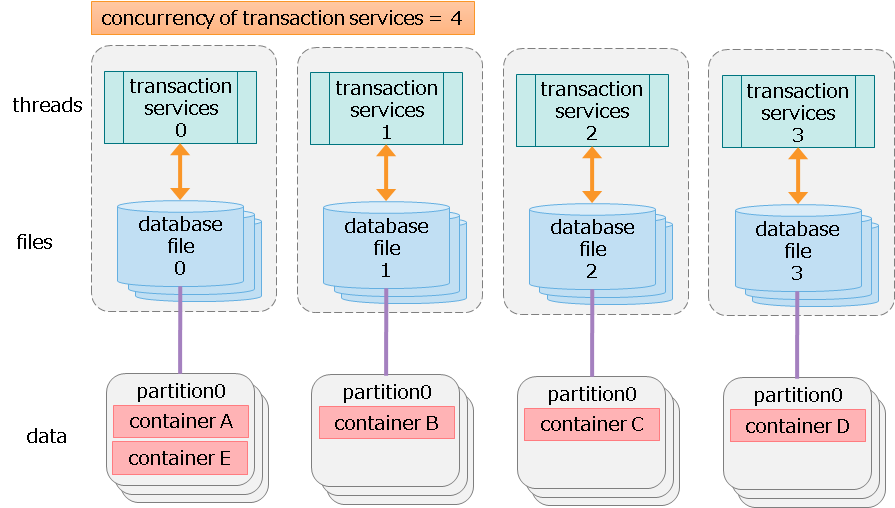

- concurrency of transaction services

- Transaction services are the threads that execute row registration and search operations, database file write processing, and other processing.

- Increasing the concurrency of transaction services improves parallel accessibility to multiple containers.

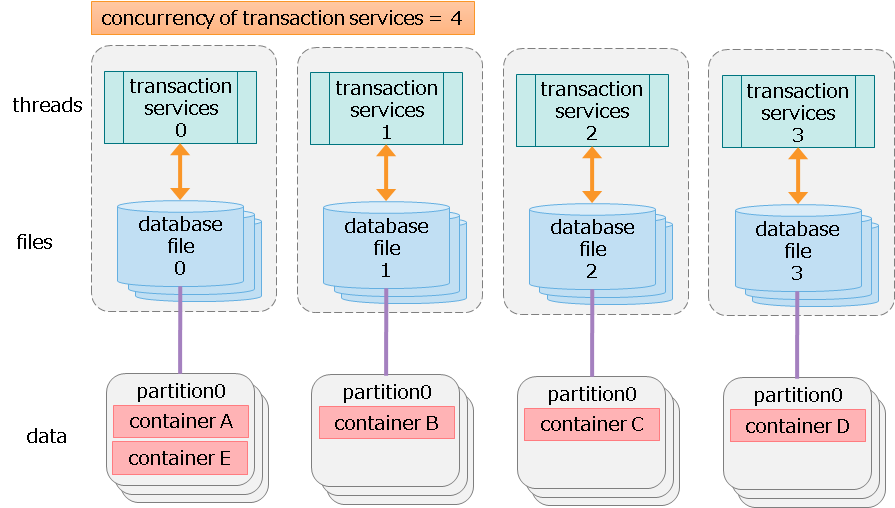

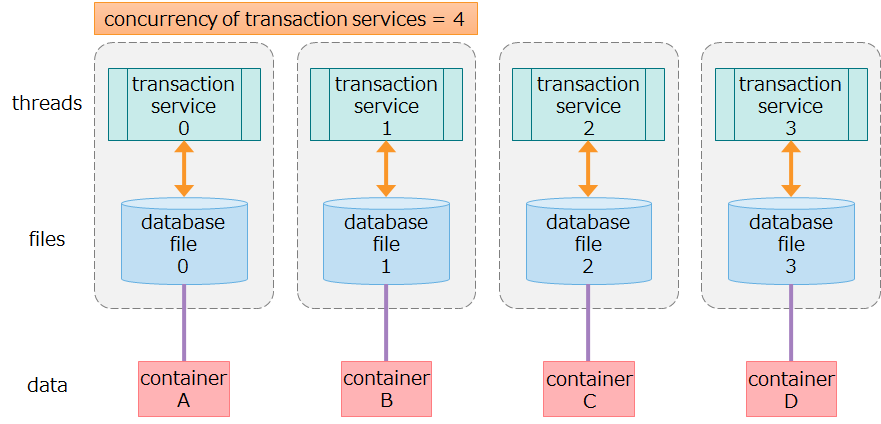

- Transaction services (threads) and database files (files) are in a one-to-one relationship, the latter of which are also in a one-to-one relationship with containers (data) as shown in the figure below:

- Data in container A is processed by transaction service 0 and stored in database file 0. Transaction services 0 and 1 never access data in container A simultaneously.

- Separating resources for data, threads, and files eliminates resource exclusion and locking conflicts, enabling fast processing.

Relationship of data, threads, and files managed by one node

- concurrency of SQL services

- SQL services are the threads that run SQL processing.

- In SQL services, data and files are not related with each other as in transaction services. To access database files or run other transaction services, use transaction services.

Set the concurrency considering the following points:

- It is recommended to increase the concurrency of transaction services to match the number of CPU cores of the machine running the nodes.

- Increasing concurrency improves parallel accessibility to multiple containers.

- Note that increasing concurrency does not improve the processing performance of the operation on the same container, which is handled by one thread.

- It is recommended to make sure that the total of the concurrency of transaction services and SQL services does not exceed the number of the machine’s CPU cores.

- concurrency of transaction services (/dataStore/concurrency) + concurrency of SQL services (/sql/concurrency)

[notes]

- Concurrency of transaction and SQL services can changed by restarting the node.

related parameters

-

node definition file (gs_node.json)

parameter initial value description changes made after startup /dataStore/concurrency 4 concurrency of transaction processing threads possible (Restart nodes) /sql/concurrency 4 concurrency of SQL processing threads possible (Restart nodes)

[notes]

- The heading “changes made after startup” in the table above denotes whether the parameter can be changed after launching.

- not allowed: Once nodes are launched, the parameter cannot be changed. Initialization of database files is necessary to change parameters.

- possible (Restart nodes): The parameter can be changed by modifying the definition file specifying the parameter and then restarting the nodes.

Checkpoint processing

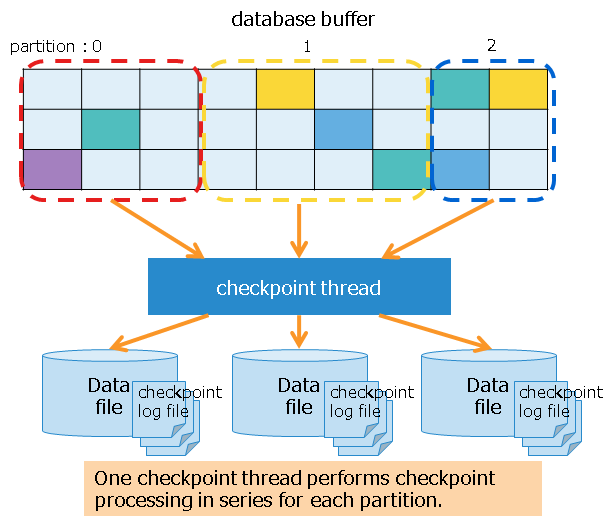

Checkpoint processing refers to a processing in which updated blocks in a database buffer are written to data files and checkpoint log files.

In checkpoint processing, parameters are available to set the run cycle of checkpoints.

While checkpoint is running, block management information is split into chunks and written to checkpoint log files. The number of splits can be specified by the parameter, but in most cases, you do not need to change the default value. Setting a higher value can increase the amount of writing done to checkpoint log files for each writing session, but at the same time could possibly increase the recovery time taken during a node startup.

A checkpoint processing is run on the following occasions:

| occasion | description |

|---|---|

| regular run | automatically run at regularly scheduled intervals (Specify the cycle using a parameter. Regular run can be temporarily deactivated.). |

| manual run | run when the user runs the gs_checkpoint command.). |

| node start/outage | automatically run after a recovery process during a node startup or when stopping nodes in a normal manner. |

| long-term synchronization start/end | automatically run when starting/ending long-term synchronization. |

Additionally, it is possible to specify whether to compress data files. Compressing data files can reduce the storage cost that increases in proportion to the amount of data. For details on the data compression feature, see the section on “data block compression” in GridDB Features Reference.

related parameters

-

node definition file (gs_node.json)

parameter initial value description changes made after startup /checkpoint/checkpointInterval 60s run cycle of checkpoints possible (Restart nodes) /checkpoint/partialCheckpointInterval 5 number of splits for processing to write block management information to checkpoint log files possible (Restart nodes) /dataStore/storeCompressionMode NO_COMPRESSION setting for exporting compressed data files

do not compress: “NO_COMPRESSION” or 0

compress: “COMPRESSION” or 1possible (Restart nodes)

[notes]

- The heading “changes made after startup” in the table above denotes whether the parameter can be changed after launching.

- possible (Restart nodes): The parameter can be changed by modifying the definition file specifying the parameter and then restarting the nodes.

File configuration

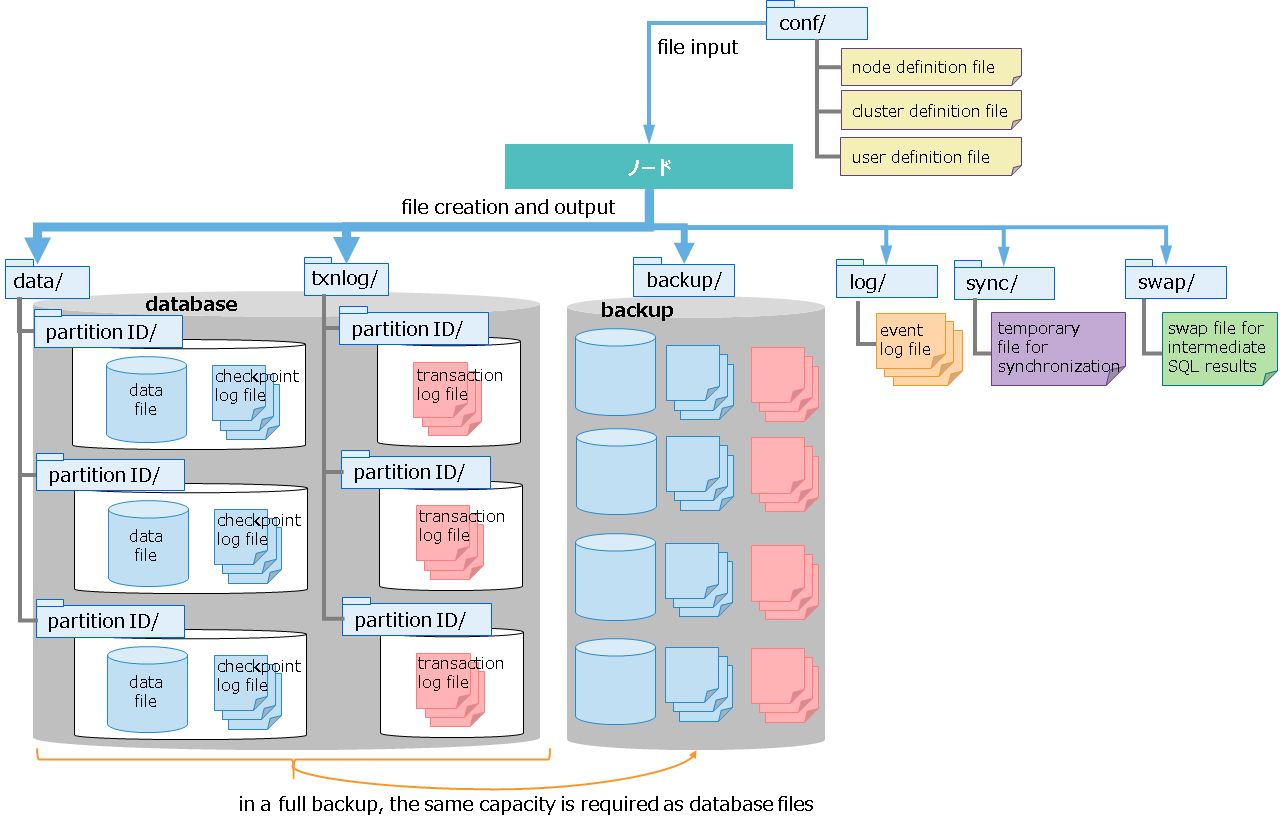

Files that GridDB nodes create or output while running include database files and backup files.

Database files and backup files are characterized by a large file size, and their disk I/O have a great impact on performance; specify the appropriate deployment directory for these files, considering storage capacity and I/O performance.

The default deployment directory is the directory under the GridDB home directory (/var/lib/gridstore).

- deployment directory for database files

- Data files, checkpoint log files, and transaction log files are collectively called database files.

- Specify the DB directory to store data files and checkpoint log files, and specify the txnlog directory to store transaction files. Placing the DB and txnlog directories on separate physical disks can enable load balancing among disks.

- The number of directories equal to the that of partition IDs are automatically created under DB and txnlog directories. Placing each directory thus created on separate physical disks enables load balancing among disks.

- deployment directory for backup files

- Set up the deployment directory for backup files to deploy them in a separate physical disk from that of database files, considering physical disk failures.

- Performing a full backup requires the same capacity as database files.

list of output files

-

database files

name description file name data file file for storing data.

number of files

-One data file is created for each partition by default.

-The data file can be split by modifying the settings. The number of splits of the data file can be defined in /dataStore/dbFileSplitCount in the node definition file.

file size

- If the free space in the file runs out, the size of the file is automatically extended./<PartitionID>/<PartitionID>_part_<n>.dat

(n: consecutive number from 0)checkpoint log file file for storing block management information for a database.

When checkpoint is running, block management information is split into chunks and then written to checkpoint log files.

number of files

- By default, a maximum of 10 files are retained for each partition. The number of files can be adjusted by /checkpoint/partialCheckpointInterval in the node definition file./<PartitionID>/<PartitionID>_<CheckpointNumber>.cplog transaction log file file for storing transaction log.

Each file stores logs for those transactions executed between the start of a previous checkpoint and the start of the next checkpoint.

number of files

- By default, a maximum of three files (current log file and previous two generations of log files) are retained per partition./<PartitionID>/<PartitionID>_<CheckpointNumber>.xlog -

other files

name description backup files files created when an online backup is started. Backed-up data files, checkpoint log files, transaction log files, and files for backup information are collectively called backup files. event log files files for storing logs for node behavior, to which error and warning messages are issued. temporary file for synchronization file for temporarily storing synchronous data during synchronization through partition relocation. swap file for intermediate SQL results file for temporarily storing intermediate SQL results. Files are deleted in the event of node outage.

related parameters

-

node definition file (gs_node.json)

The file origin of a node definition file is the GridDB home directory if the directory path is relative.

parameter initial value description changes made after startup /dataStore/dbPath data deployment directory for data files and checkpoint log files possible (Restart nodes) /dataStore/transactionLogPath txnlog deployment directory for transaction log files possible (Restart nodes) /dataStore/backupPath backup deployment directory for backup files possible (Restart nodes) /dataStore/syncTempPath sync deployment directory for temporary files for synchronization possible (Restart nodes) /system/eventLogPath log deployment directory for event log files possible (Restart nodes) /sql/storeSwapFilePath swap deployment directory for swap files for intermediate SQL results possible (Restart nodes) /dataStore/dbFileSplitCount none number of data file splits

Example: If the number of data file splits is equal to four, data files are split into four files for each partition to create /<PartitionID>/<PartitionID>_part_0.dat -/<PartitionID>/<PartitionID>_part_3.dat.not allowed

[notes]

- The heading “changes made after startup” in the table above denotes whether the parameter can be changed after launching.

- not allowed: Once nodes are launched, the parameter cannot be changed. Initialization of database files is necessary to change parameters.

- possible (Restart nodes): The parameter can be changed by modifying the definition file specifying the parameter and then restarting the nodes.

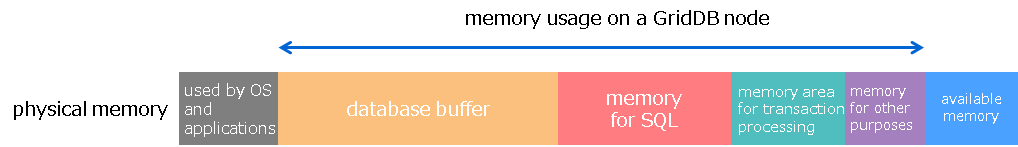

Memory space design

GridDB is a hybrid-type database which uses both memory and disks. It accelerates access using memory as data cache and stores data in disks to manage large volumes of data.

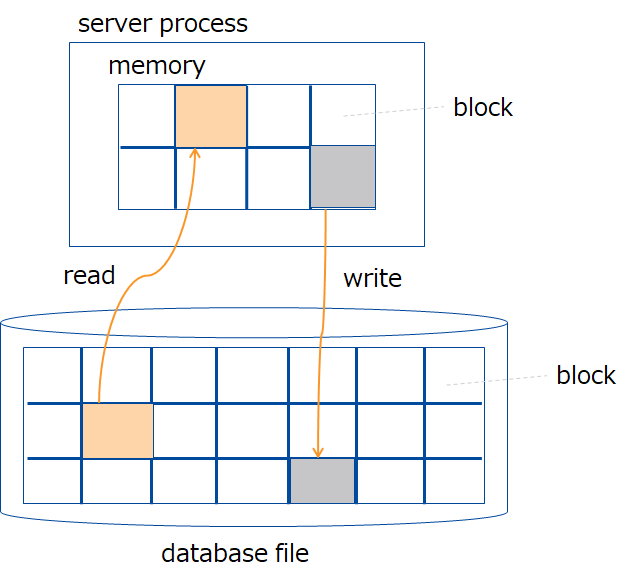

As shown in the figure below, a larger memory size means larger volumes of data are cached in memory, which reduces disk I/O and improves performance. Memory size thus has a great impact on the performance of GridDB. Therefore, memory space should be designed with the system’s performance requirements and the amount of data in mind.

GridDB nodes have various types of buffers and memory areas for different uses. The main types of memory are:

| name | description |

|---|---|

| database buffer | I/O buffer for caching retrieved data. caches data image (block) retrieved from data files in memory. A larger database buffer size means blocks are more easily cached in memory, which improves search and registration performance. Each node has one database buffer area; as such, multiple transaction processing threads share in its use. |

| memory area for transaction processing | area used by transaction processing threads which perform row registration and search processing. |

| buffer for storing intermediate SQL results | area for storing intermediate results while SQL processing is taking place. If the size of the intermediate results exceeds the buffer memory size limit, those results are temporarily exported in swap files for intermediate SQL results. |

| SQL work memory area | area for temporarily storing intermediate results in SQL processing, including join and aggregation operations. |

In designing memory space, it is necessary to design the upper size limit and other related parameters for each memory area. A key point here is the memory sizes of “database buffers” and “memory areas for SQL processing.”

The size of “database buffers” has the greatest impact on the system’s performance. If there is space in physical memory, it is recommended to assign as much memory size as possible to database buffers.

-

Considering the characteristics of data stored in GridDB, classify data into frequently used data and infrequently used data.

- Often, it is advisable to classify data based on the elapsed time for time-series data.

- Examples

- frequently used data → all sensor data from the previous hour

- infrequently used data → a year-old data

- Data for the past year is stored, but the search range used for regular tasks is limited to the data from the previous month.

- Production data on five factories is stored, but only one factory’s data requires high-speed searching.

- To classify data based on the elapsed time, look for the data which most requires performance and examine the type and age of such data.

-

Set the size of a database buffer so as to allow for the amount of frequently used data.

As for the size of a memory buffer for SQL processing, conduct its assessment with a typical SQL query used by the system and set the memory size.

- required design items

-

Determine the buffer size to match the amount of data to be used.

buffer name Guidelines for design database buffer Estimate the size of the data in the range frequently searched for and set the memory size. SQL-related buffer Conduct its assessment with an SQL query used by the system and set the memory size.

-

-

design items to be changed as needed

-

Change the buffer size depending on the operational status.

buffer name The buffer size needs to be changed, if: memory area for transaction processing a memory shortage error [CM_MEMORY__LIMIT] occurs due to TQL or SQL operations.

-

The next several subsections summarize points of design for the following items:

- database buffer

- memory area for transaction processing

- memory area for SQL processing

- Memory for other purposes

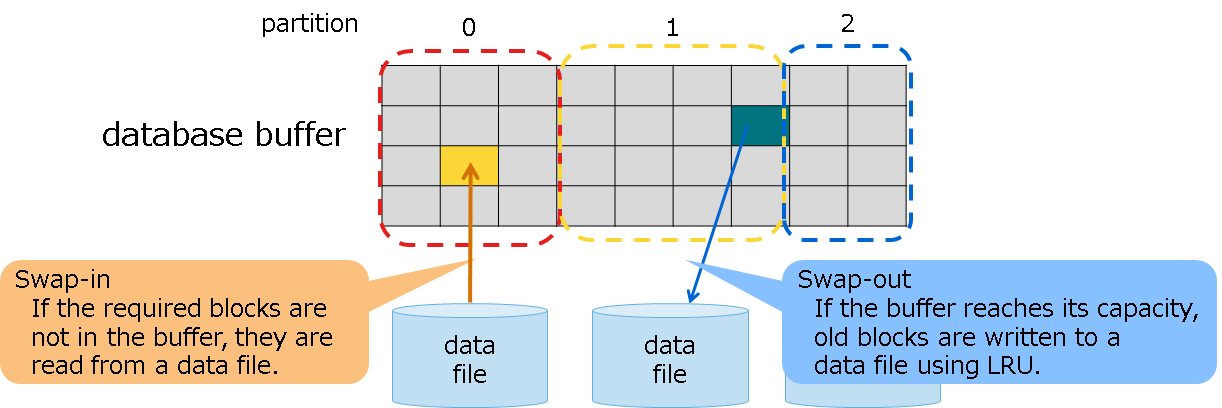

Database buffer

This is an area for caching in memory the blocks read from a data file.

A larger database buffer size means data blocks reside in memory, which improves search and registration performance.

Specify the upper buffer size limit using a node definition file. If the buffer reaches its capacity, old blocks are written to a data file using LRU to create a space and read the remaining blocks from files. Read operations from files and write operations to files are called swapping. If blocks are in use and hence cannot be loaded to create a space, the buffer size is temporarily expanded. If blocks are in use and hence cannot be loaded to create a space, the buffer size is temporarily expanded. When the processing ends and a space is no longer needed, the buffer size is reduced to its upper limit.

The internal buffer is partitioned on a per-partition basis before use. The size allocated to each partition is dynamically determined by nodes according to the amount of data and accessibility status.

Immediately after starting up nodes, no blocks are yet read into a database buffer. Therefore, in search and registration processing immediately after a startup, swap processing occurs frequently as a result of reading blocks, which may degrade the speed performance.

related parameters

-

node definition file (gs_node.json)

parameter initial value description changes made after startup /dataStore/storeMemoryLimit 1024MB upper size limit of a database buffer allowed (online) [notes]

- The upper size limit can also be changed online while starting nodes. To make the change permanent, stop nodes and modify the node definition file.

- To the upper size limit of a database buffer, allocate 60 to 70% of the physical memory available for GridDB. After launching, check the amount of swapping. If there is an excessive amount of swapping, a buffer shortage occurs which may downgrade performance. Consider adding memory and changing the upper size limit of a database buffer.

- For details about swapping, see the column “How to check related information” in the table below to check information about read and write in swapping.

- The heading “changes made after startup” in the table above denotes whether the parameter can be changed after launching.

- allowed (online): The parameter can be changed online while starting nodes. To make the change permanent, modify the node definition file.

How to check related information

-

Information on the setup value and the current value can be checked by running the operation command gs_stat while running nodes.

display item for gs_stat in JSON format setup value/current value description /performance/storeMemoryLimit setup value upper size limit of a database buffer (in bytes) /performance/storeMemory current value current size of a database buffer (in bytes) /performance/swapRead current value number of reads from files in swapping (total) (unit: number of times) /performance/swapReadSize current value read size from files in swapping (total) (in bytes) /performance/swapReadTime current value load time from files in swapping (total) (in milliseconds) /performance/swapWrite current value number of writes to files in swapping (total) (unit: number of times) /performance/swapWriteSize current value write size to files in swapping (total) (in bytes) /performance/swapWriteTime current value write time to files in swapping (total) (in milliseconds) [example of executing the command]

$ gs_stat -u admin/admin -s 192.168.0.1:10040 { : "performance": { "storeMemory": 4294967296, "storeMemoryLimit": 4294967296, "storeTotalUse": 9792126976, "swapRead": 2072705, "swapReadSize": 135836794880, "swapReadTime": 3920574, "swapWrite": 2172, "swapWriteSize": 142344192, "swapWriteTime": 601, :

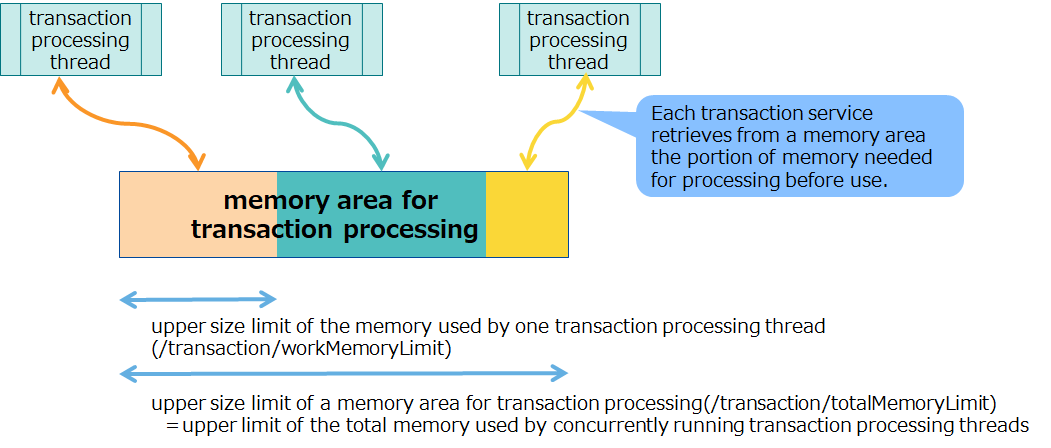

Memory area for transaction processing

This is a memory area used in transaction services where row registration and search processing are performed. Each transaction service retrieves from a memory area the portion of memory needed for processing before use. After one transaction process ends, the memory that has been made available is released back to the memory area.

The number of transaction services (concurrency) is equivalent to /dataStore/concurrency (4 by default).

If all the memory in a memory area is in use, and memory required for transaction services cannot be reclaimed, an error will occur.

A transaction may use a large amount of memory in such processing as running tens of millions of hits of queries using TQL, registering huge sized BLOBs, and registering a bulk of huge sized data using MultiPut. Set the upper size limit of a memory area according to the content of transaction processing and the number of transaction services (concurrency: /dataStore/concurrency).

related parameters

-

node definition file (gs_node.json)

parameter initial value description changes made after startup /transaction/totalMemoryLimit 1024MB upper size limit of a memory area for transaction processing possible (Restart nodes) /transaction/workMemoryLimit 128MB upper size limit of the memory used by one transaction processing thread possible (Restart nodes) [notes]

- The upper size limit cannot be changed online. To change the upper size limit, stop nodes and modify the node definition file.

- If the memory needed for processing cannot be reclaimed, an error will occur. Check the current value set for the upper memory size limit and consider adding memory and changing the upper limit.

- The heading “changes made after startup” in the table above denotes whether the parameter can be changed after launching.

- possible (Restart nodes): The parameter can be changed by modifying the definition file specifying the parameter and then restarting the nodes.

How to check related information

-

Information on the setup value can be checked by running the operation command gs_paramconf while running nodes.

display item for gs_paramconf in JSON format setup value/current value description /transaction/totalMemoryLimit setup value upper size limit of a memory area for transaction processing (in bytes) /transaction/workMemoryLimit setup value upper size limit of the memory used by one transaction processing thread (in bytes)

Memory area for SQL processing

There are two different types of memory areas for SQL processing: the buffer for storing intermediate SQL results and SQL work memory areas.

The buffer for storing intermediate SQL results is a memory for storing the data in the tables for intermediate results of such tasks in SQL processing as scan and join. If the size of the intermediate results exceeds the buffer memory size limit, those results are temporarily exported in swap files for intermediate SQL results.

When executing analytical queries on large-volume data using an SQL, it is recommended to set the value of this buffer as high as possible, considering the balance with the database buffer.

SQL work memory is memory used in processing tasks in SQL processing. There is no need to change the default value for work memory.

However, be sure to set the sizes of a buffer for storing intermediate results and of work memory so as to satisfy the following expression:

- upper limit of a buffer for storing intermediate results (storeMemoryLImit) >= upper limit of work memory (workMemoryLImit) * concurrency for SQL processing

Unless the above expression is satisfied, exporting to swap files for intermediate results takes place often.

related parameters

-

node definition file (gs_node.json)

parameter initial value description changes made after startup /sql/storeMemoryLimit 1024MB upper size limit of buffer for storing intermediate SQL results possible (Restart nodes) /sql/workMemoryLimit 32MB upper size limit of an SQL work memory buffer possible (Restart nodes) /sql/workCacheMemory 128MB of the memory specified in workMemoryLimit, the portion of the memory to cache instead of releasing it after use possible (Restart nodes) /sql/storeSwapFilePath swap directory for deploying files for storing intermediate SQL results possible (Restart nodes) /sql/concurrency 4 concurrency for SQL processing possible (Restart nodes) [notes]

- The upper size limit cannot be changed online. To change the upper size limit, stop nodes and modify the node definition file.

- If the memory for SQL processing runs out, swapping (write operations to and read operation from swap files) will be performed.

- If excessive swapping is occurring, increase the size of the memory for SQL processing. See the second table under “How to check related information” below and check the items sqlStoreSwapXXXX to track how much swapping is occurring.

- The heading “changes made after startup” in the table above denotes whether the parameter can be changed after launching.

- possible (Restart nodes): The parameter can be changed by modifying the definition file specifying the parameter and then restarting the nodes.

How to check related information

-

Information on the setup value can be checked by running the operation command gs_paramconf while running nodes.

display item for gs_stat in JSON format setup value/current value description /sql/storeMemoryLimit setup value upper size limit of buffer for storing intermediate SQL results /sql/workMemoryLimit setup value upper size limit of an SQL work memory buffer /sql/workCacheMemory setup value of the memory specified in workMemoryLimit, the portion of the memory to cache instead of releasing it after use /sql/storeSwapFilePath setup value directory for deploying files for storing intermediate SQL results -

Information on the current value can be checked by running the operation command gs_stat while running nodes.

display item for gs_stat in JSON format setup value/current value description /performance/sqlStoreSwapRead current value number of reads from files in swapping (total) (unit: number of times) /performance/sqlStoreSwapReadSize current value read size from files in swapping (total) (in bytes) /performance/sqlStoreSwapReadTime current value load time from files in swapping (total) (in milliseconds) /performance/sqlStoreSwapWrite current value number of writes to files in swapping (total) (unit: number of times) /performance/sqlStoreSwapWriteSize current value write size to files in swapping (total) (in bytes) /performance/sqlStoreSwapWriteTime current value write time to files in swapping (total) (in milliseconds)

Memory for other purposes

Memory for retaining container and data file management information

Memory is not only used for a buffer for processing, but also used to retain the management information on containers and files. The size of the next two types of memory (memory for container management and memory for data management) increases relative to the number of containers and the amount of data, respectively.

- Memory for container management

- Always uses memory approximately amounting to (number of containers in the node) * 64 bytes.

- For example, if the number of containers is 10 million, then approximately 640 MB of memory would be used.

- Memory for data file management

- Always uses memory approximately amounting to (total amount of data) divided by (block size) * 32 bytes.

- For example, if the total amount of data is 1 TB and the block size is 64 KB, then approximately 500 MB of memory would be used.

Container design

The optimal container design largely depends on system and application requirements. This section describes the basics that help you with container design and provides related information.

Recommended design

Store data generated daily in time-series containers.

Containers are of two types: collections and time-series containers.

First, see if time-series containers can be used to store data; only when time-series containers are not available, consider using collections.

Use time-series containers when device sensor data and logs are generated on a minute-by-minute basis that results in a continuous increase, like IoT systems. Time-series containers are optimized for storing and referencing time-series data. As such, database buffer is more efficiently used than when collections are used.

The features of time-series containers include operation specific to time-series data. Gaining a better understanding of these features helps you with container design.

[References]

- This documentation (GridDB Database Administrators Guide)

- GridDB Features Reference

- GridDB Programming Guide

- GridDB TQL Reference

Use a large number of containers rather than small.

Processing for one container is handled on one processing thread for one node server. As such, designing containers to store a large amount of data in every container using a few containers cannot take advantage of node processing concurrency (multicore). For another thing, such design leads to excessive access to particular nodes, which means performance does not scale out when adding nodes.

For example, for time-series data, create time-series containers for each data source as below:

- For sensor data, create one time-series container for each individual sensor.

- For production data, create one time-series container for each product.

Even in case of the same kind of data, it is recommended to split the container into as many containers as the number of cluster nodes multiplied by the processing concurrency per node.

Running operations of multiple containers serially can degrade performance. Process split containers in parallel as much as possible using batch processing.

[References]

- This documentation (GridDB Database Administrators Guide)

- GridDB Features Reference

- GridDB Programming Guide

Split containers in a way that suits data.

Three methods are available to split containers: row splitting, column splitting, and table partitioning.

-

Row splitting

This method splits the same kind of data to match the search criteria. For example, do not continue to store sensor data of a particular type in one container; store only one day’s sensor data in one container. Storing data like this will make it easier to process in parallel such search as all data for a certain day, or one week’s data for a certain sensor and thus improve search performance. Storing data like this will make it easier to process in parallel such search as all data for a certain day, or one week’s data for a certain sensor and thus improve search performance. -

Column splitting

This method splits data referenced infrequently to store in separate containers. For example, do not store all device logs in one container; store only information frequently used for analysis in the same container and other information in a separate container.Storing logs like this will remove the need to reference unnecessary data and thus improve search performance.

-

table partitioning This method specifies particular columns as partitioning keys and thus automatically assigns data to multiple internal containers depending on the value of those columns. Split methods have classifications. Select a classification according to data.

Select the appropriate method to match search criteria or data characteristics.

[References]

- GridDB Features Reference

Minimal indexing maximizes performance.

Creating indexes appropriately to match the system’s data search criteria will improve search performance. Multiple indexes can be created in one container, but the number of indexes must be minimized to what is required.

This is because index data stresses database buffers. In systems where the memory size is not sufficient, excessive indexing decreases the buffer hit ratio and increases swapping, thereby leading to performance degradation.

It is possible to remove unnecessary indexes afterwards, but it may take a long time to complete removal, if a large amount of rows is already stored in the target container.

For this reason, take time beforehand to fully design containers and try to create only necessary indexes.

Designing containers in such a way that retrieval by primary key alone is enough to focus data will automatically result in minimal indexing. Moreover, such design automatically means splitting containers, which makes it possible to take advantage of each node’s processing concurrency.

To create effective indexes in the Advanced Edition, refer to the SQL optimization rules in the GridDB Advanced Edition SQL Tuning Guide.

[References]

- This documentation (GridDB Database Administrators Guide)

- GridDB Features Reference

- GridDB Programming Guide

- GridDB SQL Tuning Guide

Cluster configuration design

In designing cluster configuration, it is necessary to design the following items, to fulfill the requirements for availability, including the system’s utilization rates and RTO.

- Number of nodes configuring a cluster

- Failure detection (heartbeat)

- Replica processing (number of replicas, processing mode)

- Client failover timeout

GridDB autonomously places replicas (data) in the clusters configured by multiple nodes (server processes) and automatically determines the master node that manages the entire clusters. Even if a node fails, processing from the client application (NoSQL interface) can continue using failover.

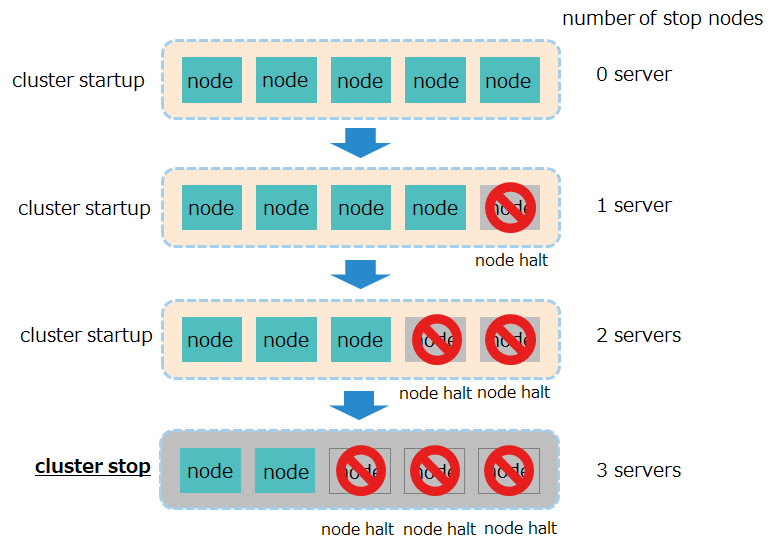

Number of nodes configuring a cluster

Depending on the number of node servers configuring clusters, the maximum number of node servers that can tolerate simultaneous node failures to allow cluster services to continue varies.

To meet the required utilization rates of the system, determine the number of nodes configuring the cluster, depending on the maximum number of node servers that can tolerate simultaneous node downs.

-

Cluster services will stop if more than half of the number of node servers configuring a cluster results in a node down.

Number of running cluster servers - For a system that requires availability, it is recommended to have at least three node servers that configure a cluster. With less than three node servers available, clusters will go down if one node server stops.

-

The number of configured nodes and the maximum number of stop nodes where clusters can operate have the following relationships:

number of configured nodes maximum number of stop nodes where clusters can operate 1 server 0 (low availability) 2 servers 0 (low availability) 3 servers 1 server 4 servers 1 server 5 servers 2 server

-

- For a system that does not require availability, it is possible to have one node server to run it with a single configuration.

[notes]

- In case of planned outages, including hardware maintenance, stop one node server at a time. If multiple nodes are stopped, in case a failure occurs in the rest of the nodes, the number of down servers might exceed half the total number of servers, which could stop the cluster.

If a node goes down, the following recovery processing will automatically be performed:

- Cases where the master goes down

- Redetermine the master.

- Relocate partitions (replicas).

- Cases where followers go down

- Relocate partitions (replicas).

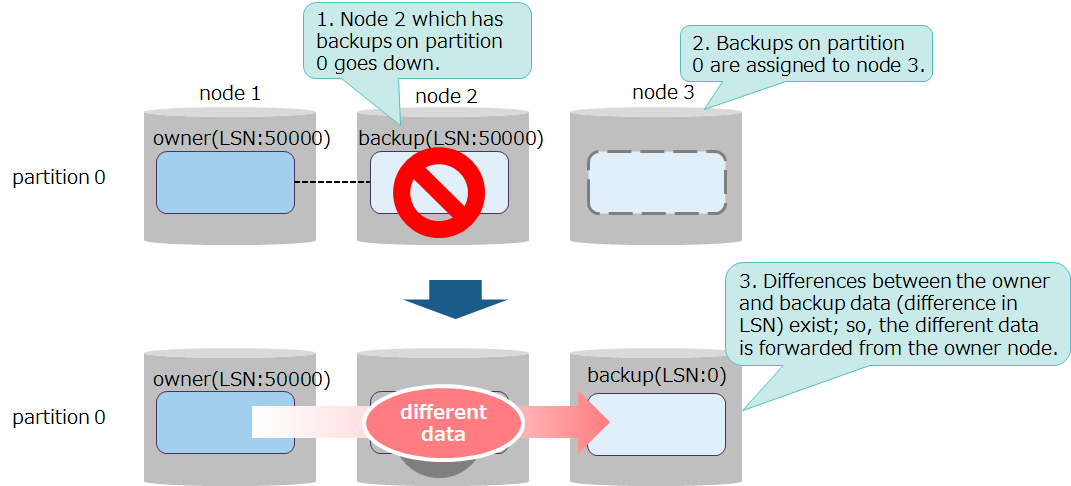

Partitions (replicas) are relocated not only when a cluster is contracted due to a node down, but also when a cluster is extended.

Before relocation, if backup data is older than owner data, the cluster will forward the delta data to the backup node and synchronize the owner and backup data.

- Whether or not differences between the owner and backup data exist is determined by the value of LSN (Log Sequence Number) each piece of data has. The value of LSN increases for each data update; hence, a greater value means newer data.

- Synchronization are of two types, in terms of the differences between the owner and backup data.

- short-term synchronization

- performed when the data differences are small. The amount of data forwarded is small; processing completes in less processing time.

- long-term synchronization

- performed when the data differences are large. The amount of data forwarded is large; processing may take a long time to complete.

- short-term synchronization

Synchronization described above is automatically performed by the server. There is no item that needs design work.

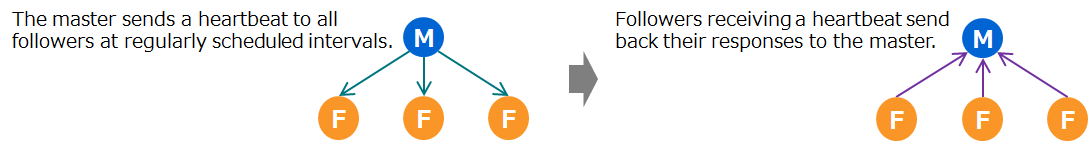

Failure detection (heartbeat)

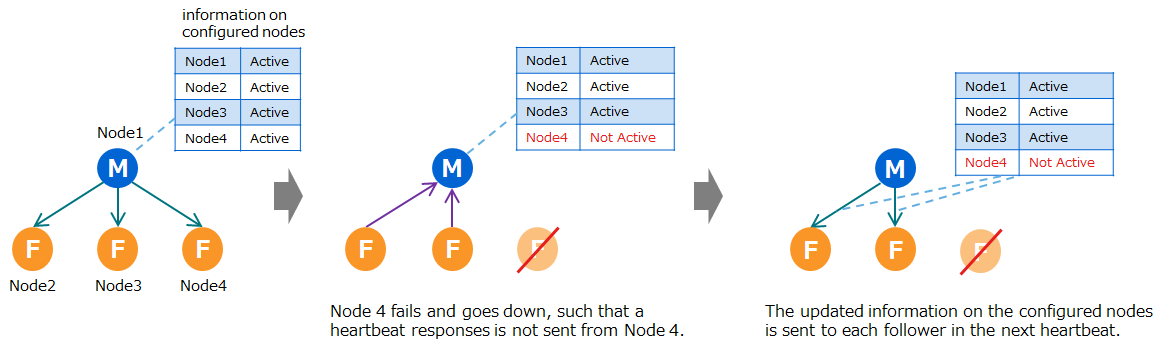

A cluster detects failure through a heartbeat between nodes. To check the survival of nodes, the master sends a heartbeat to all followers at regularly scheduled intervals. Followers receiving a heartbeat send back their responses to the master.

To check if a heartbeat sent from the master has arrived, followers check the received time of a heartbeat at regularly scheduled intervals.

To reflect the latest data between the master and followers, a heartbeat exchanges information on configured nodes and partition information management tables between the master and followers.

- Cases where the followers’ responses are not sent to the master

- If the followers’ heartbeat responses are not sent to the master twice in succession, the master will assume the followers have gone down.

- The master then updates the information on the configured nodes it has and sends it to each follower in the next heartbeat.

- Finally the cluster performs processing for failure detection

Cases where the followers' heartbeat responses are not sent - Cases where the master’s heartbeat is not sent to the followers

- If the master’s heartbeat is not sent to the followers twice in succession, the followers will assume the master has gone down.

- To determine a new master, all followers temporarily become a submaster. Then, one node server is selected from among all nodes to be the next master and the rest will become followers again.

- Finally the cluster performs processing for failure detection

The length of regularly scheduled intervals for both the master and followers is by default 5 seconds (value of /cluster/heartbeatInterval in gs_cluster).

related parameters

-

cluster definition file (gs_cluster.json)

parameter initial value description /cluster/heartbeatInterval 5 seconds - regular interval at which the master sends a heartbeat

- regular interval at which the followers check the time to receive a heartbeat from the master -

node definition file (gs_node.json)

parameter initial value description /cluster/serviceAddress 127.0.0.1 address used for sending/receiving a heartbeat /cluster/servicePort 10010 port number used for sending/receiving a heartbeat

Replica processing (number of replicas, processing mode)

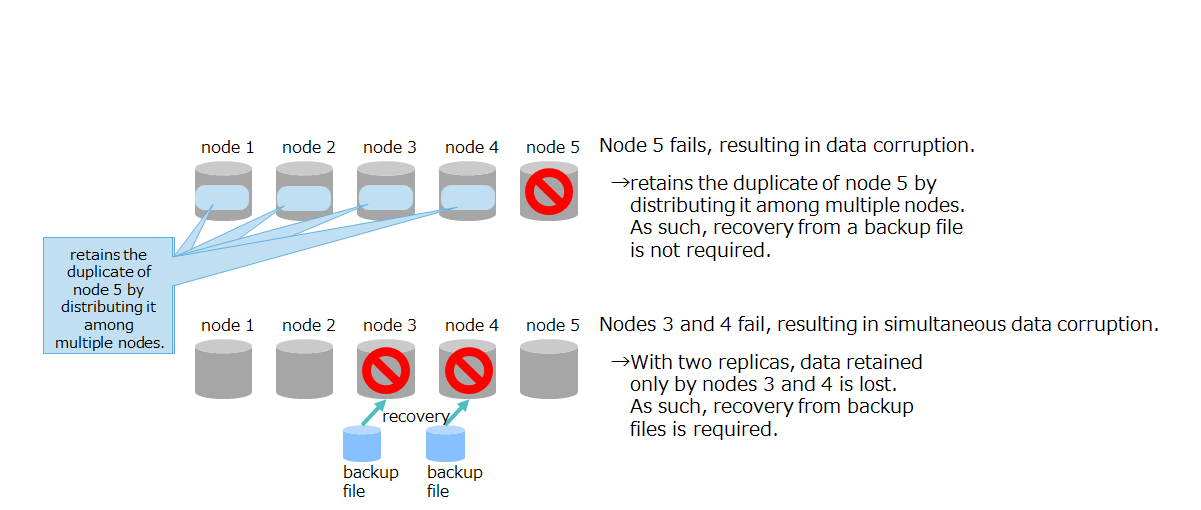

For greater data availability, GridDB creates replicas and retains them by distributing them among multiple nodes. For this reason, even if a node fails, GridDB can continue data access using replicas in the rest of the nodes.

The next several subsections explain points of design for replicas; replication in which replicas are created; and the mechanism of synchronization where replicas are recovered from failure.

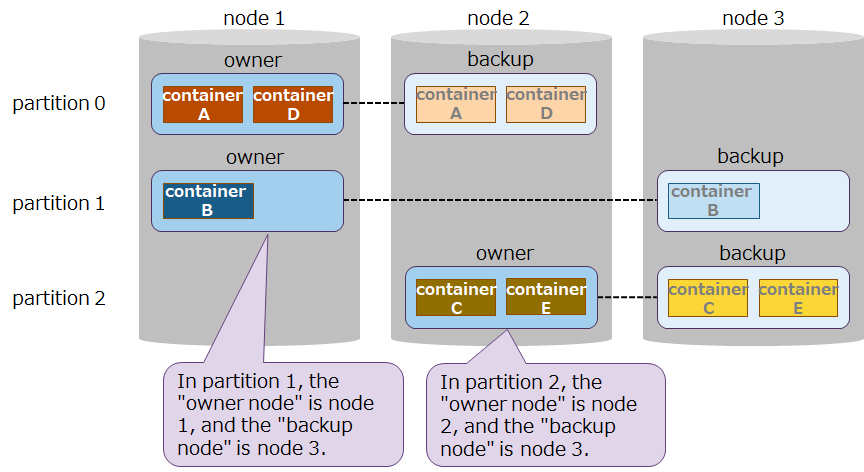

Replica

A replica has the following features:

- The data unit for a replica is the partition.

- Among replicas, the master’s data is called the “owner” and the duplicated data from the owner is called the “backup.” Moreover, the nodes that have each of the data above are called the “owner nodes” and the “backup nodes,” respectively.

-

Registration and reference by an application are applied to the owner. Backup is intended to be data for recovery when a failure occurs in the owner. Therefore, an application never access it .

Replica - The owner and backup in each partition are distributed to place them in different nodes. In which nodes these replicas are placed is automatically determined by the cluster.

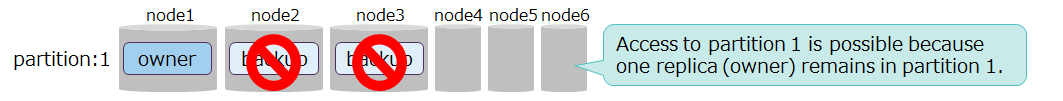

The number of replicas is determined by the maximum number of node servers that can withstand multiple failures to ensure data access, as dictated by the system’s utilization rates.

A multiple failure refers to a failure occurring in multiple nodes which causes them to go down all at once.

- If the number of nodes where multiple failures are occurring is less than that of replicas, the client can continue data access.

- In this case, temporarily fewer replicas are present in a cluster, which results in a decreased availability state, called “replica loss.” Eventually, new replicas are automatically created in synchronization, which sets the cluster back to the normal state.

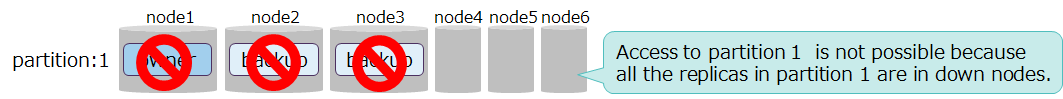

- If the number of nodes where multiple failures are occurring is equal to or greater than that of replicas and all the replicas in a partition are placed in the nodes which have gone down due to multiple failures, then data in that partition will be inaccessible.

- A partition where none of the owner and backup data is available is in a state called “owner loss.” Unless nodes which have replicas are recovered to enable them to participate in a cluster, the corresponding partition remains inaccessible.

- However, in some multiple failures where multiple nodes have gone down, if node downs occur at certain intervals as opposed to simultaneously, synchronization may be run during this period to create replicas in other nodes, so that the client can continue data access.

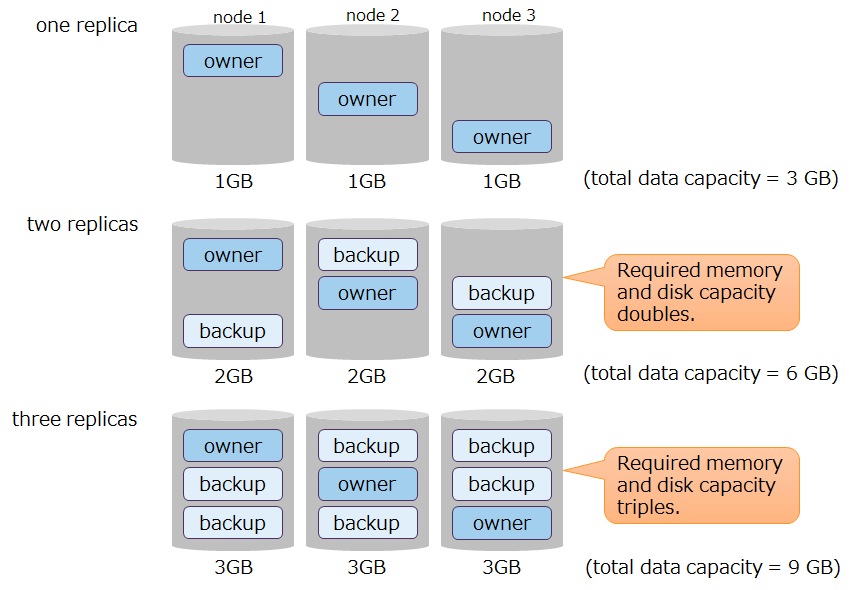

More replicas means greater availability with the following adverse effects:

-

effect on the amount of data

- More replicas means greater amount of data, which in turn increases the amount of memory and disks needed for processing.

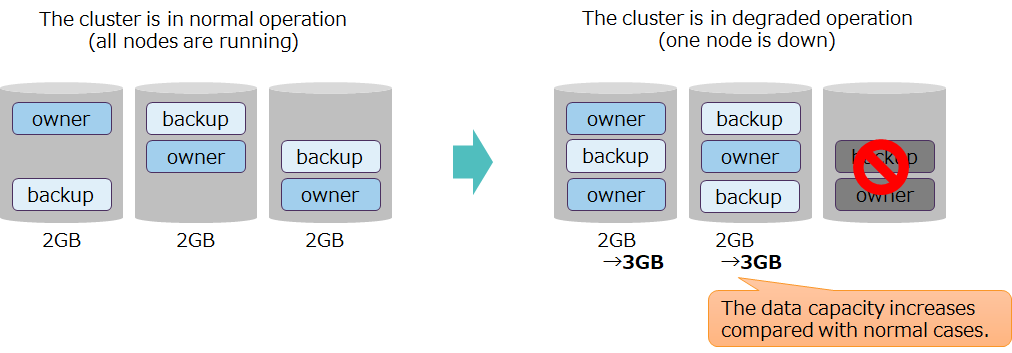

Relationship between the number of replicas and the amount of data - While a cluster is in degraded operation, data in the nodes that are stopped is placed in other nodes, such that during degraded operation, the amount of data handled by a node increases.

Relationship between the number of replicas and the amount of data

Below is a guideline for the number of replicas:

- For a multi-node cluster configuration, the recommended number of replicas is 2 (which is the default value in a definition file).

- For a single configuration, the recommended number of replicas is 1. Specifying 2 or above for the number of replicas does not have any effect on availability, because replicas are created in the same node.

- If the system has sufficient resources for memory and disks and requires high availability, consider having at least 3 replicas.

- In this case, set the number of replicas to equal to or less than half the number of configured nodes, such that,

- number of replicas <= (number of configured nodes + 1) /2

- Unless the above expression is satisfied, if node servers of less than the number of replicas fail, the system cannot satisfy the criteria for running the services (more than half the nodes are running), which will stop the services, regardless of whether data exists in replicas.

- In this case, set the number of replicas to equal to or less than half the number of configured nodes, such that,

related parameters

-

cluster definition file (gs_cluster.json)

parameter initial value description changes made after startup /cluster/replicationNum 2 number of replicas possible (Restart the cluster)

[notes]

- The heading “changes made after startup” in the table above denotes whether the parameter can be changed after launching. -possible (Restart the cluster): The parameter can be changed by modifying the definition file specifying the parameter and then restarting all the nodes and the cluster.

Replication processing

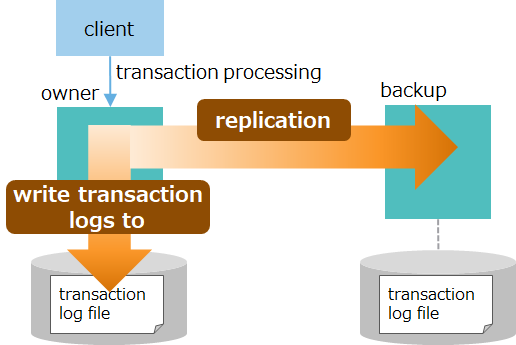

Data updated in transaction processing, including data registration and deletion can be written to disks and made permanent. This protects data updates from missing data in case of a node failure. Further, availability can be increased by forwarding updated data to a node containing backup data and replicating it in this node through replication.

In transaction processing, each of writing to log files and replication has two modes, including synchronous and asynchronous modes. Select one based on the system’s availability and performance requirements.

-

Replication modes

mode description asynchronous

(default)For each transaction processing, forwards updated data to a backup node but afterwards does not wait for receive completion afterwards. semi-synchronous For each transaction processing, forwards updated data to a backup node and afterwards waits for receive completion afterwards. (The process of reflecting updated data at the backup node is asynchronous. ) -

transaction log write mode

mode description asynchronous

(default)Regardless of the timing of transaction processing, flushes to transaction log files at regular intervals. synchronous For each transaction processing, flushes to transaction log files. - An asynchronous mode is a mode for such systems as IoT systems which register data frequently. Asynchronous writing improves speed. While the likelihood of missing data increases, GridDB simultaneously forwards data for replication which results in an increase in availability even in an asynchronous mode. For example, consider the case where, after notifying the application about completion of transaction processing, the machine for owner data goes down prior to the log write cycle. In this case, data updated by the owner is lost, but since updated data is forwarded to the backup node, the likelihood of missing data can be reduced.

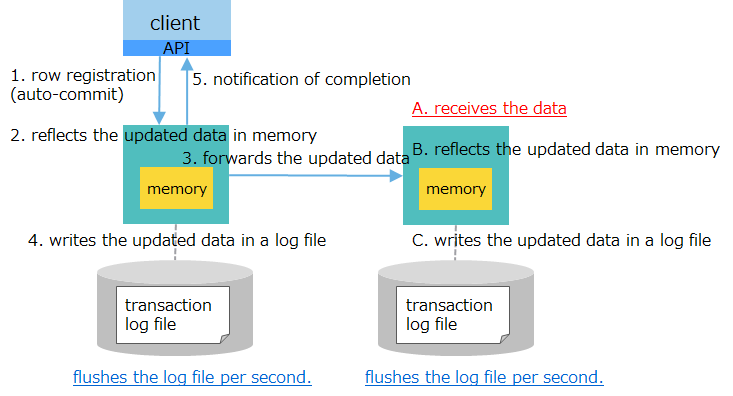

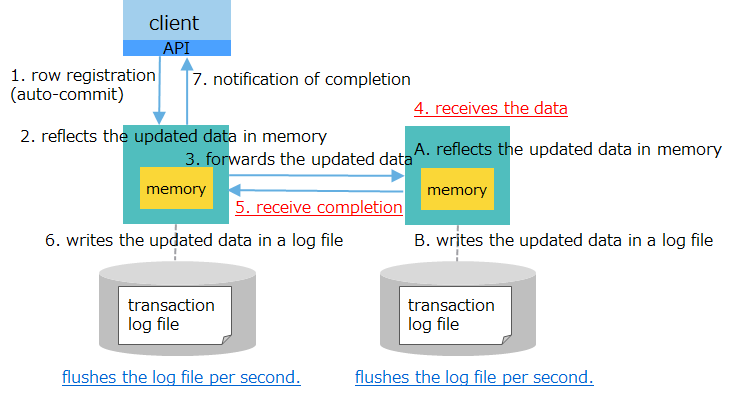

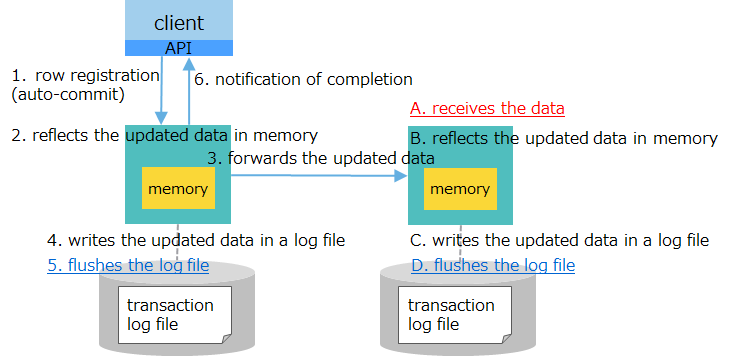

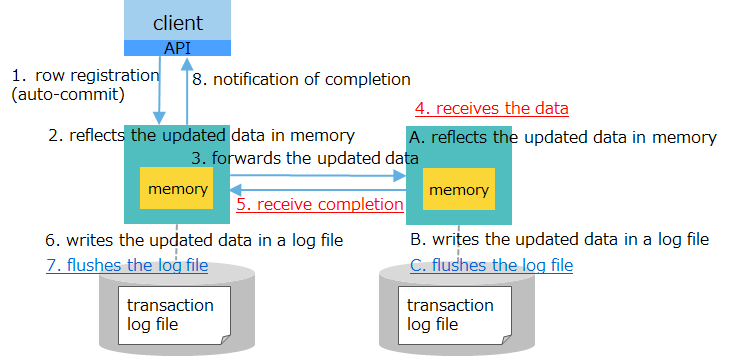

The table below explains combinations of transaction logs and replication modes, and process flows and performance for each combination.

| mode combination |

process flow | performance | state of data upon notification of completion sent to the application |

|---|---|---|---|

| [1] log: asynchronous (1 sec.) replication: asynchronous (default) |

|

high-speed | - flushes updated data within a second - forwards data to a backup node (but has not acknowledged receipt) |

| [2] log: asynchronous (1 sec.) replication: semi-synchronous |

|

moderately high speed | - flushes updated data within a second - forwards data to a backup node |

| [3] log: synchronous replication: asynchronous |

|

moderately low speed | - completes flushing updated data - forwards data to a backup node (but has not acknowledged receipt) |

| [4] log: synchronous replication: semi-synchronous |

|

low speed | - completes flushing updated data - forwards data to a backup node |

- in descending order by performance: [1] > [2] > [3] > [4] (where the number indicates the numbers under the heading “mode combination” in the table above)

- in descending order by availability: [4] > [3] > [2] > [1] (where the number indicates the numbers under the heading “mode combination” in the table above)

Below is a guideline for setups for transaction log write and replication modes.

- For normal systems, the recommended choice is a combination of asynchronous (1 sec.) for transaction logs and asynchronous for replication. (which is the default value in a definition file).

- This is a setup which provides a minimum level of availability, since both modes are asynchronous and hence high-speed, together with the mechanism of how data is forwarded to an application.

- There is a trade-off between performance and availability. For systems which require very high availability and reliability, consider combining semi-synchronous and synchronous modes in terms of balance with performance requirements.

related parameters

-

cluster definition file (gs_cluster.json)

parameter initial value description changes made after startup /transaction/replicationMode 0 (asynchronous) mode for replication

asynchronous: 0 or ASYNC

semi-synchronous: 1 or SEMISYNCpossible (Restart the cluster) /transaction/replicationTimeoutInterval 10s in semi-synchronous replication, time to wait for receive completion by a backup node. possible (Restart the cluster) -

node definition file (gs_node.json)

parameter initial value description changes made after startup /dataStore/logWriteMode 1 transaction log write mode

asynchronous: Specify cycle time in seconds using an integer x (where 1 ≤ x ≥ 231).

synchronous: 0 or -1possible (Restart nodes)

[notes]

- The heading “changes made after startup” in the table above denotes whether the parameter can be changed after launching.

- possible (Restart the cluster): The parameter can be changed by modifying the definition file specifying the parameter and then restarting all the nodes and the cluster.

- possible (Restart nodes): The parameter can be changed by modifying the definition file specifying the parameter and then restarting the nodes.

Client failover timeout

Even if a node fails, the application can continue accessing data thanks to failover functionality provided by a node and a client API (NoSQL interface).

The rest of this subsection gives a detailed explanation of how failover is performed when a node fails by following all steps ranging from an occurrence of a failure to recovery.

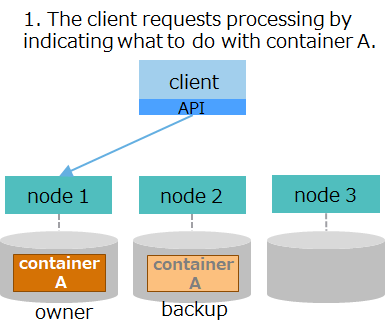

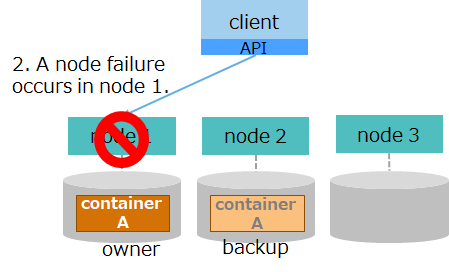

| flow | figure | description |

|---|---|---|

| (1) client’s request for processing | <p> </p> </p> |

1. The client requests processing by indicating what to do with a container through a client API. The client API connects to node 1, which stores container A to operate on. |

| (2) failure occurrence | <p> </p> </p> |

2. While processing a request from the client, the node goes down due to a node failure in node 1. |

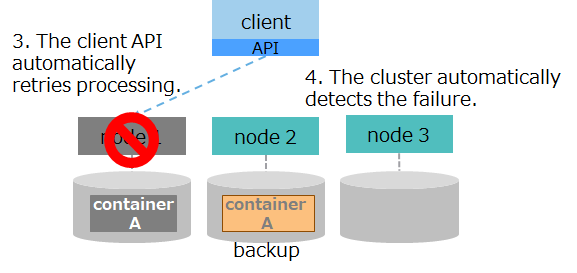

| (3) automatic recovery 1 | <p> </p> </p> |

3. The client API loses connection to node 1 and automatically retries processing. 4. The cluster automatically detects node 1 has gone down and reconfigures the cluster using the remaining nodes. |

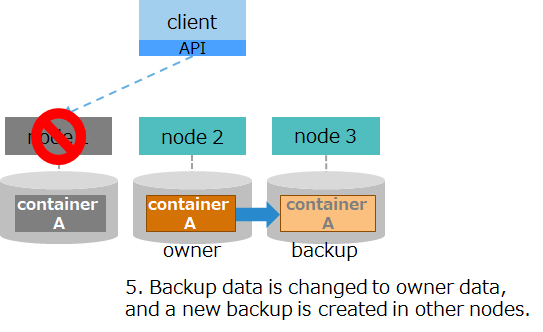

| (4) automatic recovery 2 | <p> </p> </p> |

>5. As a replacement for owner data in node 1, the cluster changes backup data in node 2 to owner data. In the background, the cluster creates backup in other nodes. |

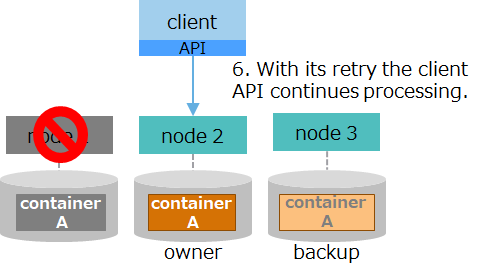

| (5)(5) continuation of processing | <p> </p> </p> |

6. The client API establishes connection to the new owner of container A, namely node 2, and automatically retries processing. The client continues processing without causing an error. |

If the node in the partition the client API is accessing fails, the client API automatically retries processing. If, thanks to the cluster’s autonomous data placement, the partition recovers while retrying, the client API automatically continues processing. The retrying time, or “failover timeout,” can be changed by specifying a property for application connection processing for the application.

- Cases where processing cannot continue, leading to an error

- Cases where extra time is required for synchronous processing for autonomous data placement and the partition does not recover within a failover timeout.

- Cases where a node failure leads to missing data and the partition enters a state of owner loss.

[notes]

- Only the NoSQL interface has a client failover functionality.

related parameters

-

property for connecting to a cluster from the client API

parameter initial value description failoverTimeout 120 seconds client failover timeout

Use an application program to specify.

Network design

In GridDB, it is necessary to design two types of networks, namely nodes and clusters.

Node network configuration

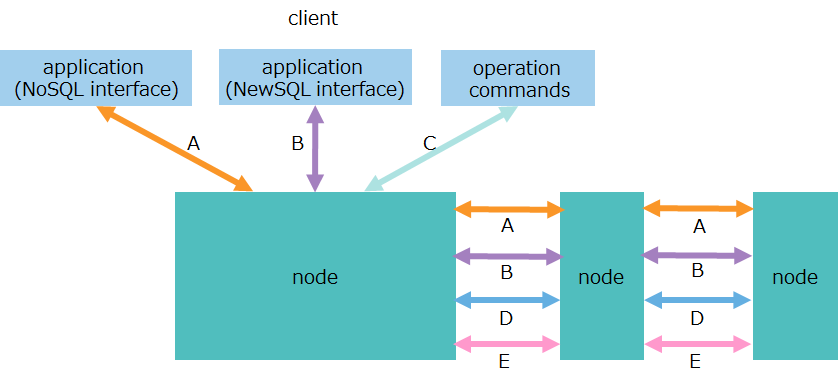

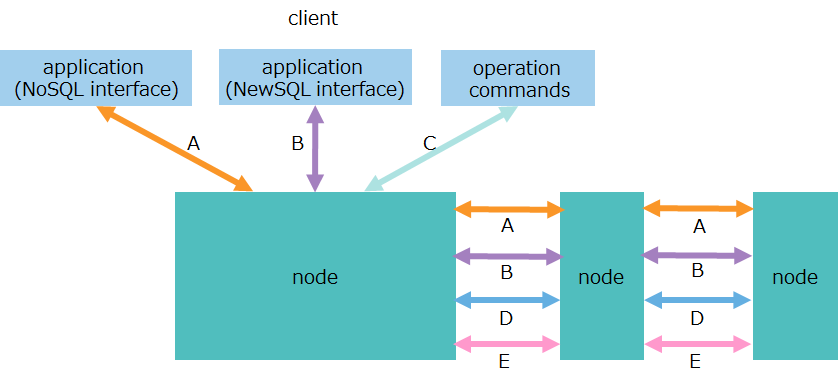

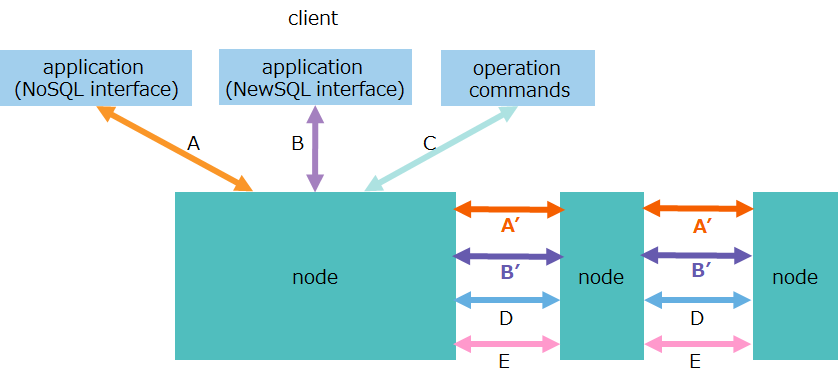

The GridDB nodes perform a variety of network communication tasks to communicate with the client and other nodes over the network. Communication paths used for this are of several types as shown in the table below:

| No. | item | communication path | description |

|---|---|---|---|

| A | transaction processing | - client-node - between nodes |

- communication for data operations over a NoSQL interface - communication for replication processing of transactions |

| B | SQL processing | - client-node - between nodes |

- communication for data operations over a NewSQL interface - communication for processing SQL by parallel and distributed computing |

| C | production and management operations | client-node | communication for accepting an operation request for production and management |

| D | cluster management | between nodes | communication for sending and receiving a heartbeat used for checking the survival of nodes, as well as information on cluster management |

| E | synchronization | between nodes | communication for data synchronization through partition relocation |

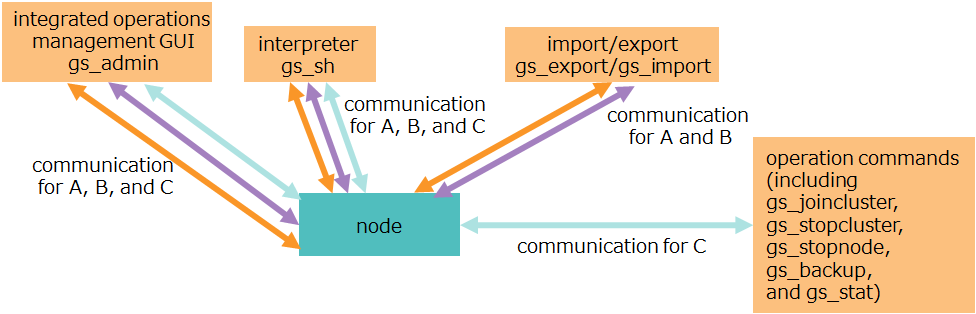

Main operation tools provided by GridDB use multiple types of communication as illustrated in the figure below:

| operation tools | communication types to be used |

|---|---|

| integrated operations management GUI (gs_admin) | A. transaction processing B. SQL processing C. production and management operations |

| interpreter (gs_sh) | A. transaction processing B. SQL processing C. production and management operations |

| operation command (including gs_joincluster and gs_stat) | C. production and management operations |

| export/import tool (gs_export, gs_import) | A. transaction processing B. SQL processing |

[notes]

- For IP addresses to be used for communication, specify the ones that can communicate with the client and all other nodes.

- To specify a host name for an IP address, make sure that the name is resolvable for the client and all other nodes.

- If an IP address (seviceAddress) is omitted in a node definition file, the IP address associated with the machine’s host name is used as a default.

- How to check an IP address associated with a host name:

$ hostname -i 172.0.10.1

- How to check an IP address associated with a host name:

related parameters

-

node definition file (gs_node.json)

- For each communication, set an IP address (serviceAddress) and a port number (servicePort).

parameter initial value description changes made after startup /cluster/serviceAddress -

(IP address that corresponds to a host name)address for cluster management possible (Restart nodes) /cluster/servicePort 10010 port number for cluster management possible (Restart nodes) /sync/serviceAddress -

(IP address that corresponds to a host name))address for synchronization possible (Restart nodes) /sync/servicePort 10020 port number for synchronization possible (Restart nodes) /system/serviceAddress -

(IP address that corresponds to a host name)address for production and management operations possible (Restart nodes) /system/servicePort 10040 port number for production and management operations possible (Restart nodes) /transaction/serviceAddress -

(IP address that corresponds to a host name)address for transaction processing possible (Restart nodes) /transaction/servicePort 10001 port number for transaction processing possible (Restart nodes) /sql/serviceAddress -

(IP address that corresponds to a host name))address for SQL processing possible (Restart nodes) /sql/servicePort 20001 number for SQL processing possible (Restart nodes)

[notes]

- The heading “changes made after startup” in the table above denotes whether the parameter can be changed after launching.

- possible (Restart nodes): The parameter can be changed by modifying the definition file specifying the parameter and then restarting the nodes.

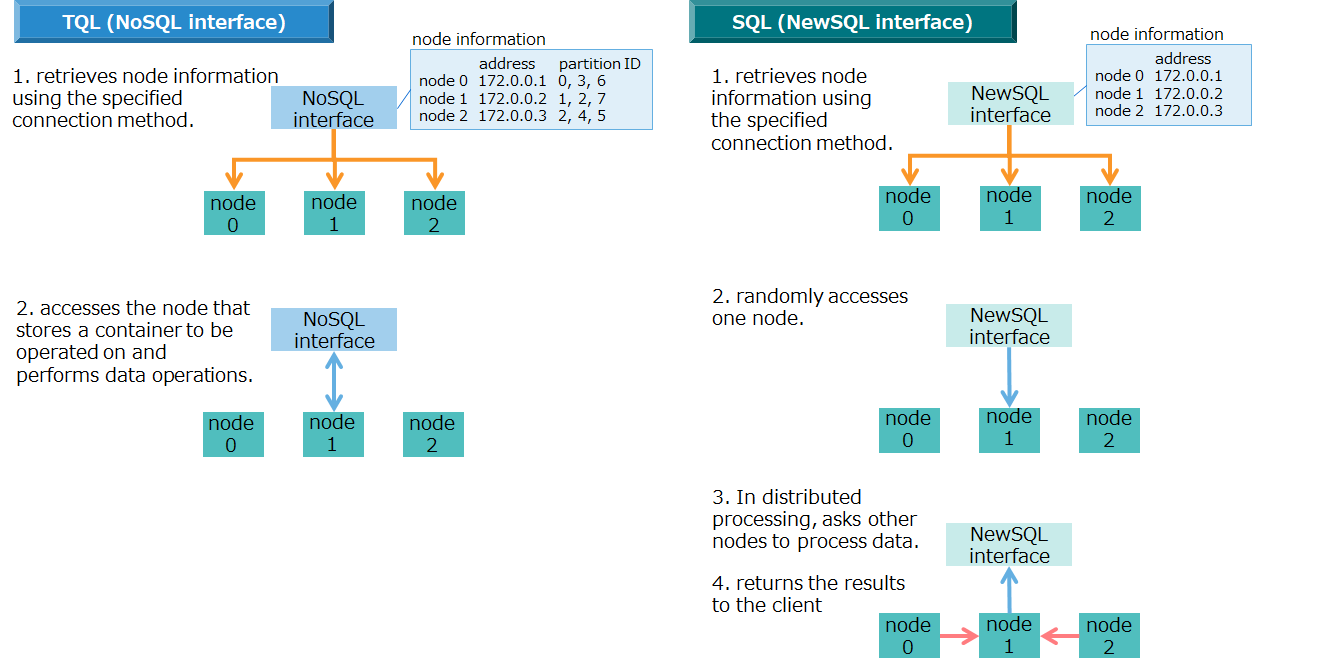

In performing data operations using TQL or SQL, the client connects to a cluster through a NoSQL/NewSQL interface to perform processing. Note that in the case of SQL, data operations using SQL involve distributed processing between nodes and hence require a high amount of communication between nodes.

- In TQL (NoSQL interface), data operations are performed on a per-container basis, which means the client communicates with the node which has that container.

- In SQL (NewSQL interface), data operations are performed using parallel and distributed computing, which means the client randomly accesses one node server and processes the SQL by distributing it to each node .

The points of design required for a node network are port numbers and bandwidth. The next several subsections explain points of design for each.

Port number

Port numbers GridDB nodes use are of several types as shown in the tables below:

By default, the numbers in the two tables are used. If the port number that the system plans to use is used by another application or elsewhere, then the default port number must be changed.

| No | item | description | port number |

|---|---|---|---|

| A | transaction processing | communication port for performing transaction processing | 10001 |

| B | SQL processing | communication port for performing SQL processing (AE only) | 20001 |

| C | production and management operations | communication port for accepting an operation request for production and management | 10040 |

| D | cluster management | communication port for sending and receiving a heartbeat used for checking the survival of nodes, as well as information on cluster management | 10010 |

| E | synchronization | communication port for data synchronization through partition relocation | 10020 |

To use multicast communication as a connection method, the following three additional port numbers in the table below are used:

| No | item | port number |

|---|---|---|

| F | multicast for transaction processing | 31999 |

| G | multicast for SQL processing (AE only) | 41999 |

| H | multicast for cluster management | 20000 |

Bandwidth

GridDB communicates a large volume of data. Therefore, the recommended bandwidth for the network bandwidth is 10 GbE.

The following three communication paths involve large data volumes:

- client-to-node communication over the NoSQL interface (A)

- “The traffic will be high while large amounts of data are being obtained from the cluster, for example by running a search that returns many hits using TQL.

- client-to-node communication over the NewSQL interface (B)

- “The traffic will be high while large amounts of data are being obtained from the cluster, for example by running a search that returns many hits using SQL.

- node-to-node communication by processing SQL using parallel and distributed computing (B)

- The traffic will be high if processing SQL in multiple nodes using parallel and distributed computing, for example by joining multiple containers.

When there is a shortage of network bandwidth due to a large amount of data traffic, it is recommended to increase bandwidth using multiple network interface cards (NICs).

[Example] normal case

-

Use the same IP address for all types.

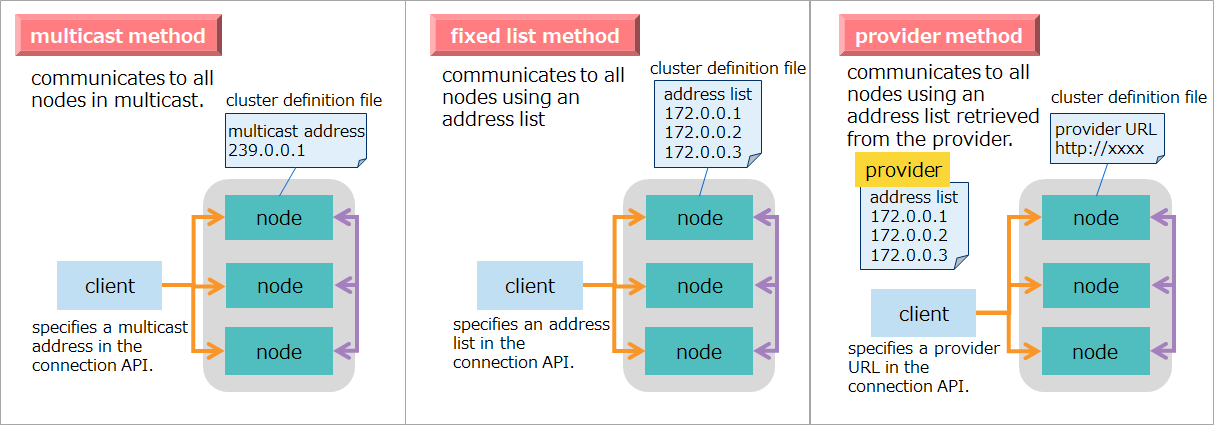

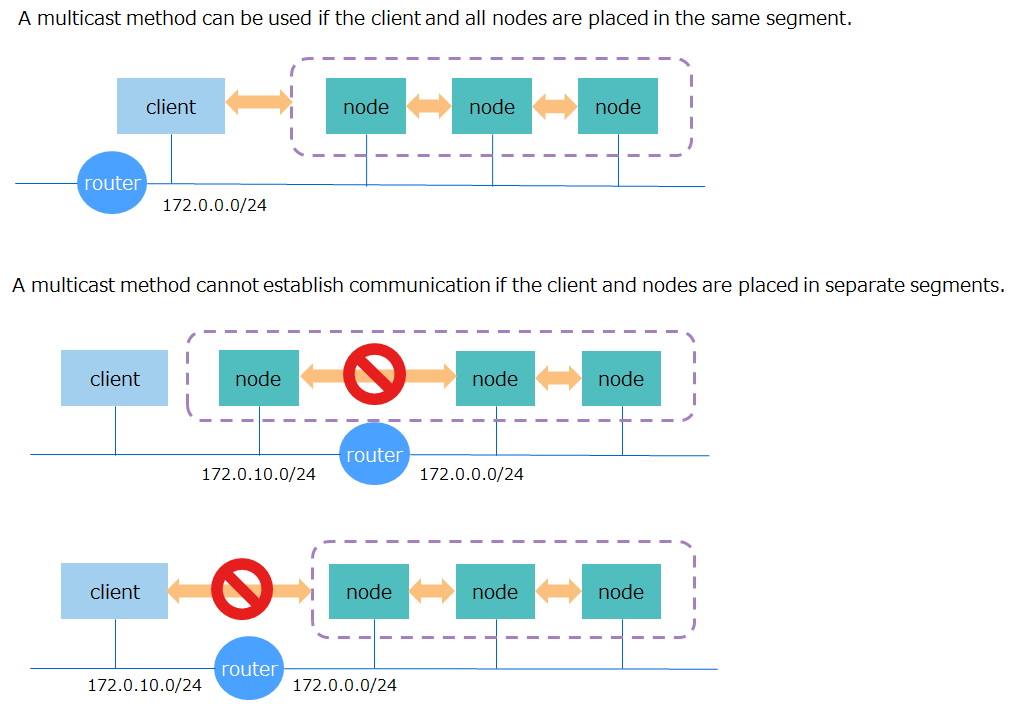

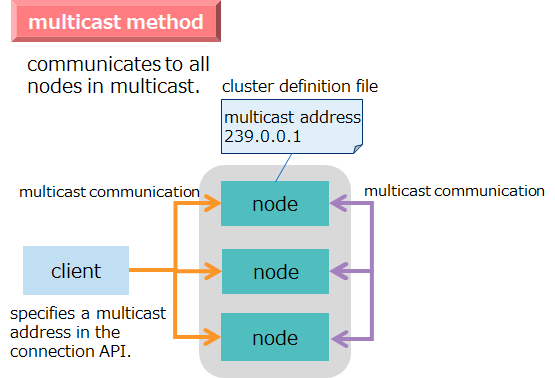

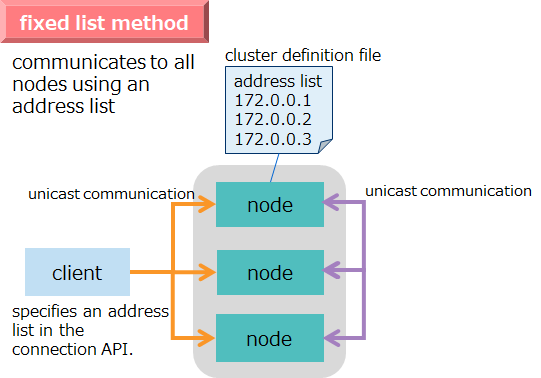

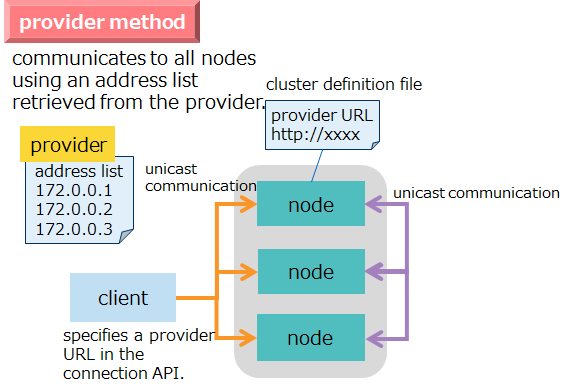

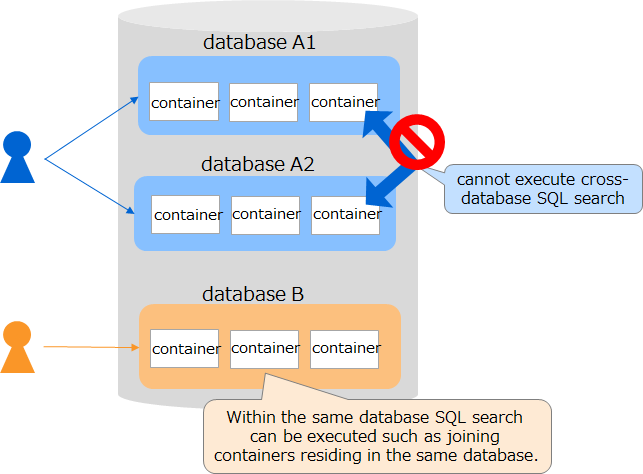

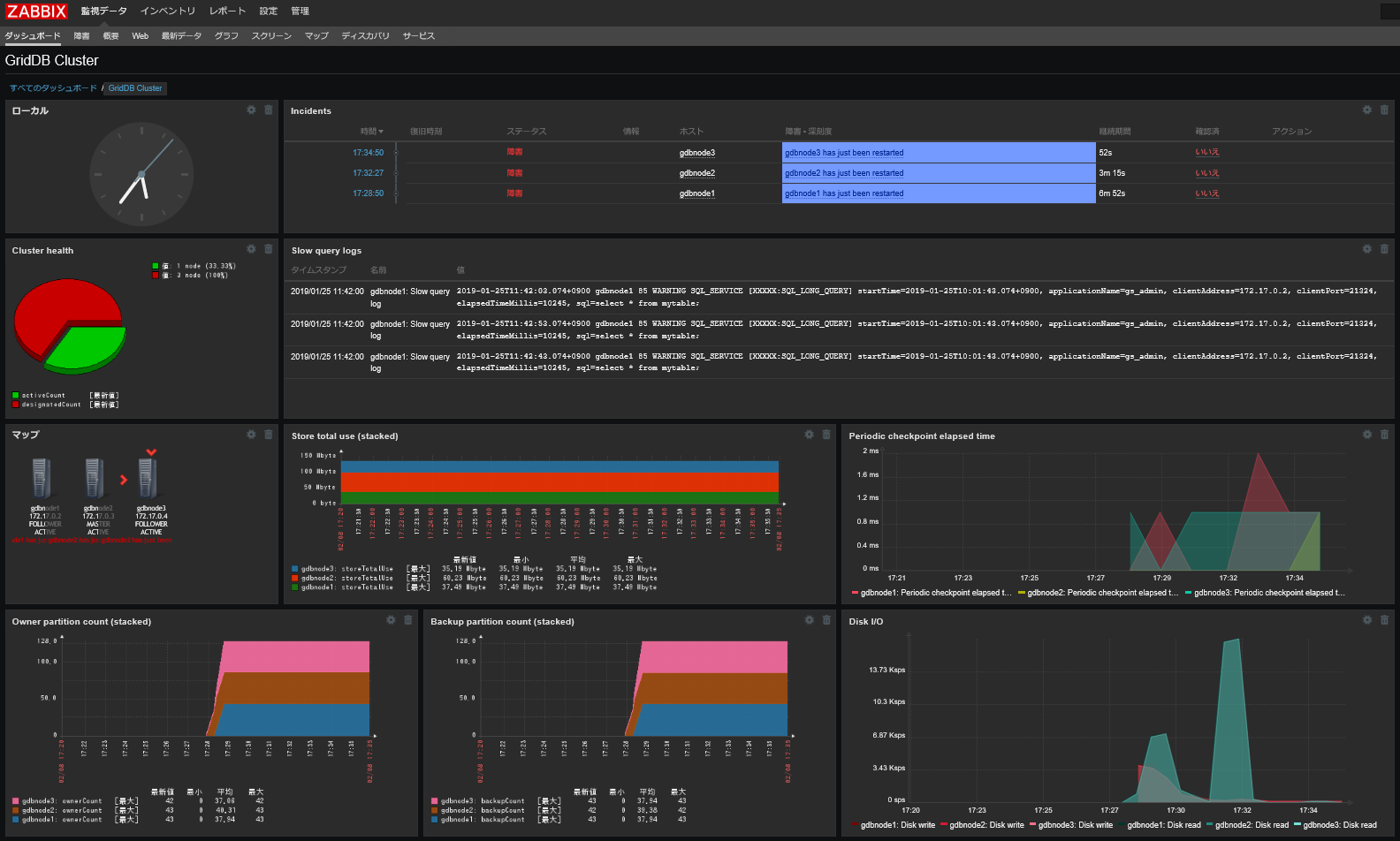

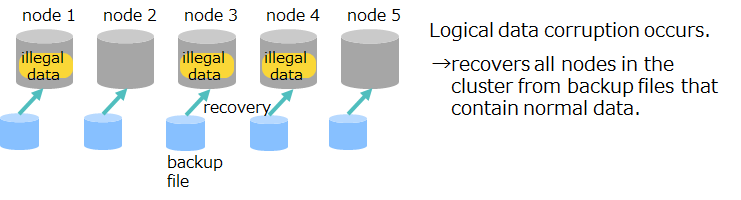

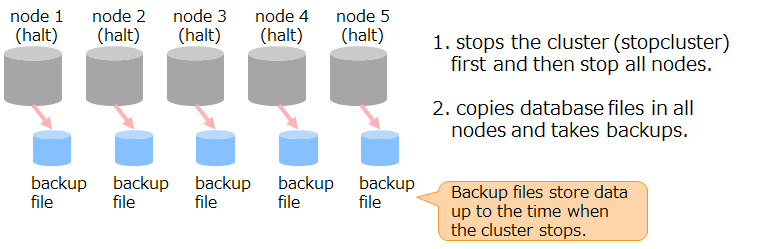

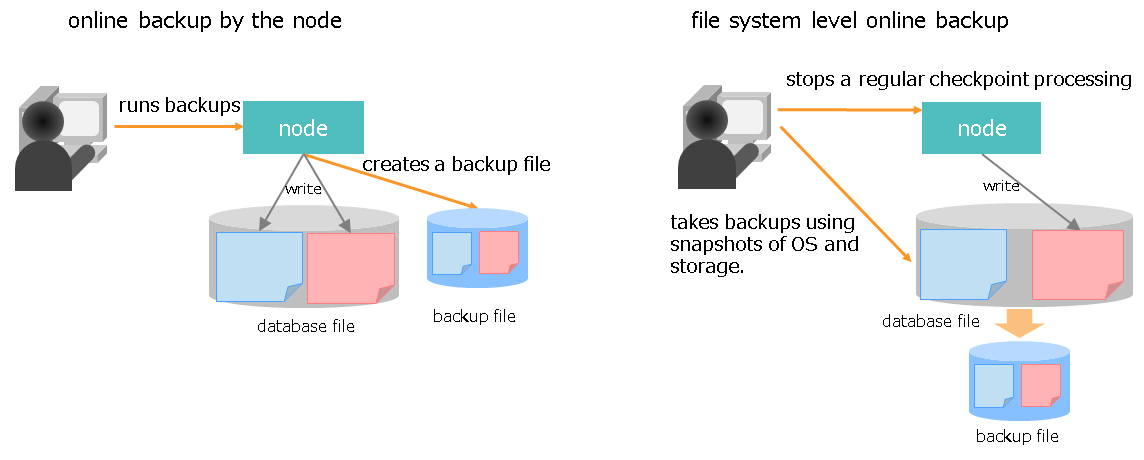

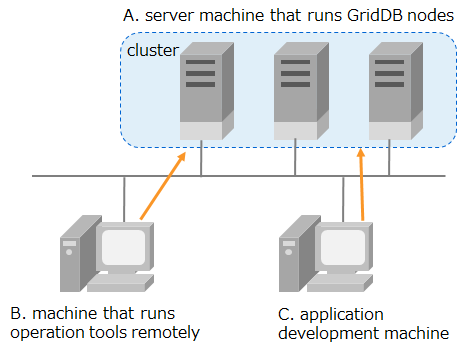

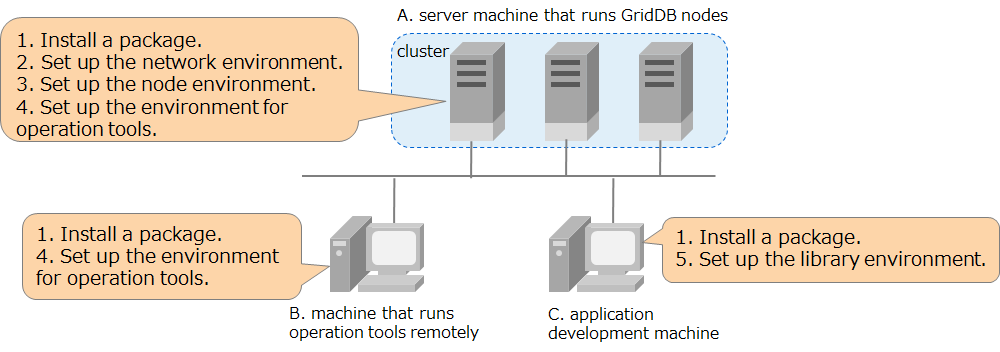

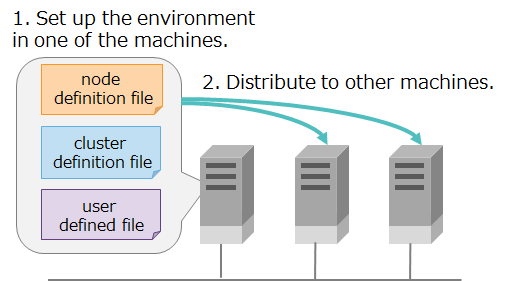

No. item IP address A transaction processing 172.0.10.1 B SQL processing 172.0.10.1 C production and management operations 172.0.10.1 D cluster management 172.0.10.1 E synchronization 172.0.10.1